Every year when Google and Apple release their latest OS updates, some features are clearly inspired by the other company. Whether it's a new customization option, a design change, or an accessibility feature, someone always did it first. Here are the five biggest iOS 16 features that Google did first and that Android phones can do right now.


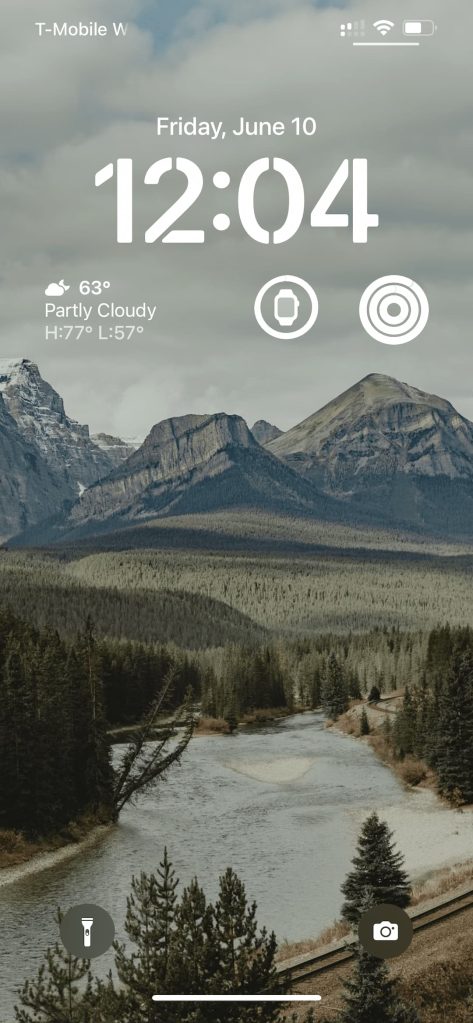

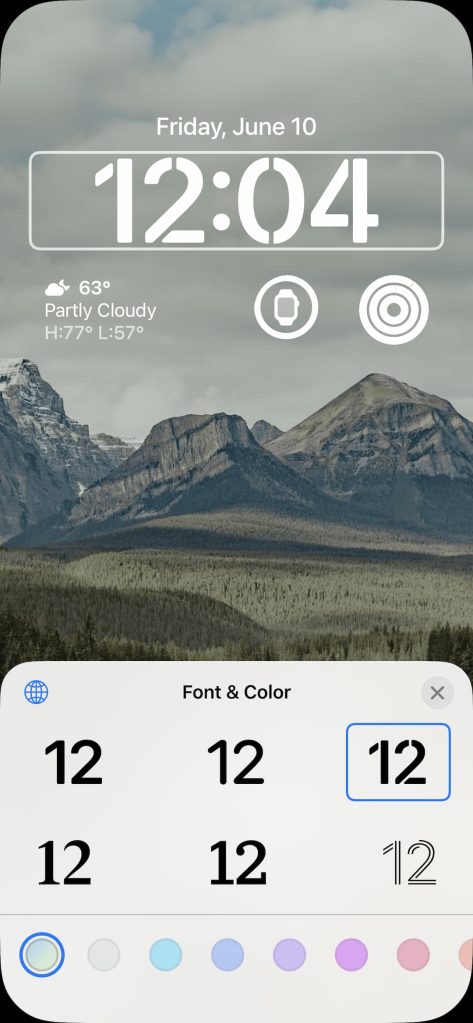

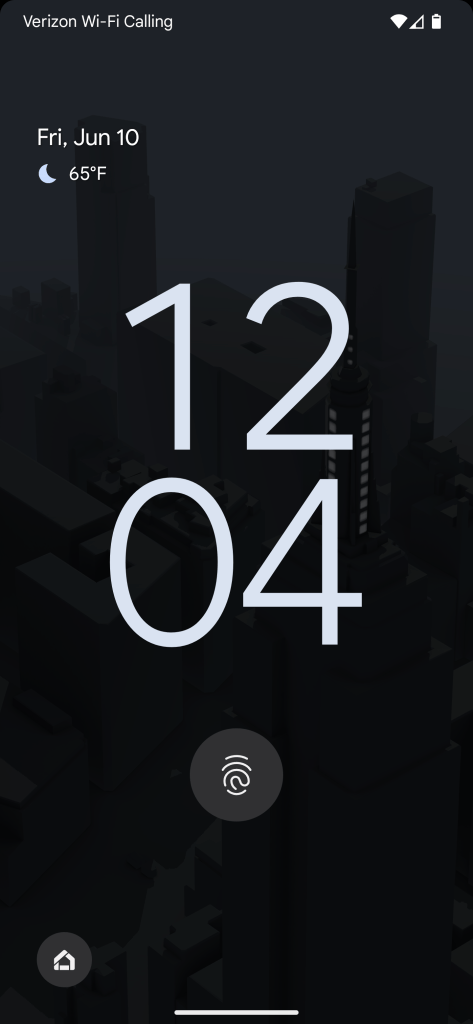

Smarter Lock Screen

I'll be the first to admit that the new lock screen on iOS 16 looks fantastic. The way Apple uses the depth effect to add realism to photos and have them interact with the clock is genius. Having Apple Watch-style complications for health, stocks, battery, and weather is great. You can change the clock font and the clock/complication color to either match the wallpaper or use a color of your choice. Everything is up to you.

Google did a lot of this first, though. Google has the At a Glance widget, which intelligently provides similar information by predicting what you'll need. It always shows the weather and date, while other information, like upcoming events, tropical storm warnings, or boarding passes before a flight, appears when it's relevant. That is more powerful than what Apple offers, although you can't manually choose what you want to see all the time. The clock color can also change: it pulls from the Material You color palette, which matches your wallpaper. You get four color palette options on Android 12 and up to 12 options on Android 13.

A much smaller feature Apple added is Live Activities, which lets apps add a widget to the bottom of the lock screen with information like sports scores or how far away your Uber is. This is essentially what Android notifications have let app developers do for years.

The new iOS 16 Lock Screen is great for iOS users: it looks great and works well, but it's also something Android users have been experiencing for years. iOS users are lucky to get it now, even though it's safe to say Apple was heavily inspired by Google.

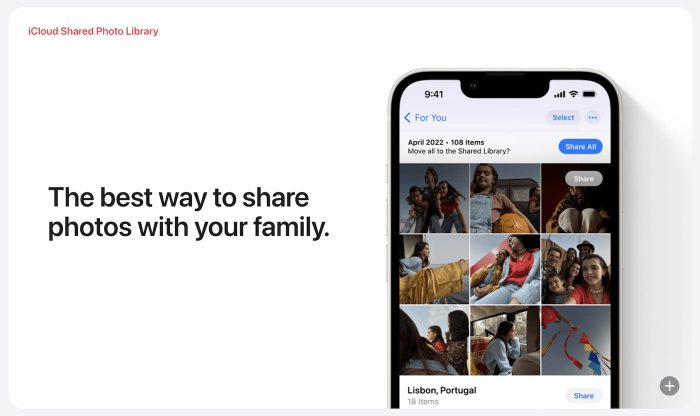

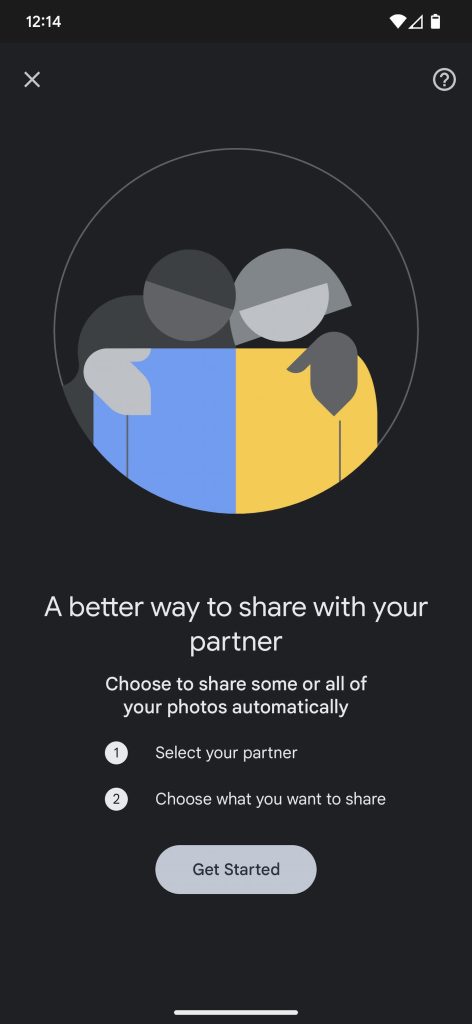

Automatic Sharing in Photos

In iOS 16, the Photos app can automatically share photos of your family in a shared album you all have access to. You can choose to share all photos taken after a certain date or all photos that include specific people. There is even a button in the Camera app that puts photos straight into the shared album. Everyone in the album has equal access to add, edit, and delete photos, and everything gets shared with everyone.

Google Photos has been doing this for at least two years. Partner Sharing, Google Photos' equivalent, lets you automatically share photos that include a specific person. It has all the same features as Apple's version, except it isn't limited to Apple devices. Since Google Photos is web-based, you can upload photos from a DSLR on any computer and have them shared as well.

Beyond this, Google also has automatic albums that you can share. These automatically add every photo you take of a certain person or pet to an album that can be shared with a link or directly through the app. You can even enable collaboration so others can add their own photos to it. An entire group of friends can set it up to automatically add every photo of each other to the album, and everyone has access to it.

Google's feature has been around a bit longer and is still a bit more powerful than Apple's. Luckily for iOS users, you can download the Google Photos app on your iPhone to get these features now instead of waiting for iOS 16.

Smarter dictation with punctuation and user interaction

In iOS 16, dictation lets you edit and interact with text while you're dictating it. You can tap to select and remove things, or just tell the phone what you want to do, and it'll do it. It also automatically fills in punctuation.

These dictation features are an almost direct clone of Google Assistant voice typing on the Pixel 6 and 6 Pro. Assistant voice typing offers the same ability to interact with text as you dictate, voice control over what you've already typed, and proper punctuation.

From my use of both iOS 16 dictation and Assistant voice typing, Google still has a major lead with this feature. iOS 16 likes to put punctuation where it shouldn't and still struggles to understand me correctly. This is only the first iOS 16 beta, though, so the feature will likely improve.

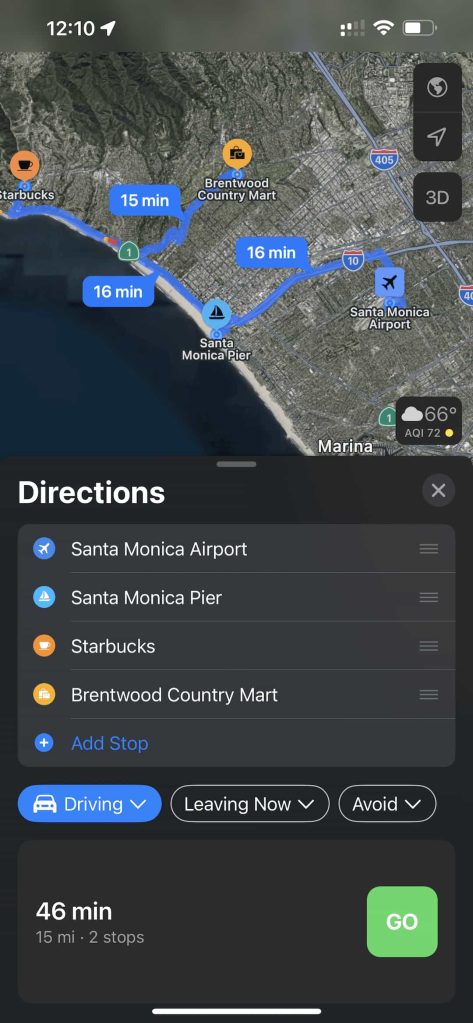

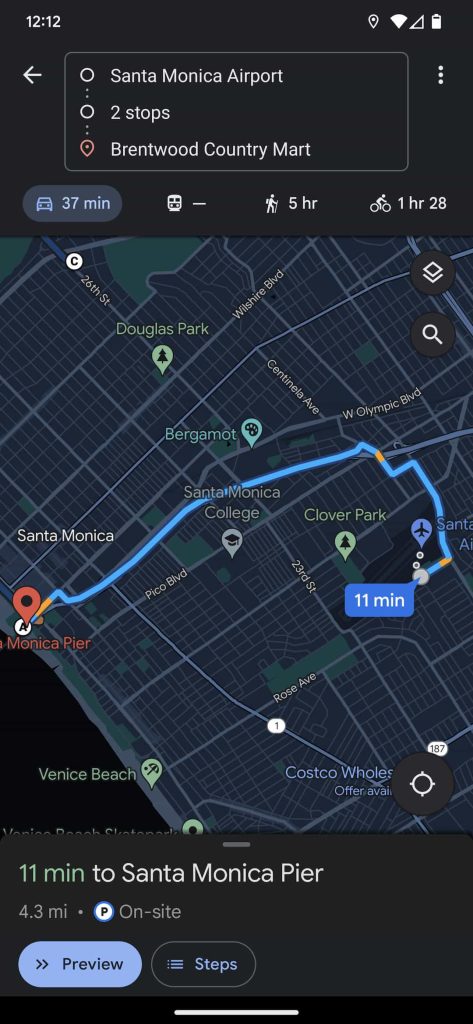

Multiple stops in Maps

Apple Maps now supports adding up to 15 stops along a route. This seemingly simple feature has been in Google Maps for years at this point. The only real difference is the limit: Apple Maps supports up to 15 stops, while Google Maps allows 10 at most. If you want multiple stops on iOS now, you can always download the Google Maps app on your iPhone.

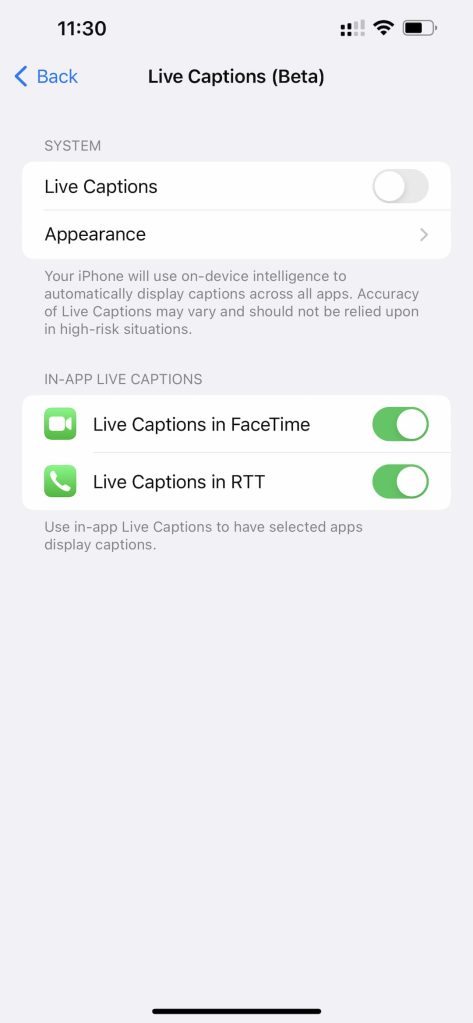

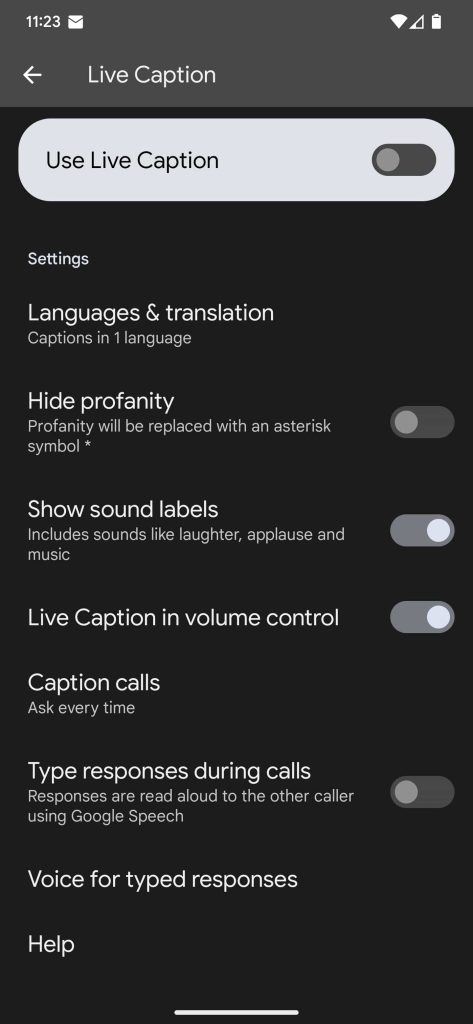

Live Captions

Live Caption was introduced at Google I/O in 2019, using Google's speech recognition technology to provide captions for content on phones that didn't already have closed captions. It worked in real time and could caption any audio except phone calls. In March of this year, Google announced support for phone calls as well.

iOS 16 brings this exact same feature. It captions audio in real time across any app, including in calls and FaceTime. The UI even looks identical. After a quick test, though, it does seem to be quite a bit slower than Google’s alternative and not as accurate.


Author: Max Weinbach

Source: 9TO5Google