McAfee has introduced Project Mockingbird as a way to detect AI-generated deepfakes that use audio to scam consumers with fake news and other schemes.

In a bid to combat the escalating threat posed by AI-generated scams, McAfee created its AI-powered Deepfake Audio Detection technology, dubbed Project Mockingbird.

Unveiled at CES 2024, the big tech trade show in Las Vegas, this innovative technology aims to shield consumers from cybercriminals wielding manipulated, AI-generated audio to perpetrate scams and manipulate public perception.

In these scams, fraudsters start a video with a legitimate speaker, such as a well-known newscaster, and then splice in fake material in which the speaker utters words they never actually said. It’s a deepfake involving both audio and video, said Steve Grobman, CTO of McAfee, in an interview with VentureBeat.

“McAfee has been all about protecting consumers from the threats that impact their digital lives. We’ve done that forever, traditionally, around detecting malware and preventing people from going to dangerous websites,” Grobman said. “Clearly, with generative AI, we’re starting to see a very rapid pivot to cybercriminals, bad actors, using generative AI to build a wide range of scams.”

He added, “As we move forward into the election cycle, we fully expect there to be use of generative AI in a number of forms for disinformation, as well as legitimate political campaign content generation. So, because of that, over the last couple of years, McAfee has really increased our investment in how we make sure that we have the right technology that will be able to go into our various products and backend technologies that can detect these capabilities that will then be able to be used by our customers to make more informed decisions on whether a video is authentic, whether it’s something they want to trust, whether it’s something that they need to be more cautious around.”

Combined with other hacked material, deepfakes could easily fool people. For instance, Insomniac Games, the maker of Spider-Man 2, was hacked and had its private data leaked onto the web. Attackers could slip deepfake content in among the genuinely leaked material, and it would be hard to tell apart from the real data stolen from the victim company.

“What we’re going to be announcing at CES is really our first public sets of demonstrations of some of our newer technologies that we built,” Grobman said. “We’re working across all domains. So we’re working on technology for image detection, video detection, text detection. One that we’ve put a lot of investment into recently is deep fake audio. And one of the reasons is if you think about an adversary creating fake content, there’s a lot of optionality to use all sorts of video that isn’t necessarily the person that the audio is coming from. There’s the classic deepfake, where you have somebody talking, and the video and audio are synchronized. But there’s a lot of opportunity to have the audio track on top of the B-roll or on top of other video when there’s other video in the picture that is not the narrator.”

Project Mockingbird

Project Mockingbird detects whether audio was truly spoken by the person it appears to come from, based on analyzing the words that are spoken. It’s a way to combat the concerning trend of using generative AI to create convincing deepfakes.

Deepfakes placing celebrities in porn videos have been a problem for a while, but most of them are confined to deepfake video sites, so consumers can avoid them relatively easily. Deepfake audio tricks are more insidious, Grobman said, and plenty of these scams can be found sitting in social media posts. He is particularly concerned about their rise in light of the 2024 U.S. presidential election.

Advances in AI have made it easier for cybercriminals to create deceptive content, leading to a rise in scams that exploit manipulated audio and video. These range from cloned voices impersonating loved ones who ask for money to authentic videos doctored with altered audio, making it hard for consumers to tell what is authentic online.

Anticipating consumers’ need to distinguish real from manipulated content, McAfee Labs developed an AI model for detecting AI-generated audio, which the company describes as industry-leading. Project Mockingbird combines AI-powered contextual, behavioral, and categorical detection models, and McAfee says it is more than 90% accurate at identifying maliciously altered audio in videos.
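McAfee has not published how its three detection models are combined or how the accuracy figure was measured. As a purely illustrative sketch, assuming each detector outputs a probability that a clip is AI-generated and that the outputs are blended with a weighted average, the idea could look like the code below. The `DetectorScores` class, the `blended_score` and `accuracy` functions, and the weights are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical: each detector returns a probability in [0, 1] that a clip is
# AI-generated. The field names mirror the model categories McAfee describes;
# the weights are illustrative assumptions, not McAfee's values.
@dataclass
class DetectorScores:
    contextual: float
    behavioral: float
    categorical: float

WEIGHTS = {"contextual": 0.4, "behavioral": 0.35, "categorical": 0.25}

def blended_score(scores: DetectorScores) -> float:
    """Weighted average of the three detector probabilities."""
    total = (
        WEIGHTS["contextual"] * scores.contextual
        + WEIGHTS["behavioral"] * scores.behavioral
        + WEIGHTS["categorical"] * scores.categorical
    )
    return total / sum(WEIGHTS.values())

def accuracy(predictions: list[bool], labels: list[bool]) -> float:
    """Fraction of clips classified correctly, the metric behind an accuracy percentage."""
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)
```

For example, detector scores of 0.9, 0.8, and 0.6 blend to about 0.79. A weighted average is only one simple way to combine models; a production system might instead train a second model on the detectors’ outputs.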

Grobman described the technology as significant, likening it to a weather forecast that helps people make informed decisions about their digital activity. He said McAfee’s new AI detection capabilities help users understand their digital landscape and accurately gauge the authenticity of online content.

“The use cases for this AI detection technology are far-ranging and will prove invaluable to consumers amidst a rise in AI-generated scams and disinformation. With McAfee’s deepfake audio detection capabilities, we’ll be putting the power of knowing what is real or fake directly into the hands of consumers,” Grobman said. “We’ll help consumers avoid ‘cheapfake’ scams where a cloned celebrity is claiming a new limited-time giveaway, and also make sure consumers know instantaneously when watching a video about a presidential candidate, whether it’s real or AI-generated for malicious purposes. This takes protection in the age of AI to a whole new level. We aim to give users the clarity and confidence to navigate the nuances in our new AI-driven world, to protect their online privacy and identity, and well-being.”

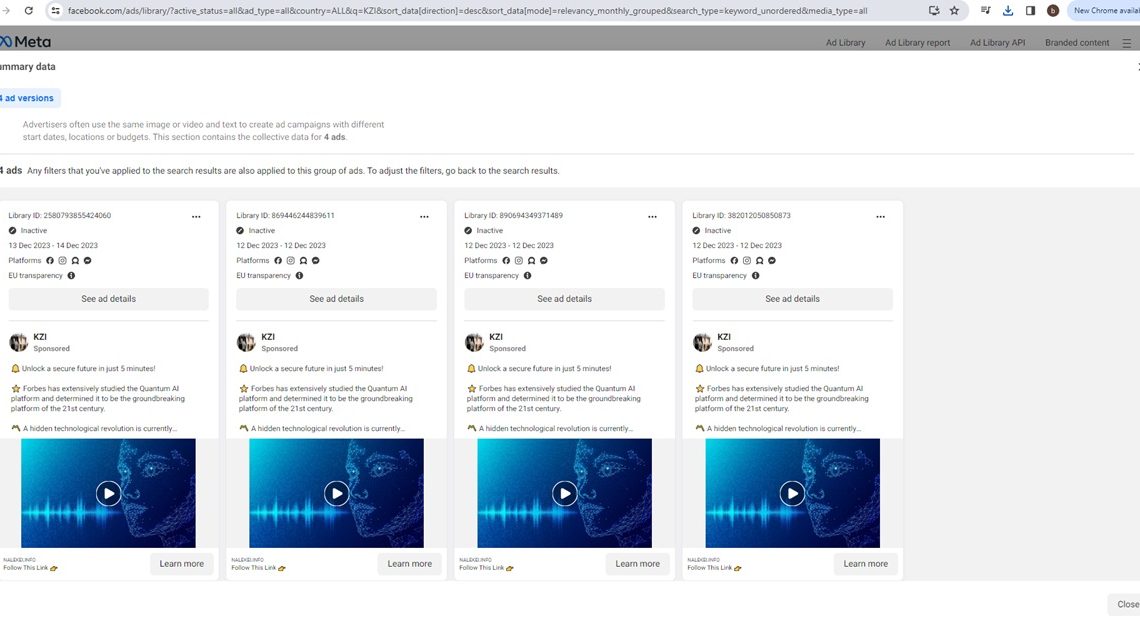

In terms of the cybercrime ecosystem, Grobman said McAfee’s threat research team has found criminals using legitimate accounts registered with ad networks, on platforms such as Meta’s.

McAfee found such deepfakes being posted through the ad platforms of social networks such as Facebook, Instagram, Threads, and Messenger, among others. In one case, a legitimate church’s account was hijacked, and the bad actors used it to post deepfake scam content on social media.

“The target is often the consumer. The way that the bad actors are able to get to them is through some of the soft-target infrastructure of other organizations,” Grobman said. “We see this also on some of what’s being hosted once people fall for these deep fakes.”

In one crypto scam video, the bad actors want the user to download an app or register on a website.

“It’s putting all these pieces together, that creates a perfect storm,” he said.

He said the cybercriminals are using the ad accounts tied to a church’s or a business’s social media account, and that is how they are spreading the fake content.

Grobman called one example a “cheap fake”: a legitimate video of a news broadcast in which some of the audio is real but some has been replaced with deepfake audio to set up a crypto scam. The video, from a credible source (in this case CNBC), starts talking about a new investment platform and is then hijacked to steer users to a fake crypto exchange.

As McAfee’s technology analyzes the audio, it determines where the deepfake audio begins and flags the fake portions.

“At the beginning, it was legitimate audio and video, then the graph shows where the fake portions are,” Grobman said.
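The graph Grobman describes implies that the audio is scored piece by piece rather than only as a whole. A minimal sketch, assuming a hypothetical detector has already produced one fake-probability score per fixed-length window of audio, could merge consecutive high-scoring windows into flagged time ranges. The function name, the one-second window, and the 0.5 threshold are illustrative assumptions, not McAfee’s method.

```python
def flag_fake_segments(
    scores: list[float], window_s: float = 1.0, threshold: float = 0.5
) -> list[tuple[float, float]]:
    """Return (start_s, end_s) ranges where consecutive windows score >= threshold.

    scores[i] is a hypothetical detector's probability that window i
    (covering window_s seconds of audio) is AI-generated.
    """
    segments: list[tuple[float, float]] = []
    start = None  # index of the window where the current suspicious run began
    for i, score in enumerate(scores):
        if score >= threshold and start is None:
            start = i  # a suspicious run begins
        elif score < threshold and start is not None:
            segments.append((start * window_s, i * window_s))
            start = None  # the run has ended
    if start is not None:  # the run extends to the end of the clip
        segments.append((start * window_s, len(scores) * window_s))
    return segments
```

For example, window scores of `[0.1, 0.2, 0.15, 0.85, 0.9, 0.7, 0.3, 0.95]` yield `[(3.0, 6.0), (7.0, 8.0)]`: the clip starts out legitimate, and the first suspected fake audio begins at 3 seconds.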

Grobman said the deepfake detection technology will be integrated into a product to protect users, who are already concerned about being exposed to deepfakes. He noted that it is hard to keep deepfake audio from reaching users on social platforms they presume to be safe.

The applications of this technology extend far and wide, equipping consumers with the means to navigate a landscape rife with deepfake-driven cyberbullying, misinformation, and fraudulent schemes. By providing users with clarity and confidence in discerning between genuine and manipulated content, McAfee aims to fortify online privacy, identity, and overall well-being.

At CES 2024, McAfee showcased the first public demonstrations of Project Mockingbird, inviting attendees to experience the groundbreaking technology firsthand. This unveiling stands as a testament to McAfee’s commitment to developing a diverse portfolio of AI models, catering to various use cases and platforms to safeguard consumers’ digital lives comprehensively.

Explaining the name Project Mockingbird, McAfee drew a parallel with mockingbirds, which are known for mimicking the songs of other birds. Just as these birds mimic for reasons not yet fully understood, cybercriminals use AI to mimic voices and deceive consumers for fraudulent purposes.

Survey about deepfake awareness

Concern about deepfake technology is growing: McAfee’s December 2023 survey of Americans found that nearly 68% were more concerned about deepfakes than a year earlier, and 33% reported having encountered or known of a deepfake scam.

The top concerns about how deepfakes could be used included influencing elections (52%), cyberbullying (44%), undermining public trust in the media (48%), impersonating public figures (49%), creating fake pornographic content (37%), distorting historical facts (43%), and falling prey to scams that would allow cybercrooks to obtain payment or personal information (16%).

“There’s a lot of concern that people will get exposed to deep fake content in the upcoming election cycle. And I think one of the things that is often mischaracterized is what artificial intelligence is all about. And it’s often represented as artificial intelligence about having computers do the things that have traditionally been done by humans. But in many ways, the AI of 2024 is going to be about AI doing things better than humans can do,” Grobman said.

The question, he said, is how we will be able to tell the difference between a real Joe Biden and a deepfake Joe Biden, or between a real and a deepfake Donald Trump.

“We build advanced AI that is able to identify micro characteristics that might be even imperceptible to humans,” he said. “During the political season, it’s not necessarily illegal or even immoral to use generative AI to build a campaign ad. But what we do think is a piece of information consumers would like is to understand that it was built with generative AI as opposed to being based on real audio or video.”

In an MSNBC example, an NBC News debate moderator talks about the Republican presidential candidates. At first, the video and audio are legitimate. But then the clip veers into a fake version of his voice casting aspersions on all the candidates and praising Donald Trump. In this case, the deepfake material was used to create something crass and funny.

“They switch from (the moderator’s) real voice to his fake voice as he starts describing the paradoxical view of the candidates,” Grobman said. “If you take the overall assessment of this audio with our model, you can see there are clearly some areas that the model has high confidence that there are fake portions of the audio track. This is a fairly benign example.”

But the same technique could easily be used to show a candidate saying something that seriously damages their reputation, steering viewers to the wrong conclusion.

How the detection works

Grobman said McAfee takes raw data from a video and feeds it into a classification model, whose goal is to determine which of a predefined set of categories the input belongs to. McAfee has used this kind of AI for a decade to detect malware and to identify the content of websites, such as whether a site is dangerous because it is designed for identity theft. Instead of feeding a file into the model, McAfee feeds in the audio or video and screens it for dangerous characteristics. The model then predicts whether the content is AI-generated, based on what McAfee has taught it about telling fake content from real.
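McAfee has not disclosed its model architecture or the audio characteristics it uses. To illustrate the general idea of a binary classification model that learns from labeled real and fake examples, here is a minimal logistic-regression sketch in plain Python. Logistic regression is a stand-in chosen for simplicity, and the two features and the toy training data are hypothetical.

```python
import math

def sigmoid(z: float) -> float:
    """Squash a raw score into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(
    features: list[list[float]], labels: list[int], lr: float = 0.5, epochs: int = 500
) -> tuple[list[float], float]:
    """Fit weights and bias with batch gradient descent on log loss.

    labels: 1 = AI-generated, 0 = real.
    """
    n = len(features[0])
    m = len(features)
    weights = [0.0] * n
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n
        grad_b = 0.0
        for x, y in zip(features, labels):
            # Error for this example: predicted probability minus true label.
            error = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for j in range(n):
                grad_w[j] += error * x[j]
            grad_b += error
        # Step against the average gradient.
        weights = [w - lr * g / m for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / m
    return weights, bias

def predict_fake_probability(weights: list[float], bias: float, x: list[float]) -> float:
    """Probability that a clip with feature vector x is AI-generated."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

# Hypothetical toy data: two made-up audio features per clip, scaled to [0, 1].
train_x = [[0.2, 0.8], [0.3, 0.7], [0.25, 0.9], [0.8, 0.2], [0.7, 0.3], [0.9, 0.25]]
train_y = [0, 0, 0, 1, 1, 1]
w, b = train_logistic(train_x, train_y)
```

After training, a clip with features `[0.85, 0.2]` scores above 0.5 (likely fake) and one with `[0.2, 0.85]` scores below 0.5 (likely real). The same train-then-predict pattern applies whether the input is a file, a website, or an audio track; only the features change.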

Grobman said the company has deployed AI on mobile phones to determine whether text messages come from legitimate sources, and it has focused on web protection for mobile and PC platforms over the years. Now people need to be educated about how easy it is to create deepfake audio and imagery.

“We need consumers to have a healthy skepticism that if something doesn’t look right, that there is at least the possibility that it’s AI generated or not real,” he said. “And then having technology from trusted partners like McAfee to help them aid in identifying and catching those things that might not be so obvious will enable people to live their digital lives safely.”

Project Mockingbird has moved beyond experimentation, and McAfee is turning it into core technology building blocks that will be used across its product line.


Author: Dean Takahashi

Source: Venturebeat

Reviewed By: Editorial Team