Apple didn’t say a word about augmented and virtual reality at the WWDC 2022 opening keynote (as if it were trying to hide something), but iOS 16 brings a lot of improvements to ARKit and related technologies. One of these technologies is “RoomPlan,” a new API that uses LiDAR scanning to quickly create 3D floor plans.

Apps capable of creating 3D floor plans using the iPhone and iPad camera aren’t exactly new, but they aren’t exactly accurate either. When Apple introduced the LiDAR scanner with the 2020 iPad Pro and iPhone 12 Pro, it became possible to measure the dimensions of objects much more accurately.

For those unfamiliar, LiDAR is a technology that emits laser pulses and measures the time it takes for the light to return to the sensor in order to precisely calculate the distance between the device and nearby objects. As such, a LiDAR scanner is able to detect the dimensions and even the shape of an object or indoor environment.
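To make the time-of-flight idea concrete, here is a minimal sketch of the underlying arithmetic. It is a simplified illustration only, not a description of how Apple's sensor or the RoomPlan API works internally, and the function name `distance_from_round_trip` is made up for this example.

```python
# Speed of light in a vacuum, in metres per second (exact by definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Return the one-way distance in metres for a measured round-trip time."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels to the object and back, so halve the total path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2


# A pulse that returns after about 20 nanoseconds hit something roughly 3 m away.
print(f"{distance_from_round_trip(20e-9):.2f} m")  # prints "3.00 m"
```

The tiny time scale involved (nanoseconds for a typical room) hints at why dedicated hardware is needed for this kind of measurement.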

Now with iOS 16, Apple has combined LiDAR with iPhone and iPad cameras in the new RoomPlan API to let users create 3D floor plans in seconds. According to Apple, the API can be useful for real estate, architecture, and interior design apps, since it is precise and easy to use.

> Help customers make more informed decisions by using RoomPlan to create a floor plan of a room directly in your apps, such as real estate, e-commerce, or hospitality apps. These scans can also be the first step in architecture and interior design workflows to help streamline conceptual exploration and planning.

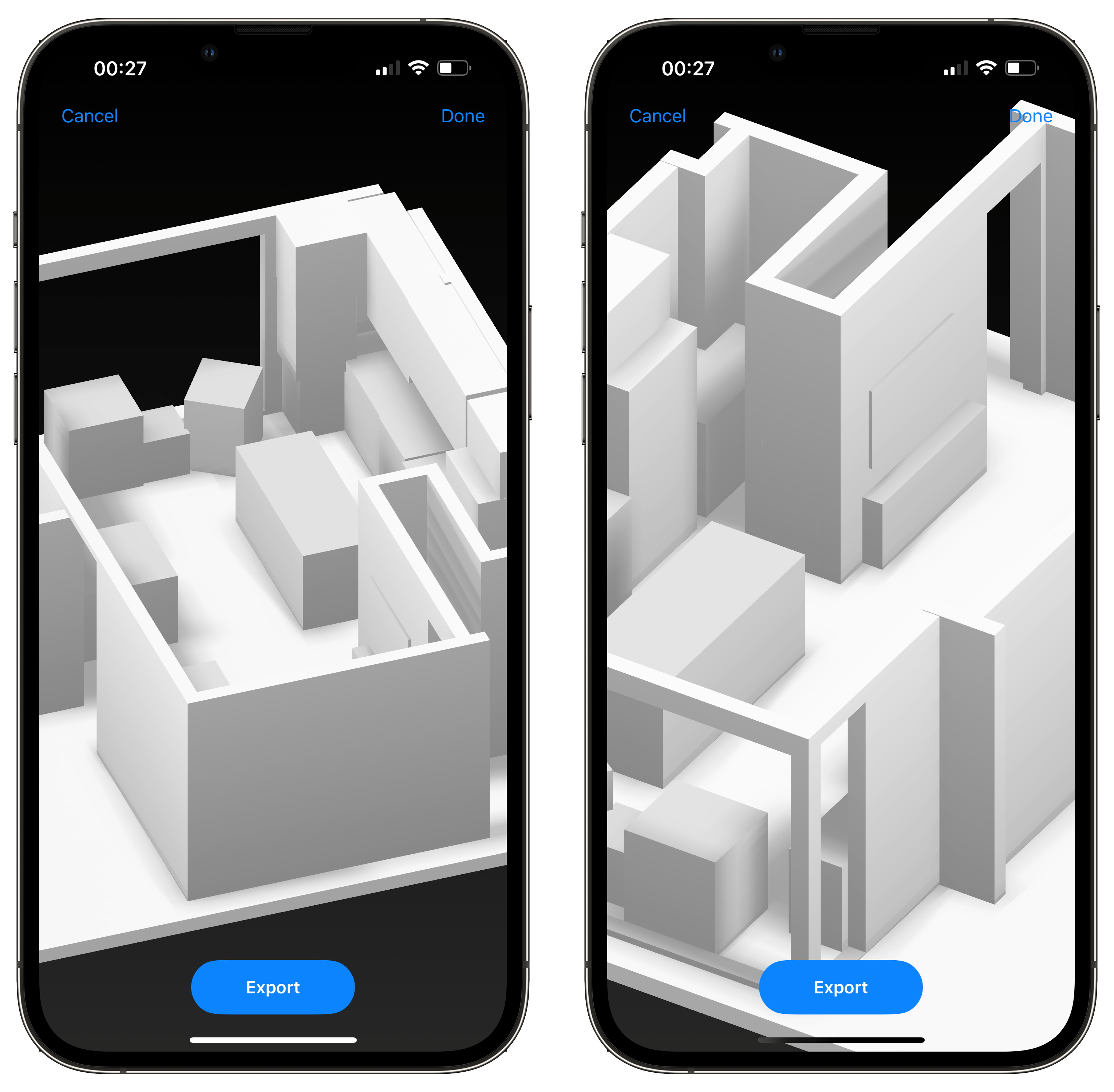

RoomPlan in action

Of course, since RoomPlan is an API, you won’t find it as a built-in feature on a device running iOS 16. Instead, developers need to create or update their apps to support it.

I was able to try out the RoomPlan API on my iPhone 13 Pro Max using sample code provided by Apple. And I must say, it works quite well. In just a few seconds, I was able to create a 3D floor plan of my living room simply by pointing the iPhone at the walls. All you have to do is open a compatible app and scan your environment.

The API can even recognize some objects, although the capture shows only blocks to represent them since the focus is on the floor plan.

What impressed me most, besides the precision, is how fast the scanning works. The API provides a great animation and a real-time preview that shows the scanning progress. The 3D floor plan can be exported as a USDZ file that is compatible with popular tools such as Cinema 4D, Shapr3D, and AutoCAD.

Is it really intended for iPhone users?

Apple introduced this API for iPhone and iPad devices, but something inside me says that this API has a higher purpose. It’s almost as if Apple is working on a LiDAR-based device that needs to detect the environment around the user in real time.

With iOS 16, Apple also added 4K HDR video support for ARKit apps for the first time, and it updated the Nearby Interaction API to integrate the U1 chip with AR. As the company has reportedly been working on its own mixed reality headset, perhaps these new APIs were created with the headset in mind.

More details about RoomPlan and what’s new in ARKit can be found on Apple’s website. iOS 16 will be available this fall for all users.

Author: Filipe Espósito

Source: 9TO5Google