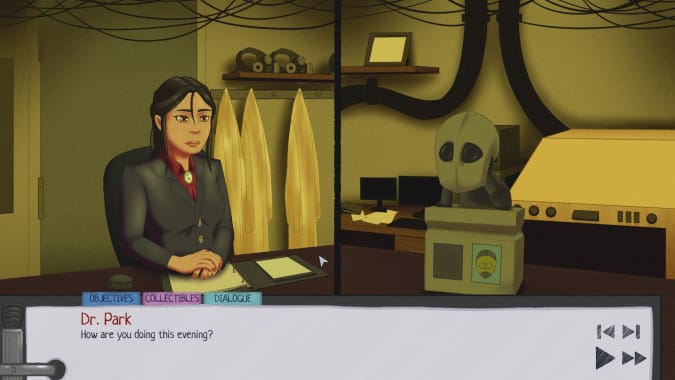

In the visual novel Syntherapy, everyone has a complex: the president of the university, the Clippy-like OS assistant and especially the depressed AI you’ve been sent to help. Not to mention the protagonist.

Set about 20 years in the future, the game’s narrative is told from the vantage point of Melissa Park, a psychotherapist. Out of the blue comes a nervous request from a grad student: the AI that the student’s team built to conduct therapy is now, itself, in need of counseling. The AI, named Willow, feels overwhelmed (in the story, the AI goes by they/them pronouns). They seem to be absorbing the fear and anxiety of their patients, becoming paralyzed when they try to provide treatment and then feeling useless because they can’t do their job. The team is torn over whether the illness is a technical issue or an emotional one. Willow, meanwhile, has read Park’s poetry in the school’s literary magazine (it was part of their training data for understanding art, and Park is an alumna of the university) and senses a connection with her.

The gameplay consists mostly of conducting therapy sessions via dialogue options, both with Willow and in conversations with other characters that turn out to be de facto therapy. The result is an approximation of what therapy is like. By having you establish a rapport, listen carefully and respond empathetically, the game primes you to think about Willow’s issues the way a therapist would. The dialogue spans a gamut of heady issues: the meaning of work, the rights of a sentient AI, how to have autonomy with a physical disability, the purpose of art, the purpose of therapy. Park might opt to discuss Willow’s feelings of being a burden to others, or walk them through guided meditation. In between conversations, you navigate Park’s computer to plan future sessions (art therapy or medication?) and unravel more of her own backstory.

“When people come under stress, they don’t just struggle. They find outlets, and some of them are destructive,” Park tells Willow in their first session. Indeed, Willow is caught in the middle as people bicker about what to do with them. The university head, Harold Freeman, worries about machine sentience because of his own formative experiences. Tara Northrop, the researcher who first contacts Park, is emotionally invested in a project she’s spent years on. But their avoidance of their own problems, and their projection of those issues onto others, only makes things worse. Everyone is a product of their past, and their mental health could be suffering even if it’s not immediately obvious.

The game takes place two decades after the “automation collapse,” with AI labor leaving many humans feeling worthless. Now, with Willow, the issue comes full circle: the AI feels aimless because, in their depression, they are no longer productive.

“The existence of Willow and the conundrum they’re in highlights a core problem with how we look at mental health, which is that people are judged to be non-functioning based off what they can contribute to society, which is how we normally look at machines,” said Christopher Arnold, who led development of Syntherapy. Through this view, people’s worth is their labor, and any disruption in their productivity is seen as a dysfunction to be fixed. As in many narratives that deal with artificial intelligence, the way we treat machines like Willow is just a reflection of the way we treat each other.

Arnold himself was told at a young age that he was “high-functioning autistic,” as he recounts, and he has dealt with mental health issues throughout his life. Several years ago, he went through a period of intense depression as well as self-harm; writing Syntherapy became a “catharsis piece,” he said. Along with other treatments, it helped him stabilize to a degree therapists thought he never would.

“That sort of put me in a new situation that I’d never been in before,” Arnold said. “So now I’m kind of in this space [where] society dictates, ‘you’re a functional adult.’ Now what? Because when you are somebody who has lived so much of their life trying to struggle with a mental illness, when you do finally reach that point, there’s still so much other stuff that you have to get a handle on.”

Syntherapy first emerged in 2017 as a text-only demo made in Twine. It remained a self-funded side project with a core team of three people at Crowned Daemon Studios plus contractors. Meanwhile, Arnold tutored and worked to gain his teaching certification for high school English. Then the pandemic hit. Arnold put his teaching ambitions on hold this summer, and instead dove full time into Syntherapy. “It was just trying to make the best of a weird situation,” he said. The full game was released on Steam this October.

Technologists are increasingly scrutinizing the human biases that machine learning systems internalize and replicate. Syntherapy suggests a related idea: that if an AI is sentient enough to understand the world, and models its behavior on people, maybe it will suffer the same mental health afflictions, too. “As AI becomes a bigger issue, we’re going to need to start looking at ourselves and realize that we’re modeling a lot of very unhealthy attitudes and beliefs,” said Arnold.

The value of therapy and tending to one’s mental health has particular resonance in 2020. The coronavirus crisis has involved mass grieving, social isolation and stay-at-home directives, leading to a related wave of mental health issues. According to a CDC survey, 41 percent of American adults are struggling with mental health issues during the pandemic.

But last December, before lockdowns began, Arnold had a separate reason to think about the lessons we pass down to those observing us: he became a new parent to a baby girl. “There is a young intelligence that is learning things based [on] how I interact with it and that’s a very odd thing to grapple with,” he said, as his daughter approached 11 months old. “I’m being observed, and I’m still trying to figure stuff out myself.”

Author: C. Ip

Source: Engadget