At I/O 2021 in May, Google unveiled Project Starline as a conferencing booth that can capture and display realistic 3D video. The company today shared more technical details about the effort and how it surpasses 2D video calls.

Project Starline combines Google’s work in 3D imaging, real-time compression, spatial audio, and its “breakthrough light field display system.” The goal is to replicate in-person interactions virtually by “enabl[ing] a sense of depth and realism.” These new details come from a technical paper and talk at SIGGRAPH Asia.

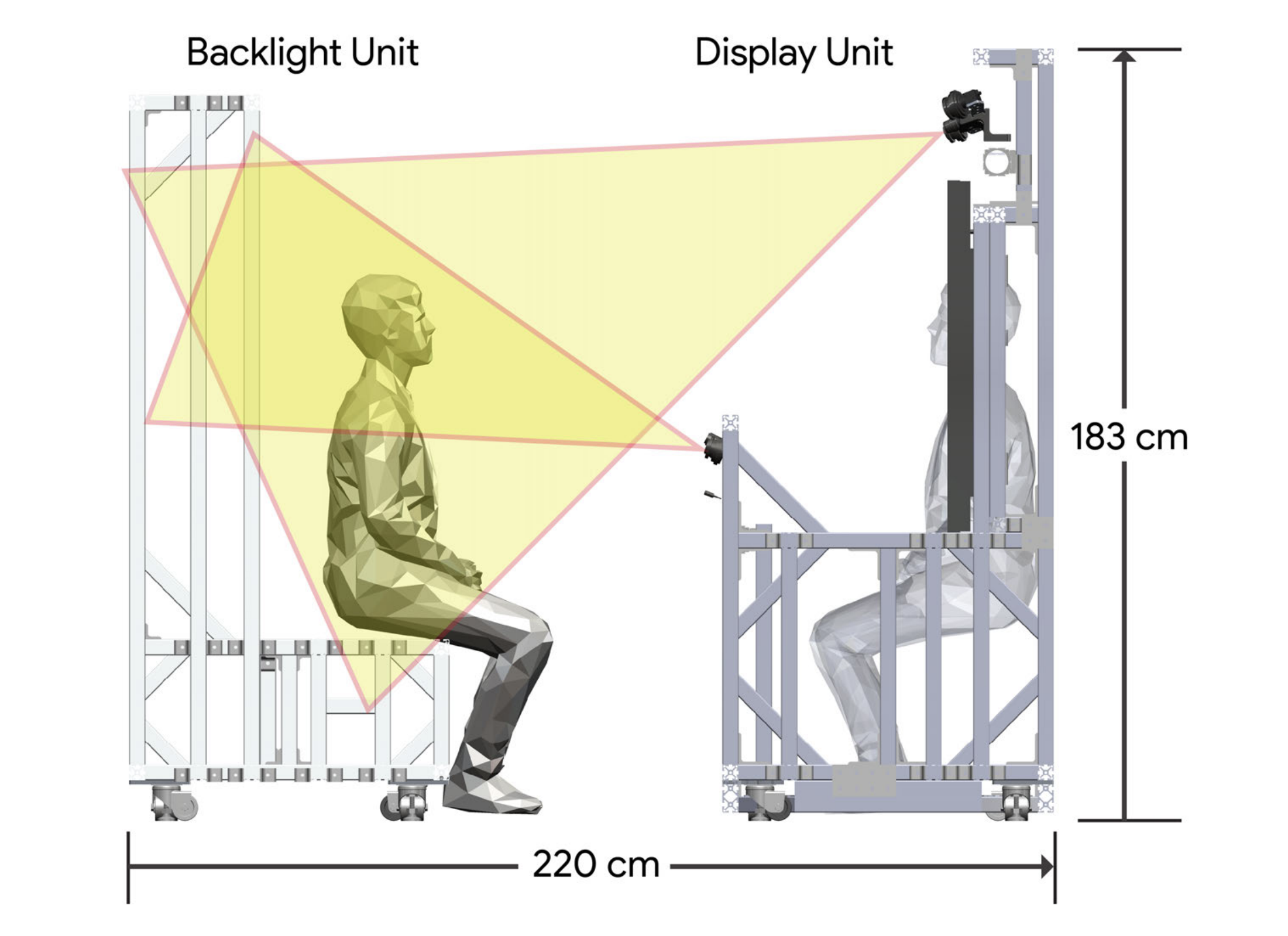

The rig starts with a 65-inch 8K panel that operates at 60Hz. Google says this “screen-based system is motivated in part by the significant weight and discomfort associated with most current AR and VR headsets,” which also have limited angular resolution and field of view.
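To put “angular resolution” in concrete terms, the sketch below estimates how many pixels of a 65-inch 8K panel fall within one degree of a viewer's vision at the center of the screen. It assumes a 16:9 panel 7,680 pixels wide and a hypothetical viewing distance of 1.25 m (the article gives no distance), and it ignores how Starline's light field optics divide pixels between views, so treat the result as an upper bound rather than the system's actual per-eye resolution. The function names are illustrative.

```python
import math


def panel_width_m(diagonal_in: float, aspect_w: int = 16, aspect_h: int = 9) -> float:
    """Physical width of a flat panel, in meters, from its diagonal and aspect ratio."""
    diagonal_m = diagonal_in * 0.0254
    return diagonal_m * aspect_w / math.hypot(aspect_w, aspect_h)


def pixels_per_degree(diagonal_in: float, h_pixels: int, distance_m: float) -> float:
    """Approximate pixels per degree at the screen center for a viewer at distance_m."""
    pitch_m = panel_width_m(diagonal_in) / h_pixels
    # Width on the screen subtended by one degree of visual angle.
    one_degree_m = 2 * distance_m * math.tan(math.radians(0.5))
    return one_degree_m / pitch_m


# Hypothetical viewing distance of 1.25 m; not a figure from Google.
print(f"{pixels_per_degree(65, 7680, 1.25):.1f} pixels per degree")
```

At that assumed distance the raw panel works out to roughly 116 pixels per degree; sitting farther away raises the figure, sitting closer lowers it.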

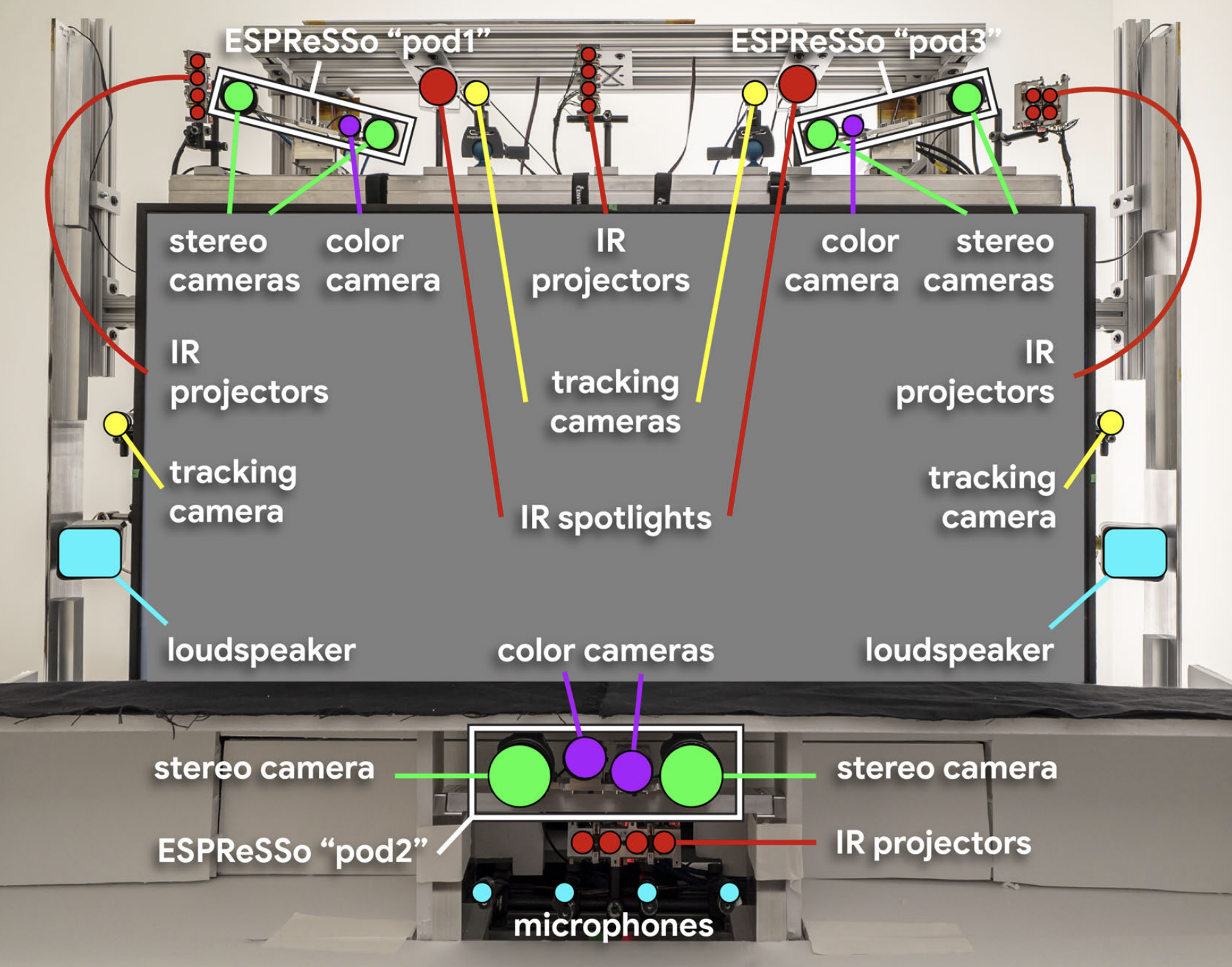

So far, Google employees, including those outside the Starline team, have used the system for “thousands of hours” to “onboard, interview and meet new teammates, pitch ideas to colleagues and engage in one-on-one collaboration.”

Besides the panel, the “display unit” includes speakers, microphones, illuminators, and the computer, as well as several sets of cameras:

- Four high frame-rate (120FPS) face tracking cameras to “estimate the 3D location of the eyes, ears, and mouth within about five millimeters of precision.”

- Three camera pods for 3D video. Each contains two infrared cameras and one color camera, and the bottom pod adds a second color camera zoomed in on the face.

In all, four color and three depth streams are compressed on four NVIDIA GPUs (two Quadro RTX 6000 and two Titan RTX) and transmitted via WebRTC.
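The stream counts follow from the camera pod layout. The sketch below tallies them, assuming (as the numbers imply, though the article does not say so explicitly) that each pod's two infrared cameras act as a stereo pair yielding one depth stream, and that the face tracking cameras are not part of the transmitted video. The `CameraPod` type and the "left"/"right" pod names are hypothetical labels; only the bottom pod is named in the article.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class CameraPod:
    """One capture pod; field and pod names are illustrative, not Google's."""
    name: str
    infrared: int  # IR cameras; assumed to work as one stereo pair per pod
    color: int     # color cameras


# Three pods with two IR cameras and one color camera each, plus an extra
# zoomed-in face camera on the bottom pod, as the article describes.
PODS: List[CameraPod] = [
    CameraPod("left", infrared=2, color=1),
    CameraPod("right", infrared=2, color=1),
    CameraPod("bottom", infrared=2, color=2),
]


def stream_counts(pods: List[CameraPod]) -> Tuple[int, int]:
    """Return (color_streams, depth_streams), assuming one depth map per IR pair."""
    color = sum(p.color for p in pods)
    depth = sum(p.infrared // 2 for p in pods)
    return color, depth


color, depth = stream_counts(PODS)
print(f"{color} color streams, {depth} depth streams")  # 4 color streams, 3 depth streams
```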

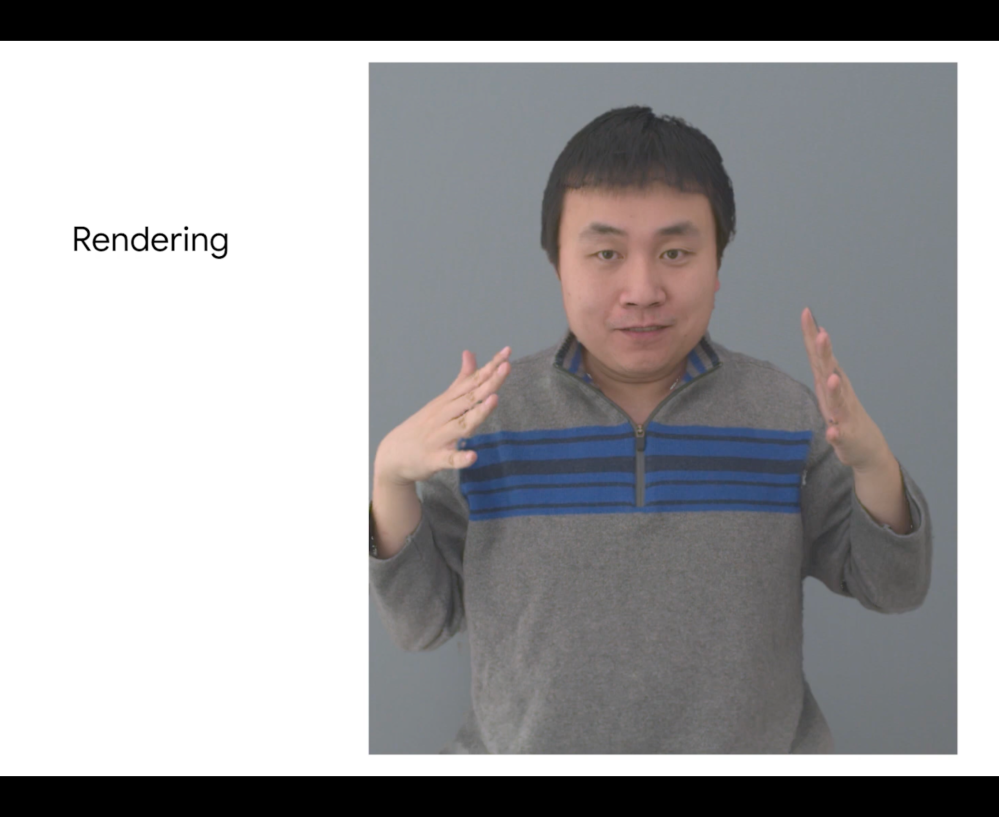

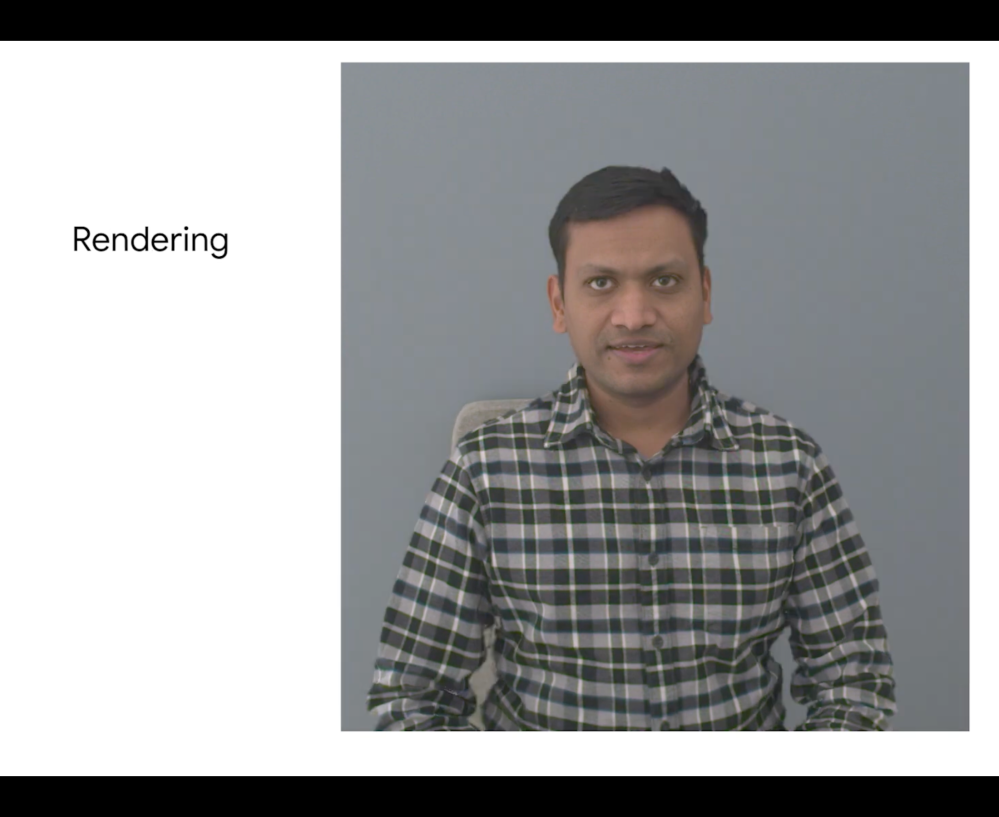

On the receiving side, after decompression, the system re-projects three depth images to the local subject’s eye positions.
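The article does not detail the rendering math. As a rough illustration of what re-projecting a depth image to an eye position involves, the sketch below warps a single depth pixel with a standard pinhole camera model: unproject it using the capture camera's intrinsics, apply a rigid transform into a virtual camera placed at the tracked eye, and project it again. The intrinsics, transform, and function names are assumptions for illustration; a real renderer such as Starline's would process whole images, fuse all three depth views, and handle occlusions, none of which is shown here.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Intrinsics = Tuple[float, float, float, float]  # (fx, fy, cx, cy) in pixels


def unproject(u: float, v: float, depth: float, intr: Intrinsics) -> Vec3:
    """Lift pixel (u, v) with metric depth into a 3D point in the source camera frame."""
    fx, fy, cx, cy = intr
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)


def project(p: Vec3, intr: Intrinsics) -> Tuple[float, float]:
    """Project a 3D point (camera frame, z > 0) onto the image plane."""
    fx, fy, cx, cy = intr
    x, y, z = p
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (fx * x / z + cx, fy * y / z + cy)


def reproject(u: float, v: float, depth: float, src_intr: Intrinsics,
              R: Sequence[Sequence[float]], t: Sequence[float],
              eye_intr: Intrinsics) -> Tuple[float, float]:
    """Warp one depth pixel from a capture camera into a virtual camera at an eye.

    R (3x3, row-major) and t (length 3) map source-camera coordinates
    to eye-camera coordinates: q = R @ p + t.
    """
    p = unproject(u, v, depth, src_intr)
    q = tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))
    return project(q, eye_intr)


identity = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
intr = (500.0, 500.0, 320.0, 240.0)
# Hypothetical eye 10 cm to the right of the capture camera, so t = (-0.1, 0, 0).
print(reproject(420, 240, 2.0, intr, identity, (-0.1, 0.0, 0.0), intr))
```

With these made-up numbers the pixel lands about 25 pixels to the left of where the capture camera saw it, which is the parallax a viewer at that eye position would expect.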

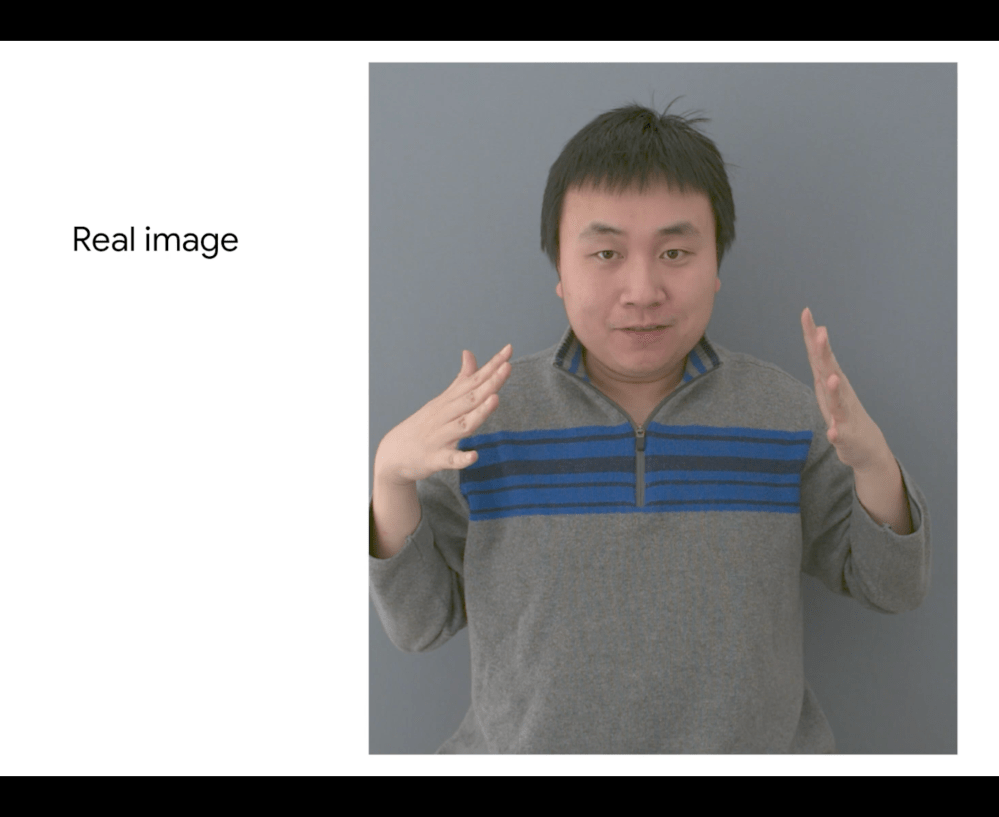

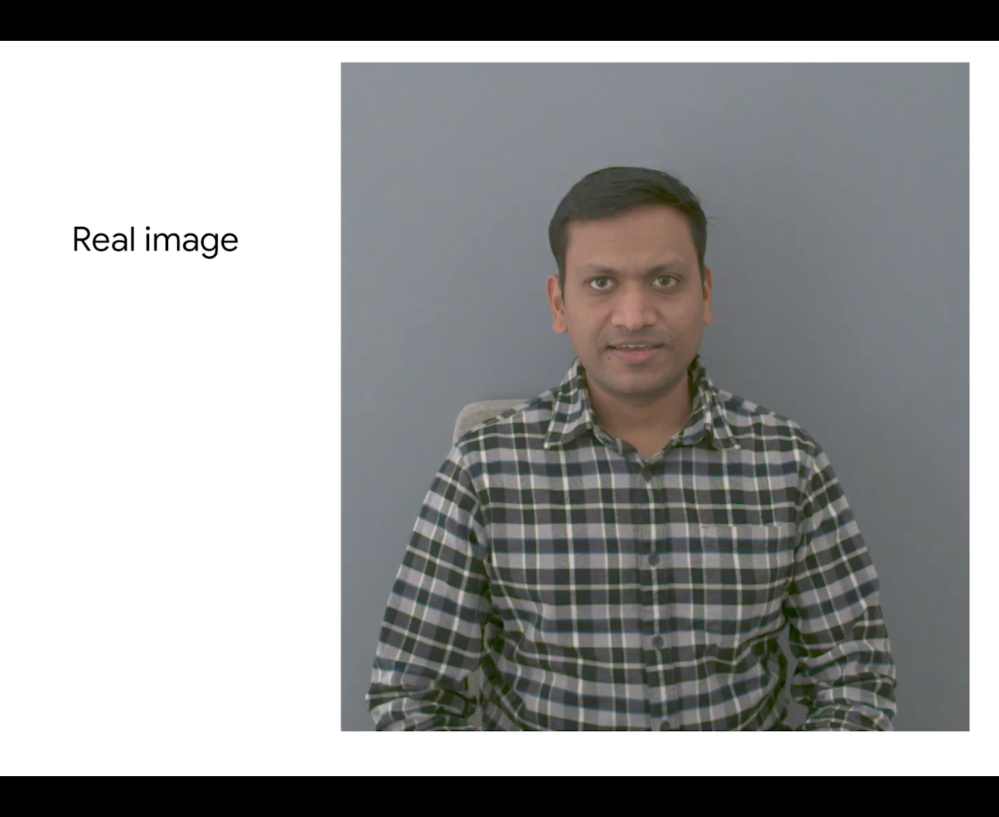

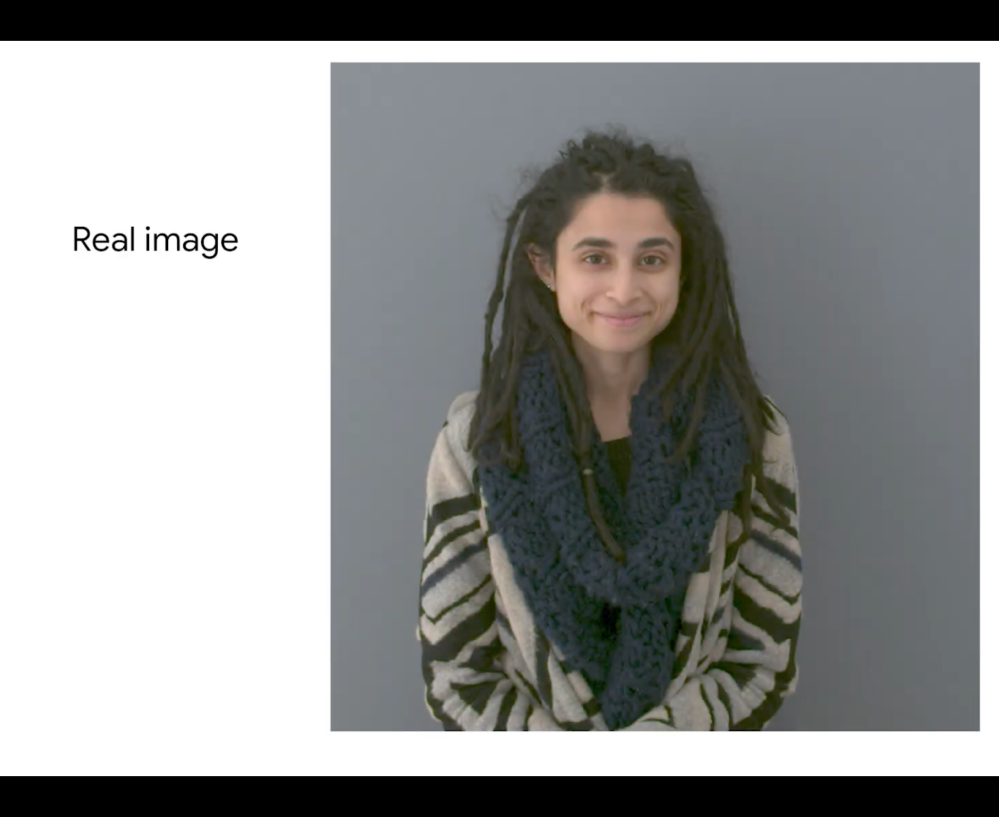

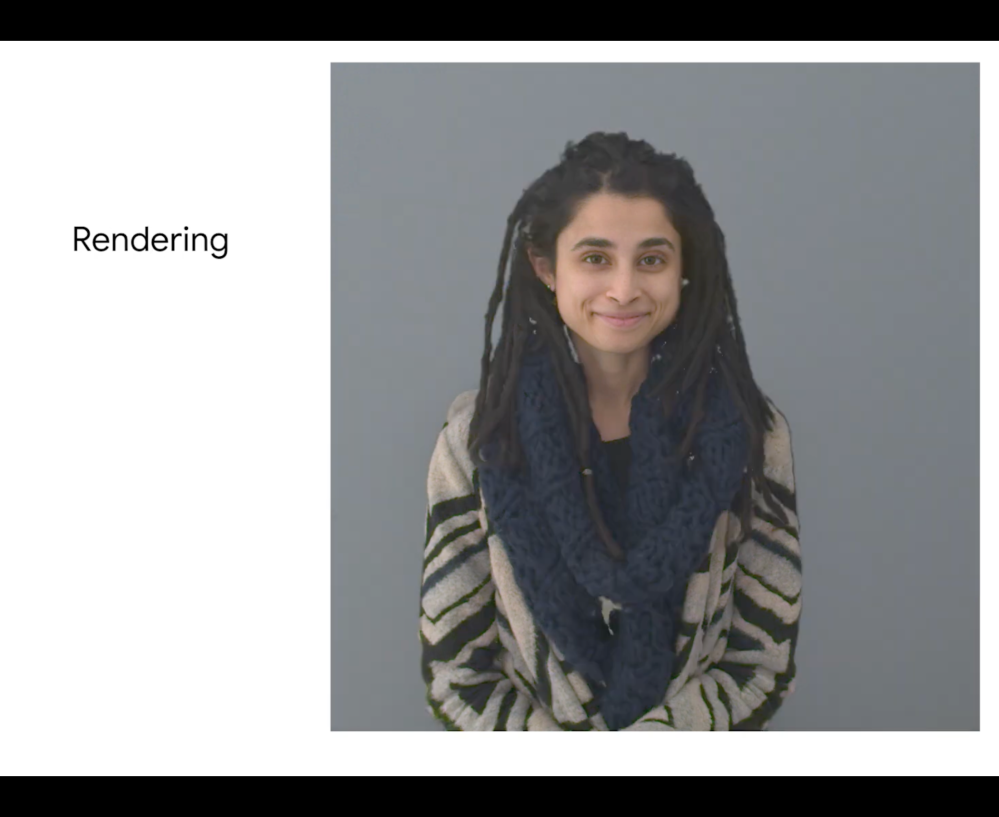

The quality of this image reconstruction can be evaluated by placing cameras in front of the display along the remote user’s line of sight and rendering a reconstruction from the same viewpoints for comparison.
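The article does not say which image metric is used for this comparison. One common way to score a rendered view against a captured reference is peak signal-to-noise ratio (PSNR); the sketch below computes it for two equally sized images given as flat lists of 8-bit values. The `psnr` helper, the choice of metric, and the toy pixel values are assumptions, not Google's published method or data.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], rendered: Sequence[float],
         max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; higher means the render is closer to the capture."""
    if len(reference) != len(rendered) or not reference:
        raise ValueError("images must be non-empty and the same size")
    mse = sum((a - b) ** 2 for a, b in zip(reference, rendered)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


captured = [52, 55, 61, 59, 79, 61, 76, 61]  # toy values from a physical camera
rendered = [54, 55, 60, 59, 77, 62, 76, 60]  # toy values from the reconstruction
print(f"{psnr(captured, rendered):.2f} dB")
```

PSNR is simple but purely per-pixel; perceptual metrics such as SSIM are often reported alongside it.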

Meanwhile, the “backlight unit” serves as both a bench and an infrared backlight, with both components contributing to the lighting.

In terms of results, Google found that people using Project Starline displayed more of the non-verbal behaviors typically associated with in-person interactions than on 2D video calls:

- ~40% more hand gestures

- ~25% more head nods

- ~50% more eyebrow movements

Memory recall also improved by ~30%, while “people focused ~15% more on their meeting partner in an eye-tracking experiment.”

Author: Abner Li

Source: 9TO5Google