Cerebras and G42 said they have broken ground on Condor Galaxy 3, an AI supercomputer that can hit eight exaFLOPs of performance.

That’s a lot of performance, delivered across 58 million AI-optimized cores, said Andrew Feldman, CEO of Sunnyvale, California-based Cerebras, in an interview with VentureBeat. The system is going to G42, a national-scale cloud and generative AI enabler based in Abu Dhabi in the United Arab Emirates, and it will be one of the world’s largest AI supercomputers, Feldman said.

Featuring 64 of Cerebras’ newly announced CS-3 systems, all powered by what Feldman says is the industry’s fastest AI chip, the Wafer-Scale Engine 3 (WSE-3), Condor Galaxy 3 will deliver 8 exaFLOPs of AI compute with 58 million AI-optimized cores.

“We built big, fast AI supercomputers. We began building clusters and the clusters got bigger, and then the cluster got bigger still,” Feldman said. “And then we began training giant models on them.”

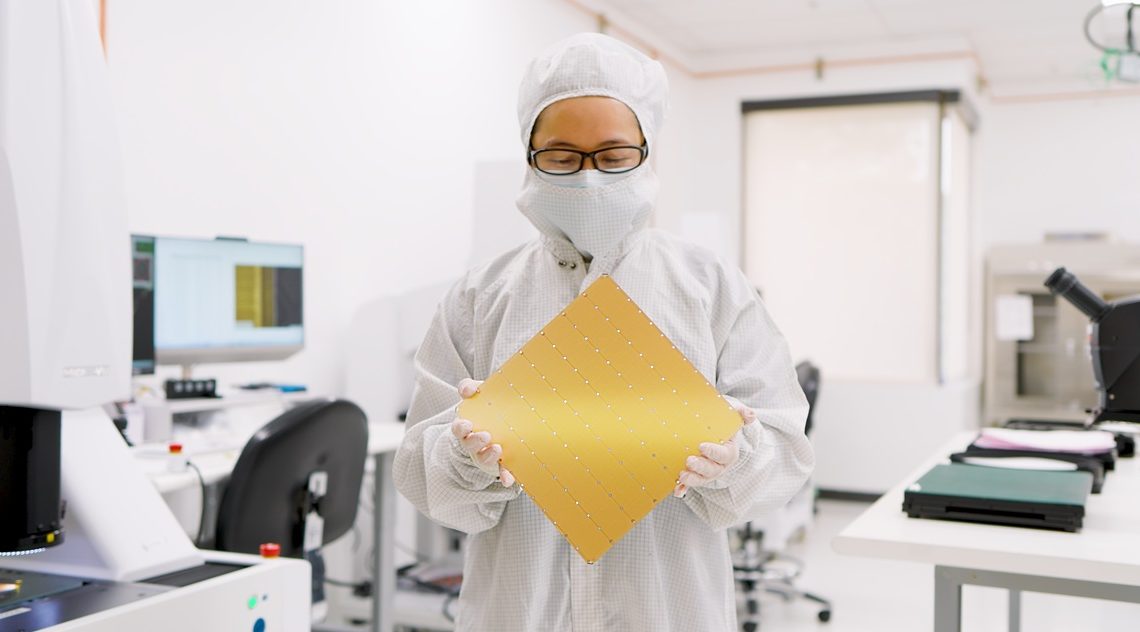

Cerebras takes an unusual approach to the “chip.” The company designs its cores to be small but spreads them across an entire semiconductor wafer, which is normally cut up into hundreds of separate chips. Keeping all the cores on the same substrate speeds up communication between them and makes processing more efficient. That’s how Cerebras fits 900,000 cores on a single chip, or rather a very large wafer.

Located in Dallas, Texas, Condor Galaxy 3 is the third installation in the Condor Galaxy network of AI supercomputers. The Cerebras and G42 strategic partnership has already delivered 8 exaFLOPs of AI supercomputing performance via Condor Galaxy 1 and Condor Galaxy 2, each among the largest AI supercomputers in the world.

Condor Galaxy 3 brings the current total of the Condor Galaxy network to 16 exaFLOPs. By the end of 2024, Condor Galaxy will deliver more than 55 exaFLOPs of AI compute. Overall, Cerebras will build nine AI supercomputers for G42.

“With Condor Galaxy 3, we continue to achieve our joint vision of transforming the worldwide inventory of AI compute through the development of the world’s largest and fastest AI supercomputers,” said Kiril Evtimov, Group CTO of G42, in a statement. “The existing Condor Galaxy network has trained some of the leading open-source models in the industry, with millions of downloads, and we look forward to seeing the next wave of innovation Condor Galaxy supercomputers can enable with twice the performance.”

At the heart of the 64 Cerebras CS-3 systems that make up Condor Galaxy 3 is the new 5-nanometer WSE-3 chip, which delivers twice the performance of its predecessor at the same power and cost. Purpose-built for training the industry’s largest AI models, the four-trillion-transistor WSE-3 delivers 125 petaflops of peak AI performance with 900,000 AI-optimized cores per chip.
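The headline Condor Galaxy figures can be checked against the per-chip specifications. The sketch below multiplies the quoted per-system numbers (125 petaflops and 900,000 cores per CS-3) by the 64 systems in Condor Galaxy 3, then adds the 8 exaFLOPs already delivered by Condor Galaxy 1 and 2. These are the article’s peak figures, not measured throughput, and the constant and function names are illustrative only.

```python
# Back-of-envelope check of the Condor Galaxy figures, using only numbers
# quoted in this article (peak figures, not sustained throughput).
PF_PER_CS3 = 125          # peak AI petaflops per WSE-3 (one WSE-3 per CS-3)
CORES_PER_CS3 = 900_000   # AI-optimized cores per WSE-3
CG1_CG2_EXAFLOPS = 8      # combined capacity of Condor Galaxy 1 and 2


def cluster_totals(systems: int) -> tuple[float, int]:
    """Return (peak exaFLOPs, total cores) for a cluster of CS-3 systems."""
    peak_exaflops = systems * PF_PER_CS3 / 1_000  # 1 exaFLOP = 1,000 petaflops
    total_cores = systems * CORES_PER_CS3
    return peak_exaflops, total_cores


exaflops, cores = cluster_totals(64)  # Condor Galaxy 3: 64 CS-3 systems
network_total = CG1_CG2_EXAFLOPS + exaflops
print(f"CG3: {exaflops:g} exaFLOPs, {cores / 1e6:.1f} million cores")
print(f"Network total: {network_total:g} exaFLOPs")
```

It prints 8 exaFLOPs and 57.6 million cores for Condor Galaxy 3 (the article rounds the core count to 58 million) and a 16-exaFLOP network total, matching the figures quoted above.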

“We are honored that our newly announced CS-3 systems will play a critical role in our pioneering strategic partnership with G42,” said Feldman. “Condor Galaxy 3 through Condor Galaxy 9 will each use 64 of the new CS-3s, expanding the amount of compute we will deliver from 36 exaFLOPs to more than 55 exaFLOPs. This marks a significant milestone in AI computing, providing unparalleled processing power and efficiency.”

Condor Galaxy has trained generative AI models including Jais-30B, Med42, Crystal-Coder-7B and BTLM-3B-8K. Jais-13B and Jais-30B are the best bilingual Arabic models in the world and are now available on Microsoft Azure, while BTLM-3B-8K is the top-ranked 3B model on Hugging Face, offering 7B-class performance in a lighter 3B-parameter model for inference, the company said.

Med42, developed with M42 and Core42, is a leading clinical LLM that was trained on Condor Galaxy 1 over a weekend and surpasses MedPaLM in performance and accuracy.

Condor Galaxy 3 will be available in Q2 2024.

Wafer Scale Engine 3

Cerebras also detailed the chip that powers the supercomputer. It said the introduction of the Wafer Scale Engine 3 doubles down on its existing world record for the fastest AI chip.

The WSE-3 delivers twice the performance of the previous record-holder, the Cerebras WSE-2, at the same power draw and for the same price. Purpose-built for training the industry’s largest AI models, the 5nm, 4-trillion-transistor WSE-3 powers the Cerebras CS-3 AI supercomputer, delivering 125 petaflops of peak AI performance through 900,000 AI-optimized compute cores.

Feldman said the computer will be delivered on 150 pallets.

“We’re announcing our five-nanometer part for our current-generation wafer-scale engine. This is the fastest chip on Earth. It’s a 46,000-square-millimeter part manufactured at TSMC. In the five-nanometer node it is 4 trillion transistors, 900,000 AI cores and 125 petaflops of AI compute,” he said.

With a memory system of up to 1.2 petabytes, the CS-3 is designed to train next-generation frontier models 10 times larger than GPT-4 and Gemini. Models with up to 24 trillion parameters can be stored in a single logical memory space without partitioning or refactoring, which the company says dramatically simplifies the training workflow and accelerates developer productivity. According to Cerebras, training a one-trillion-parameter model on the CS-3 is as straightforward as training a one-billion-parameter model on GPUs.
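The memory and model-size figures imply a simple per-parameter budget. The sketch below divides 1.2 petabytes by 24 trillion parameters, assuming decimal units (1 PB = 10^15 bytes). The 16-bytes-per-parameter figure for mixed-precision training with Adam is a commonly cited rule of thumb that we are assuming here for comparison; it is not a number from Cerebras, and it ignores activation memory.

```python
# Per-parameter memory budget implied by the article's CS-3 figures.
MEMORY_BYTES = 1.2e15  # "up to 1.2 petabytes", assuming decimal units (1 PB = 10**15 B)
MAX_PARAMS = 24e12     # 24 trillion parameters

bytes_per_param = MEMORY_BYTES / MAX_PARAMS
print(f"{bytes_per_param:.0f} bytes of memory per parameter")

# ASSUMPTION, not from the article: a common rule of thumb for mixed-precision
# training with Adam is ~16 bytes/parameter (fp16 weights 2 + fp16 gradients 2
# + fp32 master weights 4 + two fp32 Adam moments 4 + 4), excluding activations.
ADAM_MIXED_PRECISION_BYTES = 2 + 2 + 4 + 4 + 4
print(f"Room for ~{bytes_per_param / ADAM_MIXED_PRECISION_BYTES:.1f}x that training state")
```

Under these assumptions the budget works out to 50 bytes per parameter, roughly three times the assumed per-parameter training state. The article does not describe how Cerebras actually lays out weights and optimizer state in that memory.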

The CS-3 is built for both enterprise and hyperscale needs. Compact four-system configurations can fine-tune 70B models in a day, while at full scale, using 2,048 systems, Llama 70B can be trained from scratch in a single day, which Cerebras describes as an unprecedented feat for generative AI.

The latest Cerebras Software Framework provides native support for PyTorch 2.0 and for recent AI models and techniques such as multimodal models, vision transformers, mixture of experts and diffusion. According to Cerebras, it remains the only platform that provides native hardware acceleration for dynamic and unstructured sparsity, speeding up training by up to eight times.
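Unstructured sparsity means individual weights anywhere in a matrix can be zero, with no block or row pattern, which dense hardware cannot easily exploit. The sketch below is a generic illustration in plain Python, not Cerebras’ hardware mechanism. It prunes a random 64x64 matrix by magnitude (a commonly used pruning technique swapped in for illustration) to 87.5% sparsity, then counts the multiply-accumulates a zero-skipping matrix-vector product performs. The function names are hypothetical.

```python
import random


def prune_by_magnitude(weights, sparsity):
    """Zero the smallest-magnitude `sparsity` fraction of individual weights.

    Unstructured: surviving weights can sit anywhere in the matrix,
    with no block or row pattern imposed.
    """
    positions = [(i, j) for i, row in enumerate(weights) for j in range(len(row))]
    positions.sort(key=lambda p: abs(weights[p[0]][p[1]]))
    to_zero = set(positions[: int(len(positions) * sparsity)])
    return [[0.0 if (i, j) in to_zero else w for j, w in enumerate(row)]
            for i, row in enumerate(weights)]


def matvec_skip_zeros(weights, x):
    """Matrix-vector product that skips zero weights and counts multiply-accumulates."""
    out, macs = [], 0
    for row in weights:
        acc = 0.0
        for w, xi in zip(row, x):
            if w != 0.0:  # only non-zero weights cost any arithmetic
                acc += w * xi
                macs += 1
        out.append(acc)
    return out, macs


rng = random.Random(0)
n = 64
W = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
x = [rng.uniform(-1.0, 1.0) for _ in range(n)]

_, dense_macs = matvec_skip_zeros(W, x)
W_sparse = prune_by_magnitude(W, sparsity=0.875)
_, sparse_macs = matvec_skip_zeros(W_sparse, x)
print(dense_macs, sparse_macs, dense_macs / sparse_macs)
```

At 87.5% sparsity only one-eighth of the multiply-accumulates remain, an 8x reduction in arithmetic. Treating that as the ceiling behind the “up to eight times” training speedup is our assumption; real speedups also depend on memory traffic and on how much sparsity a model tolerates.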

“When we started on this journey eight years ago, everyone said wafer-scale processors were a pipe dream. We could not be more proud to be introducing the third generation of our groundbreaking wafer-scale AI chip,” said Feldman. “WSE-3 is the fastest AI chip in the world, purpose-built for the latest cutting-edge AI work, from mixture of experts to 24 trillion parameter models. We are thrilled to bring WSE-3 and CS-3 to market to help solve today’s biggest AI challenges.”

With every component optimized for AI work, the CS-3 delivers more compute performance in less space and with less power than any other system, according to Cerebras. While GPU power consumption is doubling from one generation to the next, the CS-3 doubles performance while staying within the same power envelope. The company says the CS-3 is also easier to use, requiring 97% less code than GPUs for LLMs, and can train models ranging from 1B to 24T parameters in purely data-parallel mode. A standard implementation of a GPT-3-sized model required just 565 lines of code on Cerebras, which the company calls an industry record.
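“Purely data parallel” refers to a standard training strategy: every worker holds a full copy of the model, processes a different slice of each batch, and the workers average their gradients so they all apply the same update. The toy sketch below illustrates that idea in plain Python with a two-parameter linear model and four simulated replicas. The function names are hypothetical, and this is not Cerebras’ software stack or API.

```python
# Toy data-parallel training: each simulated replica holds the full model (w, b),
# computes gradients on its own shard of the batch, and the gradients are
# averaged (the role an all-reduce plays on real hardware) before one shared update.


def grad_mse(w, b, shard):
    """Gradient of mean squared error for the model y = w*x + b over one shard."""
    n = len(shard)
    dw = sum(2 * (w * x + b - y) * x for x, y in shard) / n
    db = sum(2 * (w * x + b - y) for x, y in shard) / n
    return dw, db


def data_parallel_step(w, b, batch, num_replicas, lr):
    """One SGD step with the batch split round-robin across replicas."""
    shards = [batch[i::num_replicas] for i in range(num_replicas)]
    grads = [grad_mse(w, b, shard) for shard in shards]  # independent per replica
    dw = sum(g[0] for g in grads) / num_replicas         # average across replicas
    db = sum(g[1] for g in grads) / num_replicas
    return w - lr * dw, b - lr * db                      # every replica applies this


# Synthetic data from y = 3x + 1; 16 samples split into 4 equal shards.
batch = [(x, 3.0 * x + 1.0) for x in (i / 10 for i in range(16))]
w, b = 0.0, 0.0
for _ in range(2000):
    w, b = data_parallel_step(w, b, batch, num_replicas=4, lr=0.1)
print(round(w, 2), round(b, 2))
```

The loop converges to w ≈ 3 and b ≈ 1. Because the four shards are the same size, the averaged gradient equals the full-batch gradient, so data parallelism changes where the work happens but not the math of each step. PyTorch’s `DistributedDataParallel` performs the same averaging with an all-reduce across devices.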

“We support models up to 24 trillion parameters,” Feldman said.

Industry partnerships and customer momentum

Cerebras already has a sizeable backlog of orders for CS-3 across enterprise, government and international clouds.

“We have been an early customer of Cerebras solutions from the very beginning, and we have been able to rapidly accelerate our scientific and medical AI research, thanks to the 100x-300x performance improvements delivered by Cerebras wafer-scale technology,” said Rick Stevens, Argonne National Laboratory Associate Laboratory Director for Computing, Environment and Life Sciences, in a statement. “We look forward to seeing what breakthroughs CS-3 will enable with double the performance within the same power envelope.”

Qualcomm deal

This week, Cerebras also announced a new technical and go-to-market (GTM) collaboration with Qualcomm that aims to deliver 10 times the performance in AI inference by applying Cerebras’ inference-aware training on the CS-3.

“Our technology collaboration with Cerebras enables us to offer customers the highest-performance AI training solution combined with the best perf/TCO$ inference solution. In addition, customers can receive fully optimized deployment ready models thereby radically reducing time to ROI as well,” said Rashid Attar, VP of cloud computing at Qualcomm, in a statement.

By using Cerebras’ CS-3 AI accelerators for training and the Qualcomm Cloud AI 100 Ultra for inference, production-grade deployments can realize a 10x improvement in price-performance, the companies said.

“We’re announcing a global partnership with Qualcomm to train models that are optimized for their inference engine. And so this partnership allows us to use a set of techniques that are unique to us and some that are available more broadly to radically reduce the cost of inference,” Feldman said. “So, this is a partnership in which we will be training models such that they can accelerate inference on multiple different strategies.”

Cerebras has more than 400 engineers. “It’s hard to deliver huge amounts of compute on schedule. And I don’t think there’s any other player in the category, any other startup, who’s delivered the amount of compute we have over the past six months. And together with Qualcomm, we’re driving the cost of inference down,” Feldman said.

Author: Dean Takahashi

Source: Venturebeat

Reviewed By: Editorial Team