Google’s biggest consumer-facing augmented reality offerings today are Lens and Live View in Maps. At WWDC 2021, Apple announced it was planning to go after those two features with iOS 15 this fall.

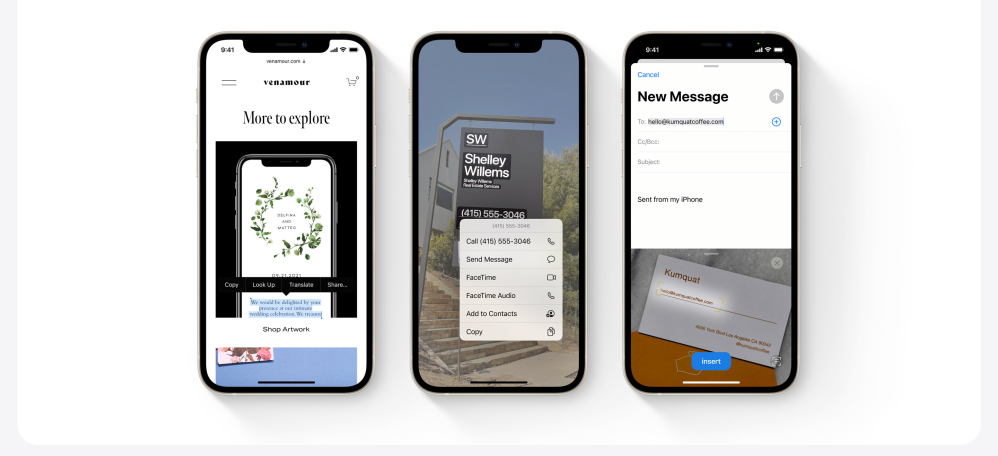

Apple’s Google Lens competitor is primarily accessed in the Camera and Photos apps. When your phone is pointed at text or you’re viewing an existing image with words, an indicator appears in the bottom right to start an analysis.

This is in contrast to the Lens viewfinder being accessible from the Search app, Assistant, and homescreen (both as an app icon and in the Pixel Launcher search bar), as well as Google Camera and other third-party clients. For images you have already captured, Lens is in Google Photos, and it’s also available in Google Images. For Google, it does make sense to surface visual lookup in its search tools, but the approach feels a bit overwhelming.

At a high level, the iOS capabilities fall under the “Intelligence” umbrella. However, Apple is very clearly emphasizing “Live Text” over visual search:

> Let’s say I just finished a meeting with the team, and I want to capture my notes from the whiteboard. I can now just point the camera at the whiteboard and an indicator appears here in the lower right. When I tap it, the text just jumps right out. I can use my normal text selection gestures. Just drag and copy. Now I can switch over to Mail and paste those in and then send this off to Tim.

The default behavior for Apple is copying text. It makes sense as optical character recognition (OCR) is a big timesaver compared to manually transcribing text in an image.

Meanwhile, “Look Up” and “Translate” are more secondary and require an explicit confirmation to view rather than all information being passively surfaced at once. Apple is doing visual search for “recognized objects and scenes.” It can recognize pets/breeds, flower types, art, books, nature, and landmarks.

Visual search is a useful capability to have, but it’s more “fun” than something you’ll use every day. Apple’s priorities very much reflect people’s usage of Google Lens today. That said, visual lookup is the future. While it might be too late for Apple to make its own web search engine, today’s announcement reflects how it very much wants to own AR search, lest it be dependent on Google when the AR glasses form factor emerges.

Another feature that will excel on wearables is AR navigation. Apple showed off the ability to hold up your iPhone and scan the buildings in the area to “generate a highly accurate position.” Apple Maps then proceeds to show detailed directions, like large arrows and street names.

Like Live View, Apple is first focusing on directions. Google Maps has already moved past that and at I/O 2021 announced plans to let you bring up Live View outside of navigation to scan your surroundings and see place details.

Apple’s last announcement in the AR space today is the ability to take pictures of an object and turn them into a 3D model on macOS.

After iOS 15 launches this fall, AR utilities will be commonplace on the two biggest mobile platforms. These capabilities are useful on phones, but they will be truly useful on glasses. It’s clear that both Apple and Google are building out the accuracy of these services before they take center stage on the next form factor. It will take some time to get there, but the groundwork is actively being laid and everyone can soon preview this future.

Author: Abner Li

Source: 9TO5Google