As OpenAI continues to drive virality with the content produced by Sora, its yet-to-launch AI video platform, competitors are going all in to raise the bar with their respective offerings. Just a few days ago, Pika Labs introduced lip-sync to its product. And now, an entirely new AI video startup, “Haiper,” has emerged from stealth with $13.8 million in seed funding from Octopus Ventures.

Founded by former Google DeepMind researchers Yishu Miao (CEO) and Ziyu Wang, London-based Haiper offers a platform that allows users to generate high-quality videos from text prompts or animate existing images. The platform has its own visual foundation model under the hood and takes on existing AI video tools in the market such as Runway and Pika Labs. However, from early tests, it still appears to lag behind what OpenAI has to offer with Sora.

Haiper plans to use the funding to scale its infrastructure and improve its product, ultimately building towards an AGI capable of internalizing and reflecting human-like comprehension of the world.

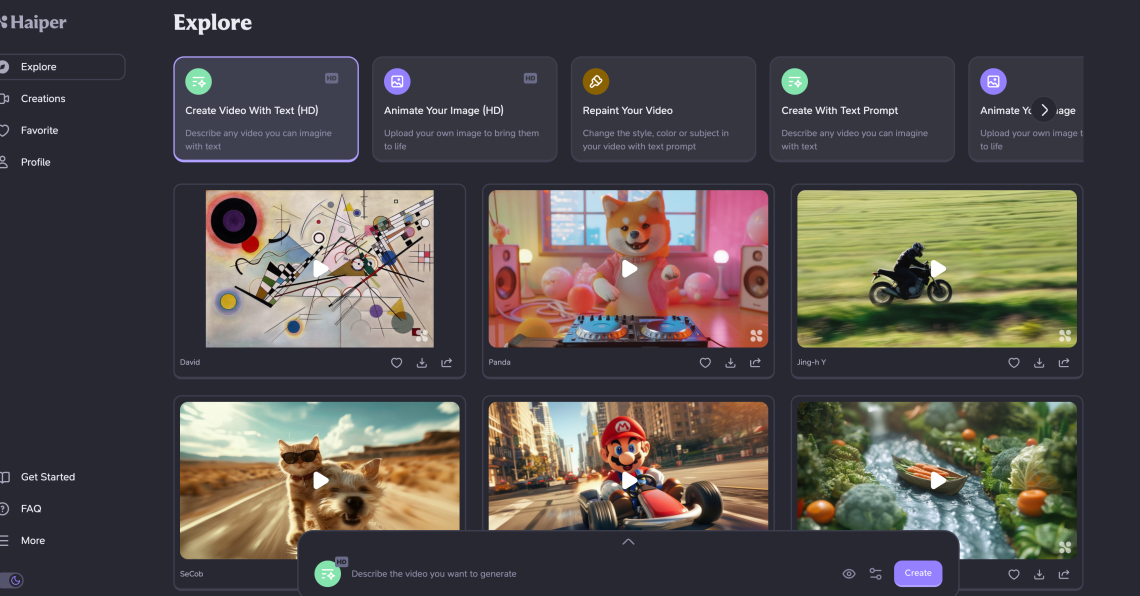

Much like Runway and Pika, Haiper in its current form provides a web platform with a straightforward interface where users enter a text prompt to start creating AI videos of anything they can imagine. The platform currently offers tools to generate videos in both SD and HD quality, although HD content is restricted to two seconds while SD can go up to four seconds. The lower-quality video tool also comes with the option to control motion levels.

When we tested the product, the output for HD videos was more consistent, probably due to the shorter length, while the lower-quality videos it produced were often blurry, with the subject changing shape, size and color, especially at higher levels of motion. There’s also no option to extend the generations, as seen in Runway, although the company claims it plans to launch the capability soon.

In addition to text-to-video features, the platform also provides tools to let users upload and animate existing images or repaint a video, changing its style, background color, elements, or subject with a text prompt.

Haiper claims that the platform and the proprietary visual foundation model underpinning it can cater to a wide range of use cases, from individual applications such as content for social media to business-centric uses like generating content for a studio. However, the company has not shared anything about its commercialization plan and continues to offer the technology for free.

With this funding, Haiper plans to evolve its infrastructure and product with the ultimate goal of building an artificial general intelligence (AGI) with full perception abilities. The investment takes the total capital raised by the company to $19.2 million.

In the next few months, Haiper plans to iterate on user feedback and release a series of large trained models that will enhance the quality of AI video outputs, potentially closing the gap with rival offerings available in the market.

As the company scales this work, it will look to enhance the models’ understanding of the world, essentially creating AGI that could replicate the emotional and physical elements of reality – covering the tiniest visual aspects, including light, motion, texture and interactions between objects – to create true-to-life content.

“Our end goal is to build an AGI with full perceptual abilities, which has boundless potential to assist with creativity. Our visual foundation model will be a leap forward in AI’s ability to deeply understand the physics of the world and replicate the essence of reality in the videos it generates. Such advancements lay the groundwork for AI that can understand, embrace, and enhance human storytelling,” Miao said in a statement.

With these next-gen perceptual capabilities, Haiper expects its technology to go beyond content creation and make an impact in other domains, including robotics and transportation. This approach to video AI makes Haiper a company to watch in the red-hot AI domain.

Author: Shubham Sharma

Source: Venturebeat

Reviewed By: Editorial Team