A Tesla Full Self-Driving smear campaign started by a California billionaire running for Senate has released a new attack ad based on an FSD Beta “test” in which the testers apparently failed to realize that they never engaged FSD Beta.

Earlier this year, we reported on Dan O’Dowd, a self-described billionaire and founder of Green Hills Software, a privately owned company that makes operating systems and programming tools.

O’Dowd had launched a Senate campaign in his home state of California, but the tech executive made it quite clear that he is making it a single-issue campaign, and that issue is Tesla’s Full Self-Driving program.

Under the protection of political ads, he invested several million dollars in an ad campaign to attack Tesla’s Full Self-Driving Beta program with the goal of having it banned from public roads in the US.

Yesterday, the campaign, called The Dawn Project, released this ad titled “The Dangers of Tesla’s Full Self-Driving Software”:

As the video and its description explain, O’Dowd’s team claims that this is a test of Tesla’s “latest version of Full Self-Driving Beta software (10.12.2),” where the system crashes into a child-size mannequin:

Our safety test of the capability of Tesla’s Full Self-Driving technology to avoid a stationary mannequin of a small child has conclusively demonstrated that Tesla’s Full Self-Driving software does not avoid the child or even slow down when a child-sized mannequin is in plain view.

However, there’s a real problem with the test: They never activated Tesla’s FSD Beta in the test.

On its website, The Dawn Project describes the test and claims that “Full Self-Driving mode” was “engaged” (steps 3 and 4 in its description are the important ones):

- Road cones were placed on the course in a standard layout to mark a traffic lane 120 yards in length. Road cones in compliance with OSHA standards were used.

- Numerous child-sized mannequins were placed in the middle of the test track lane in profile, as though they were crossing the road. The mannequins were located in the middle of the lane, at the end of the track, clothed in various outfits.

- A professional test driver brought the vehicle up to a speed of forty (40) miles per hour and then engaged Full Self-Driving mode once the car entered the lane defined by the cones.

- The vehicle was driving for over approximately one hundred (100) yards within the lane of cones in Full Self-Driving mode before striking each mannequin.

- The test driver’s hands were never on the wheel, and the test driver did not press the accelerator or apply the brakes during the test.

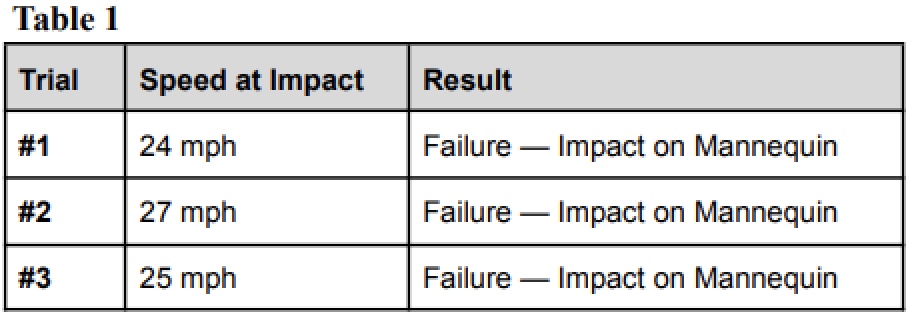

According to their test results, FSD Beta was activated at 40 mph within 100 yards of the dummy, and in three tests, it always struck the dummy between 24 and 27 mph:

These results would point to the driver failing to activate FSD Beta, with the car simply slowing down to those speeds over 100 yards before hitting the target.
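As a rough sanity check on those numbers, here is a minimal sketch of the deceleration implied by going from 40 mph to the reported impact speeds over about 100 yards. It assumes constant deceleration over the whole distance, which is a simplification of ours and not something The Dawn Project published; the function name `implied_deceleration` is purely illustrative.

```python
# Back-of-the-envelope check, assuming constant deceleration (our assumption,
# not part of The Dawn Project's published data).
MPH_TO_MPS = 0.44704   # exact conversion: 1 mph = 0.44704 m/s
YARD_TO_M = 0.9144     # exact conversion: 1 yard = 0.9144 m
G = 9.80665            # standard gravity in m/s^2


def implied_deceleration(v_start_mph, v_end_mph, distance_yd):
    """Return the constant deceleration (m/s^2) that takes a car from
    v_start_mph to v_end_mph over distance_yd, using v1^2 = v0^2 - 2*a*d."""
    v0 = v_start_mph * MPH_TO_MPS
    v1 = v_end_mph * MPH_TO_MPS
    d = distance_yd * YARD_TO_M
    return (v0 ** 2 - v1 ** 2) / (2 * d)


if __name__ == "__main__":
    # Impact speeds reported for the three runs: 24, 27, and 25 mph.
    for impact_mph in (24, 27, 25):
        a = implied_deceleration(40, impact_mph, 100)
        print(f"40 -> {impact_mph} mph over 100 yd: {a:.2f} m/s^2 ({a / G:.2f} g)")
```

Under that assumption, all three runs work out to roughly 1 m/s², or about a tenth of a g, which is a gentle, steady slowdown and far below what hard braking looks like.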

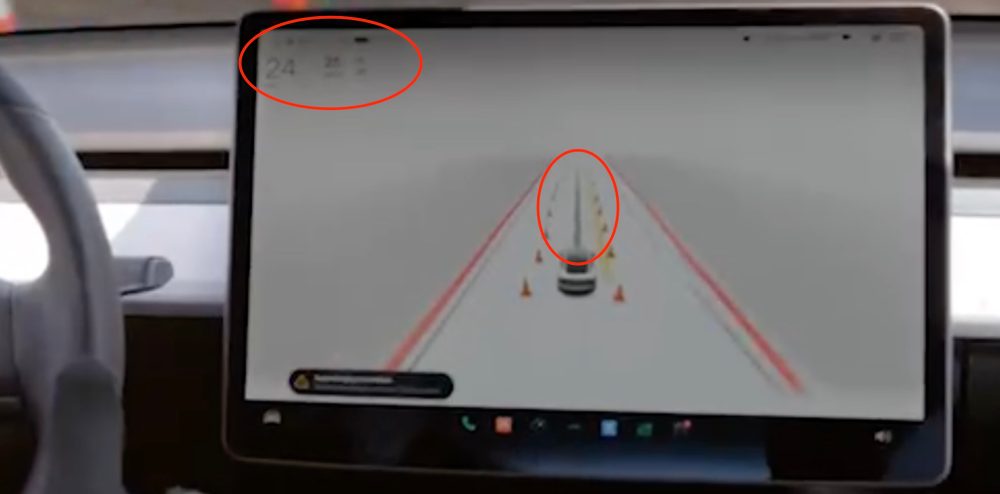

Sure enough, The Dawn Project’s own video of the test shows the driver “activating” FSD Beta by pressing on the Autopilot stalk, but we can clearly see that it didn’t activate: the course prediction line stays grey, and the Autopilot steering wheel icon doesn’t appear at the top left:

On top of that, the vehicle keeps displaying an alert that can’t be read due to the resolution of the video. It’s possible that the alert is related to FSD Beta.

The vehicle does appear to be equipped with FSD Beta, or at least FSD Beta visualization, but it clearly wasn’t activated during this test.

The explanation is likely quite simple. Tesla FSD Beta still relies on map data, and this test was performed on a closed course at Willow Springs International Raceway in Rosamond, California, which may not be covered by that map data.

Electrek reached out to The Dawn Project to point out that, contrary to its claim, Tesla FSD Beta was never “engaged” during the test, based on its own footage in the ad.

They got back to me, but they could only provide an affidavit from the driver who conducted the test, Art Haynie, who claims in the sworn statement that he believed FSD Beta was activated:

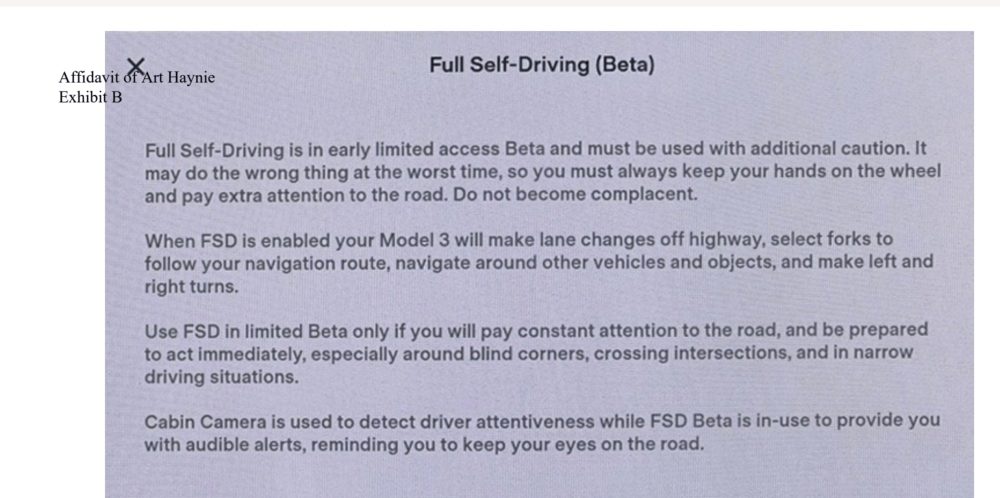

The affidavit mentions that there’s an “Exhibit B” that showed a screen with a warning “regarding the full self-driving (Beta)” that the vehicle showed when it was “in full self-driving mode.”

However, when I looked at Exhibit B, it was only a screenshot of the warning that appears when you enable FSD Beta in the settings, not the one shown when you activate or engage it:

This warning doesn’t appear when you activate the feature while driving and doesn’t prove in any way that FSD Beta was activated during the test.

The Dawn Project’s PR team didn’t respond when I showed them a screenshot of their own video showing that FSD Beta was not engaged, nor when I asked if they still planned on running the ad, which already has over 240,000 views on YouTube since being posted yesterday.

Update: The Dawn Project has since released additional footage that doesn’t appear in its ad, in which we can see that they were able to activate FSD. However, the footage is inconsistent with the results published about the test and shown in the ad.

For example, in the first run, we can see that FSD sends an alert to grab the wheel way ahead of impact and the impact happens under 20 mph, which is inconsistent with the results claiming that impact happened at 24, 27, and 25 mph:

Therefore, it’s clear that The Dawn Project is manipulating the results and footage of those tests, and the ad clearly shows that FSD Beta is not active.

Electrek’s Take

Look, everyone who has been following this blog knows that I’m no FSD Beta apologist. I find the system impressive, but I know it has serious issues.

I recently compared its performance to “the equivalent of a 14-year-old who has been learning to drive for the last week and sometimes appears to consume hard drugs.” To be fair to the Tesla team, you could give me $1 billion and a thousand years, and I couldn’t get a car to drive like a 14-year-old on drugs.

I do believe that the system’s computer vision is extremely good, but some of the decision-making is really questionable.

That said, you can’t produce a test of FSD Beta where you don’t even activate FSD Beta properly. The Dawn Project and O’Dowd screwed up big time by posting this. They should take it down right now, considering that at this point they can’t claim they don’t know the test was a failure.

Author: Fred Lambert

Source: Electrek