
Scientists from the University of California, Irvine have developed a camera system that combines artificial intelligence (AI) with an infrared camera to render full-color photos even in complete darkness.

Human vision perceives light in what is known as the “visible spectrum,” wavelengths between roughly 400 and 700 nanometers. Infrared light lies beyond 700 nanometers and is invisible to humans without special equipment. Many night vision systems detect infrared light and convert it into a digital display that gives the viewer a monochromatic view.

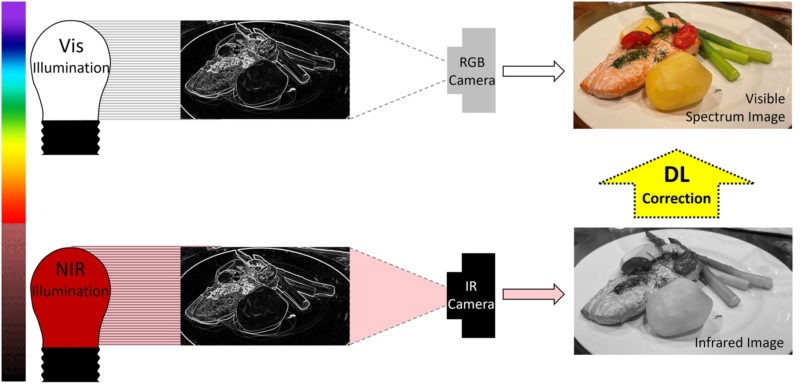

The scientists set out to take that process one step further by feeding the infrared data to an AI algorithm that predicts color, rendering images as they would appear under visible light.

Typical night vision systems render scenes as a monochromatic green display, while newer systems use ultrasensitive cameras to detect and amplify whatever visible light is present. According to the scientists, computer vision work on low-light imaging has used image enhancement and deep learning to help detect and characterize objects from infrared data, but not to accurately reconstruct how the same scene would look in the visible spectrum. They want to change that.

“We sought to develop an imaging algorithm powered by optimized deep learning architectures whereby infrared spectral illumination of a scene could be used to predict a visible spectrum rendering of the scene as if it were perceived by a human with visible spectrum light,” the scientists explain in a research paper published in Plos One.

“This would make it possible to digitally render a visible spectrum scene to humans when they are otherwise in complete ‘darkness’ and only illuminated with infrared light.”

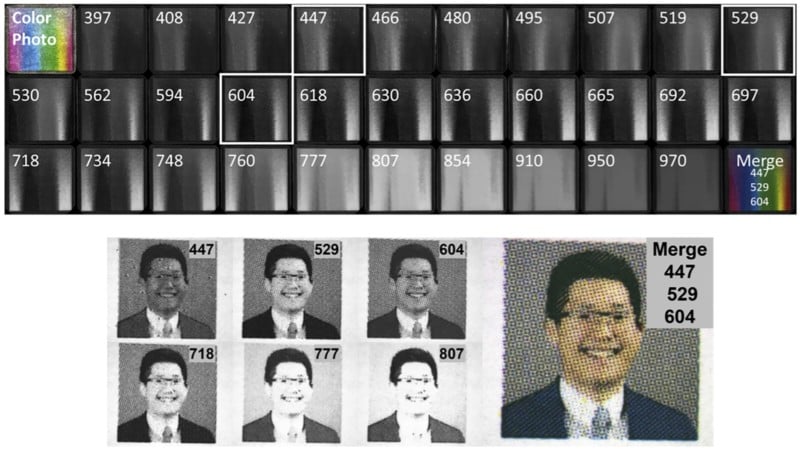

To achieve this, the scientists used a monochromatic camera sensitive to both visible and near-infrared light to build a dataset of printed images of faces captured under multispectral illumination. The illuminants spanned standard visible red (604 nm), green (529 nm), and blue (447 nm) as well as three infrared wavelengths (718, 777, and 807 nm).
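To make the setup more concrete, here is a minimal sketch of how such captures could be organized, assuming each illuminant yields one grayscale frame from the monochrome camera. Frames lit by the three infrared wavelengths are stacked as the model input, and the frames lit by red, green, and blue are stacked as the color target. The dictionary keys, the 700 nm cutoff constant, and the `split_captures` helper are hypothetical illustrations, not the paper's actual data pipeline.

```python
import numpy as np

# Illuminant wavelengths (nm) as listed in the article; key names are hypothetical.
ILLUMINANTS_NM = {"blue": 447, "green": 529, "red": 604,
                  "ir_718": 718, "ir_777": 777, "ir_807": 807}

VISIBLE_CUTOFF_NM = 700  # approximate upper edge of human vision


def split_captures(captures):
    """Split per-illuminant monochrome frames into an IR input stack and an RGB target.

    captures: dict mapping illuminant name -> 2-D array of shape (H, W).
    Returns (ir_stack, rgb_target) with shapes (H, W, n_ir) and (H, W, 3).
    """
    # Infrared channels are the illuminants above the visible cutoff, ordered by wavelength.
    ir_names = sorted(
        (name for name, wl in ILLUMINANTS_NM.items() if wl > VISIBLE_CUTOFF_NM),
        key=ILLUMINANTS_NM.get,
    )
    ir_stack = np.stack([captures[name] for name in ir_names], axis=-1)
    # The visible-light frames, in R, G, B order, form the color target.
    rgb_target = np.stack([captures[c] for c in ("red", "green", "blue")], axis=-1)
    return ir_stack, rgb_target
```

With six frames of shape (H, W), this yields an (H, W, 3) infrared input and an (H, W, 3) color target, so a model only ever sees wavelengths that are invisible to the human eye.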

“Conventional cameras acquire blue (B), green (G), or red (R) pixels of data to produce a color image perceptible to the human eye. We investigated if a combination of infrared illuminants in the red and near-infrared (NIR) spectrum could be processed using deep learning to recompose an image with the same appearance as if it were visualized with visible spectrum light. We established a controlled visual context with limited pigments to test our hypothesis that DL can render human-visible scenes using NIR illumination that is, otherwise, invisible to the human eye.”

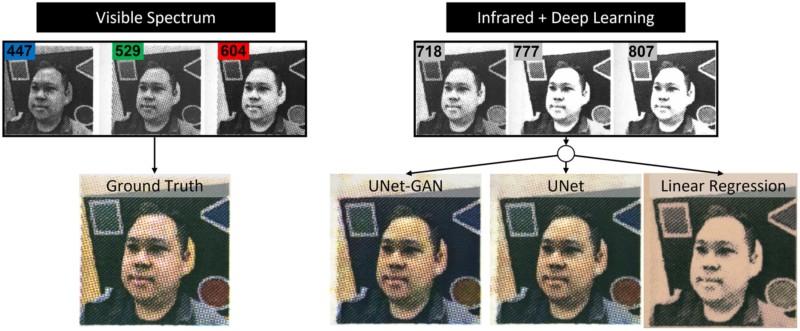

The team optimized a convolutional neural network (CNN) to predict visible spectrum images from near-infrared information alone. The researchers describe the study as a first step toward predicting human vision from near-infrared illumination.

“To predict RGB color images from individual or combinations of wavelength illuminations, we evaluated the performance of the following architectures: a baseline linear regression, a U-Net inspired CNN (UNet), and a U-Net augmented with adversarial loss (UNet-GAN),” they explain.
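For a sense of what the simplest of those approaches involves, the sketch below fits a linear regression baseline that maps each pixel's infrared intensities to RGB values by ordinary least squares. It assumes the baseline works pixel by pixel with an added bias term and that intensities are scaled to [0, 1]; the paper's exact formulation may differ, and `fit_linear_baseline` and `predict_rgb` are hypothetical names.

```python
import numpy as np


def _design_matrix(ir):
    """Flatten (H, W, n_ir) to one row per pixel and append a bias column of ones."""
    x = ir.reshape(-1, ir.shape[-1])
    return np.hstack([x, np.ones((x.shape[0], 1))])


def fit_linear_baseline(ir, rgb):
    """Fit a per-pixel affine map from IR intensities to RGB by least squares.

    ir: (H, W, n_ir) array; rgb: (H, W, 3) array.
    Returns a weight matrix of shape (n_ir + 1, 3); the last row is the bias.
    """
    x = _design_matrix(ir)
    y = rgb.reshape(-1, 3)
    weights, *_ = np.linalg.lstsq(x, y, rcond=None)
    return weights


def predict_rgb(ir, weights):
    """Apply the fitted map to every pixel and clip to the [0, 1] intensity range."""
    pred = _design_matrix(ir) @ weights
    return np.clip(pred, 0.0, 1.0).reshape(*ir.shape[:-1], 3)
```

Because this map looks at one pixel at a time, it cannot use the surrounding image context. A convolutional network such as a U-Net can draw on neighboring pixels, which is one plausible reason the CNN-based variants would be expected to do better than this baseline.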

“Further work can profoundly contribute to a variety of applications including night vision and studies of biological samples sensitive to visible light,” the scientists say.

As impressive as the initial results are, the work is far from complete. So far, the system has only been trained and tested on printed images of human faces.

“Human faces are, of course, a very constrained group of objects, if you like. It doesn’t immediately translate to colouring a general scene,” Professor Adrian Hilton, Director of the Centre for Vision, Speech and Signal Processing (CVSSP) at the University of Surrey, tells New Scientist.

“As it stands at the moment, if you apply the method trained on faces to another scene, it probably wouldn’t work, it probably wouldn’t do anything sensible.”

Still, with more input data and further training, the system may become more accurate and reliable.

The research paper titled “Deep learning to enable color vision in the dark” can be read on Plos One.

Image credits: Header photo licensed via Depositphotos.

Author: Jaron Schneider

Source: Petapixel