Researchers have developed a novel way to give any modern digital camera the ability to calculate depth with a low-power and compact optical device.

LiDAR, which stands for “light detection and ranging,” gauges depth by sending out pulses of light and measuring how long they take to return. It has been a game-changer for everyone from car manufacturers, which deploy it to make driver-assist features more powerful and accurate, to drone makers, which use it to help drones avoid obstacles in their flight path. Even smartphones can use the sensor data provided by LiDAR to determine an object’s position in 3D space and create an artificial depth of field.
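The pulse-timing idea described above comes down to one line of arithmetic: the light covers the distance twice, so the range is half the round-trip time multiplied by the speed of light. The short Python sketch below illustrates that relation only; the function names are hypothetical and it does not model any particular LiDAR product.

```python
# Minimal sketch of pulsed time-of-flight ranging (illustrative only).
# Function names are hypothetical and not taken from any LiDAR library.

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact value, fixed by the SI definition


def range_from_round_trip(round_trip_s: float) -> float:
    """Return the distance in meters for a measured round-trip time in seconds."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels out and back, so the one-way range is half the path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_for_range(distance_m: float) -> float:
    """Return the round-trip time in seconds for a target at distance_m meters."""
    if distance_m < 0:
        raise ValueError("distance cannot be negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    for distance in (1.0, 20.0):
        nanoseconds = round_trip_for_range(distance) * 1e9
        print(f"{distance:5.1f} m -> round trip of {nanoseconds:.1f} ns")
```

A target one meter away returns the pulse in under 7 nanoseconds, and one 20 meters away in about 133 nanoseconds, which is why ranging hardware needs such precise timing.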

LiDAR alone isn’t used to take pictures, however. For that, CMOS sensors are typically employed. CMOS sensors are great for recording visual information at high resolution but don’t have the ability to see beyond two dimensions.

“The problem is CMOS image sensors don’t know if something is one meter away or 20 meters away. The only way to understand that is by indirect cues, like shadows, figuring out the size of the object,” Amin Arbabian, associate professor of electrical engineering at Stanford, tells Popular Science.

Arbabian says CMOS sensors see everything in 3D space as flat, with no sense of depth. But with some of the features of LiDAR, they could learn to see depth. To that end, Arbabian and his team developed a hybrid system that takes the best features of both a CMOS image sensor and a LiDAR sensor to modulate the amplitude, frequency, and intensity of light coming into the camera and analyze it for not only color, brightness, and white balance, but also range data.

In the full research paper published in Nature Communications, the team explains that it placed an acoustic modulator between the lens and the camera. This transparent crystal is made out of lithium niobate and is driven through two transparent electrodes. Working in concert with the image sensor, the system can modulate the light coming through the lens and determine where an object is.

The light coming from the scene is sent through the modulator. Using the two electrodes, the modulator sends current through the piezoelectric crystal, turning the light on and off millions of times per second. By doing so and measuring how long the light takes to return, the system can work out the distance the light has traveled and, from that, the range to the target.
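One common way to turn rapid on-off modulation into a distance is to measure how far the returning signal lags the outgoing one in phase. The Python sketch below shows that generic textbook relation for intensity-modulated ranging; it is not the Stanford team's published method, and the function names and the 1 MHz modulation frequency are assumptions chosen purely for illustration.

```python
# Generic sketch of phase-based ranging with intensity-modulated light.
# Not the method from the research paper; names and values are hypothetical.
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact value, fixed by the SI definition


def range_from_phase(phase_rad: float, mod_freq_hz: float) -> float:
    """Return the range in meters implied by a phase lag at a modulation frequency."""
    if mod_freq_hz <= 0:
        raise ValueError("modulation frequency must be positive")
    # Phase is only known modulo one full cycle, so wrap it into [0, 2*pi).
    wrapped = phase_rad % (2.0 * math.pi)
    # A full 2*pi lag corresponds to one modulation period of round-trip travel.
    return SPEED_OF_LIGHT_M_PER_S * wrapped / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz: float) -> float:
    """Return the largest range in meters measurable before the phase wraps around."""
    if mod_freq_hz <= 0:
        raise ValueError("modulation frequency must be positive")
    return SPEED_OF_LIGHT_M_PER_S / (2.0 * mod_freq_hz)


if __name__ == "__main__":
    mod_freq_hz = 1.0e6  # assumed modulation frequency, for illustration only
    print(f"unambiguous range: {unambiguous_range(mod_freq_hz):.1f} m")
    print(f"half-cycle lag:    {range_from_phase(math.pi, mod_freq_hz):.1f} m")
```

The trade-off this exposes is that a higher modulation frequency gives finer distance resolution for the same phase accuracy, but shortens the distance at which the phase wraps and the reading becomes ambiguous.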

Paired with a digital camera, the modulator produces its own set of image data that tells the camera the distance to everything in the frame, which lets the camera compute depth.

The researchers think this could significantly expand what cameras are able to do, and they also believe they can make the device smaller and cheaper than conventional LiDAR sensors.

Applications for a low-cost version of the modulator could range from improving smartphone cameras, allowing them to create more realistic background defocus, to letting a camera build a virtual recreation of what it sees for use in augmented reality (AR) and virtual reality (VR) applications.

The modulator would also make it possible to create megapixel-resolution LiDAR for extremely sharp and accurate data. This higher-resolution LiDAR would be able to identify targets at a greater range and tell whether they are moving toward or away from the sensor, a great benefit to autonomous driving systems that must operate safely without human supervision.

As self-driving cars become more of a possibility, ultra-smart and reliable image sensors that can perceive depth while a car is moving toward an object become a critical component of safe, autonomous travel.

Currently, the two systems work in concert, but the Stanford team hopes to develop a single system that can replace the aging CMOS design and make cameras and other systems more powerful and flexible.

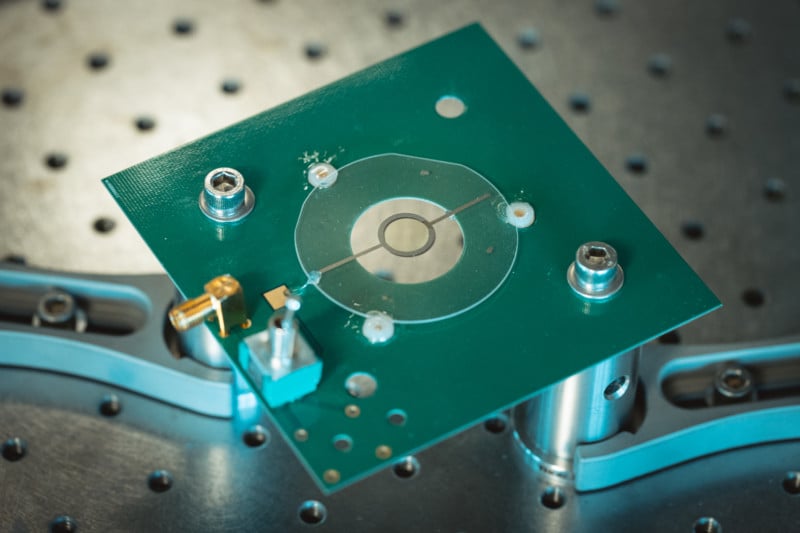

Image credits: Header photo depicts the lab-based prototype LiDAR system that the research team built, which successfully captured megapixel-resolution depth maps using a commercially available digital camera. | by Andrew Brodhead/Stanford University

Author: James DeRuvo

Source: PetaPixel