Nvidia has completed its acquisition of Run:ai, a software company that makes it easier for customers to orchestrate GPU clouds for AI, and said that it would open-source the software.

The purchase price wasn’t disclosed, but reports pegged it at $700 million when Nvidia first announced the deal in April. Run:ai posted the deal news on its website today and also said that Nvidia plans to open-source the software. Run:ai’s software remotely schedules Nvidia GPU resources for AI in the cloud.

Neither company explained why Run:ai will open-source its platform, but it’s probably not hard to figure out. Since Nvidia has grown to be the number one maker of AI chips, its market value has soared to $3.56 trillion, making it the most valuable company in the world. That’s great for Nvidia, but the resulting antitrust scrutiny makes it harder for the company to acquire others.

A spokesperson for Nvidia said in a statement only that “We’re delighted to welcome the Run:ai team to Nvidia.” When Microsoft acquired Activision Blizzard for $68.7 billion, it appeased antitrust regulators by licensing Activision’s Call of Duty game to other platforms for a decade to address worries that the company would become too powerful in gaming. The same might be happening here.

Run:ai founders Omri Geller and Ronen Dar said in a press release that open-sourcing its software will help the community build better AI, faster.

“While Run:ai currently supports only Nvidia GPUs, open-sourcing the software will enable it to extend its availability to the entire AI ecosystem,” Geller and Dar said.

They said they will continue to help their customers get the most out of their AI infrastructure and offer the ecosystem maximum flexibility, efficiency and utilization for GPU systems, wherever they are: on-prem, in the cloud through native solutions, or on Nvidia DGX Cloud, co-engineered with leading CSPs.

The founders also said, “True to our open-platform philosophy, as part of Nvidia, we will keep empowering AI teams with the freedom to choose the tools, platforms, and frameworks that best suit their needs. We will continue to strengthen our partnerships and work alongside the ecosystem to deliver a wide variety of AI solutions and platform choices.”

The Israel-based company said its goal when it was founded in 2018 was to be a driving force in the AI revolution and empower organizations to unlock the full potential of their AI infrastructures.

“Over the years, our world-class team has achieved milestones that we could only dream of back then. Together, we’ve built innovative technology, an amazing product, and an incredible go-to-market engine,” the founders said.

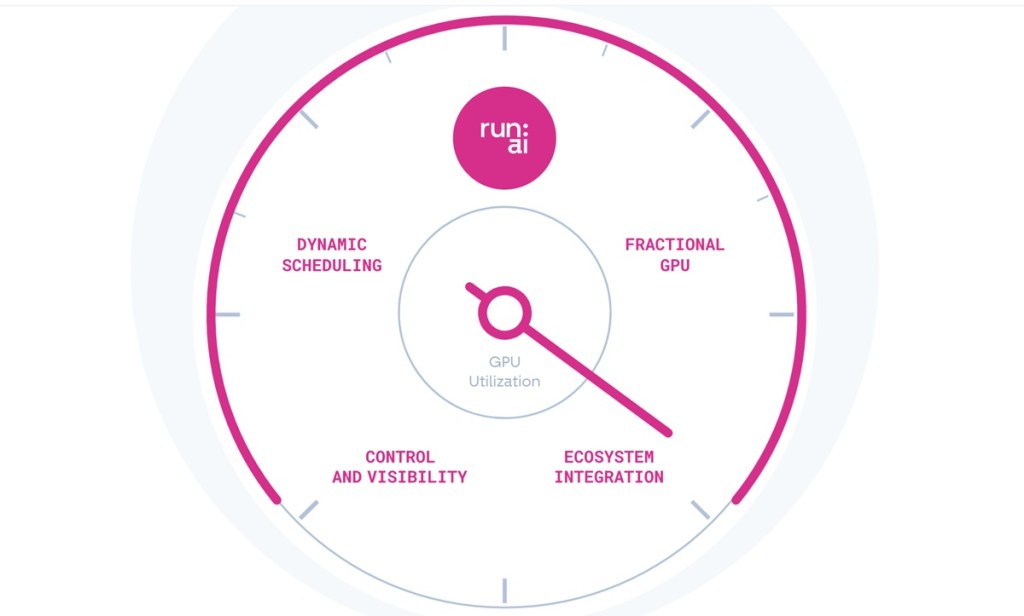

Run:ai helps customers orchestrate their AI infrastructure, increase efficiency and utilization, and boost the productivity of their AI teams.

“We are thrilled to build on this momentum, now as part of Nvidia. AI and accelerated computing are transforming the world at an unprecedented pace, and we believe this is just the beginning,” the Run:ai founders said. “GPUs and AI infrastructure will remain at the forefront of driving these transformative innovations and joining Nvidia provides us an extraordinary opportunity to carry forward a joint mission of helping humanity solve the world’s greatest challenges.”

Nvidia has long made graphics chips, and those chips have become far more useful in recent years for running AI software. Now the company is also emphasizing software, and this acquisition is aimed at giving customers maximum choice, efficiency and flexibility in GPU orchestration software. Nvidia and Run:ai have been working together since 2020 and have joint customers.

TLV Partners led the seed round for Run:ai in 2018. Rona Segev, managing director of TLV, said in a statement, “The AI market in early 2018 seemed like a different world. OpenAI was still a research company and Nvidia’s market cap was ‘only’ around $100 billion. We met Omri and Ronen who painted a picture for us of what the future of AI would look like. In their vision of the future, AI was ubiquitous.”

Segev added, “Everyone on the planet would be interacting with AI daily, and it would be obvious that every company would be leveraging AI in one way or another. The only thing preventing that vision from becoming a reality, according to them, was the lack of efficiency and [the] costs associated with training AI models and running them in production on multiple GPU clusters. To solve this problem, Omri and Ronen pitched an idea of creating an orchestration layer between AI models and GPUs that would enable a much more efficient use of the underlying compute resources leading to faster training times and significantly reduced costs.”

And Segev said, “Of course, this was all theoretical at the time as they hadn’t yet incorporated a company, let alone a product. We didn’t know much about the industry at the time. But there was something special about Omri and Ronen. They had a unique combination of intellect, charm, craziness and humility that created the perfect recipe for the type of founders we’re looking to back.”

Author: Dean Takahashi

Source: VentureBeat

Reviewed By: Editorial Team