NeuralCam is an app that aims to bring to the iPhone the kind of ultra-low-light capabilities we’ve seen in some recent Android smartphones.

Modern iPhones have gotten better and better at handling low-light photos. I put that to the test soon after I got my iPhone X, and wrote then about how impressed I was when testing it on some after-sunset shots…

Normally, low light means noise — visible grain in the photos — while shooting through glass degrades the quality, especially through double glazing like this. I did switch off most lighting to minimize reflections, and held the phone flat to the glass, though you do see a little reflection from the kitchen lighting in the background in the secondary glazing.

All I’ve done in terms of editing is a crop and a bit of saturation boost [and the result, below] is truly stunning quality. There’s no visible grain at all. It’s well exposed (I didn’t adjust this at all). It’s sharp. The colors look great.

But once you get to near-total darkness, the iPhone really struggles. So NeuralCam borrows a technique used by professional photographers for decades: you take a series of identical shots and then “stack” them, pulling out all the available light from each, until you end up with a decently lit shot.

However, doing this manually is fiddly. You need your camera on a tripod to eliminate camera movement. You need a scene that has no movement in it (otherwise people and vehicles, for example, will be in a different place in each shot). And you need editing software to stack the frames.
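To see why stacking helps, here is a minimal Python sketch of the tripod case, where every frame shows exactly the same scene. It assumes stacking means a simple per-pixel average and that sensor noise is random from frame to frame; the scene values, noise level, and function names are all hypothetical, and real stacking software may combine frames differently.

```python
import random

def stack_frames(frames):
    """Average a list of equally sized frames pixel by pixel."""
    count = len(frames)
    return [sum(pixels) / count for pixels in zip(*frames)]

def noisy_frame(scene, noise_sigma, rng):
    """Simulate one dark exposure: the true scene plus random sensor noise."""
    return [value + rng.gauss(0, noise_sigma) for value in scene]

def rms_error(frame, scene):
    """Root-mean-square difference between a frame and the true scene."""
    squared = [(a - b) ** 2 for a, b in zip(frame, scene)]
    return (sum(squared) / len(squared)) ** 0.5

rng = random.Random(42)
# Hypothetical dim scene: a single row of 256 pixel brightness values.
scene = [10.0 + (i % 8) for i in range(256)]
# Sixteen identical shots, each with its own random grain.
frames = [noisy_frame(scene, 5.0, rng) for _ in range(16)]

single_error = rms_error(frames[0], scene)
stacked_error = rms_error(stack_frames(frames), scene)
print(f"single frame error: {single_error:.2f}")
print(f"stacked error:      {stacked_error:.2f}")
```

Because the grain differs in each shot while the scene does not, averaging cancels much of the noise and leaves the scene intact. This only works if nothing moves between frames, which is exactly why the manual approach needs a tripod and a static subject.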

What NeuralCam aims to do is completely automate this process in-app on the iPhone.

The basic technique is the same. The app takes multiple shots, and then stacks them. But because it’s likely to be used handheld, it has to automatically align the different shots to eliminate camera movement — and it has to do the best it can, too, to compensate for any movement in the frame, such as people (or cats!) moving.
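NeuralCam doesn't say how it aligns frames, so the following is only an illustrative Python sketch of the general idea, not the app's method. It assumes hand shake shows up as a simple sideways shift of a one-dimensional row of pixels, and it finds that shift by brute force before averaging. The helper names (`best_shift`, `align_and_stack`, `shifted`) are hypothetical, and it makes no attempt to handle subjects moving within the frame.

```python
def best_shift(reference, frame, max_shift):
    """Return the offset s for which frame[i + s] best matches reference[i]."""
    n = len(reference)
    best, best_cost = 0, float("inf")
    # Try small offsets first so that ties favour the least movement.
    for shift in sorted(range(-max_shift, max_shift + 1), key=abs):
        # Compare only the region where both rows overlap at this offset.
        pairs = [(reference[i], frame[i + shift])
                 for i in range(n) if 0 <= i + shift < n]
        cost = sum(abs(a - b) for a, b in pairs) / len(pairs)
        if cost < best_cost:
            best, best_cost = shift, cost
    return best

def align_and_stack(frames, max_shift=4):
    """Align every frame to the first one, then average the overlapping pixels."""
    reference = frames[0]
    n = len(reference)
    totals = [0.0] * n
    counts = [0] * n
    for frame in frames:
        shift = best_shift(reference, frame, max_shift)
        for i in range(n):
            j = i + shift
            if 0 <= j < n:
                totals[i] += frame[j]
                counts[i] += 1
    return [t / c for t, c in zip(totals, counts)]

def shifted(row, offset):
    """Simulate hand shake: move the content `offset` pixels to the right."""
    n = len(row)
    return [row[i - offset] if 0 <= i - offset < n else 0.0 for i in range(n)]

# Hypothetical scene: two bright objects against a dark background (64 pixels).
scene = [0.0] * 20 + [50.0] * 5 + [0.0] * 15 + [80.0] * 3 + [0.0] * 21
frames = [shifted(scene, k) for k in (0, 2, -3, 1)]

naive = [sum(pixels) / len(frames) for pixels in zip(*frames)]
aligned = align_and_stack(frames)
print("naive stack matches scene:  ", naive == scene)
print("aligned stack matches scene:", aligned == scene)
```

Stacking the shaky frames without alignment smears the bright objects across several pixels, while aligning first recovers the scene. Real photos need two-dimensional alignment, and often rotation and local warping too, which is where the fuzziness around moving subjects tends to come from.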

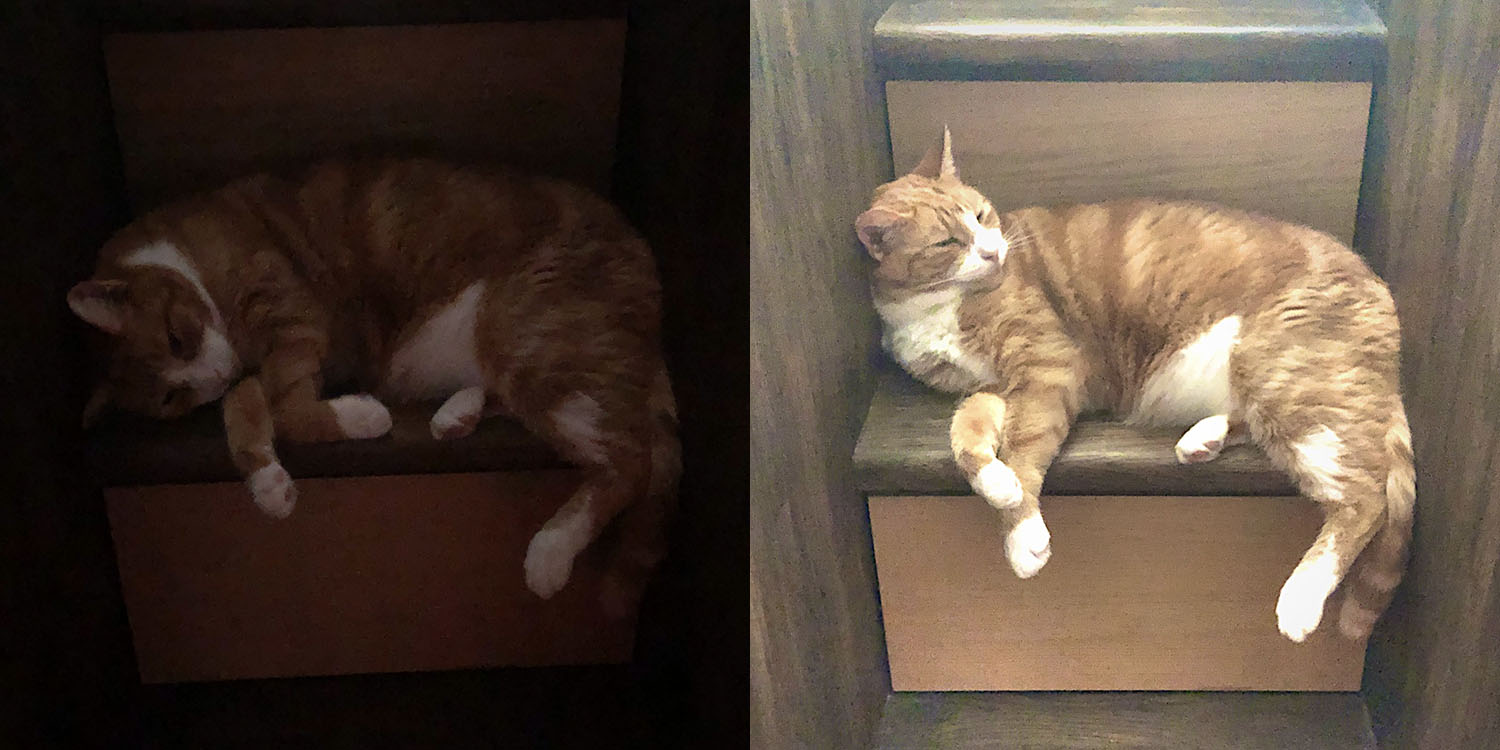

I’ll be testing the app properly, and in particular comparing it with Spectre, but here’s a quick test in an unlit bedroom, with the stock Camera app on the left and NeuralCam on the right.

It can’t perform miracles; it’s not a great shot. It’s very low in contrast, and there’s a fuzziness to it where the app has compensated for movement, giving it a somewhat painting-like look. But it’s certainly very much better than the result from the stock app, and if it’s a choice between a painterly capture of a memory and nothing usable at all, the former could be worth a lot.

NeuralCam also appears to have its own software-focusing method. It doesn’t share any details, but autofocus in very low light is tricky.

iPhones up to the 5S autofocus using a technique known as contrast detection. Imagine you’re taking a photo of a black line on a white wall. When the photo is in focus, there will be a very sharp contrast between the black and the white. If the photo is out of focus, the contrast will be less clear-cut. So the camera just adjusts the focus to maximize contrast between light and dark.
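The black-line-on-a-white-wall example can be sketched in a few lines of Python. This is a toy model rather than Apple's implementation: it assumes the image gets blurrier the further the lens sits from the in-focus position, scores sharpness as the sum of squared differences between neighbouring pixels, and simply tries every lens position. All names and numbers are hypothetical.

```python
def box_blur(row, radius):
    """Blur a row of pixels by averaging each pixel with its neighbours."""
    if radius == 0:
        return list(row)
    n = len(row)
    blurred = []
    for i in range(n):
        window = row[max(0, i - radius): min(n, i + radius + 1)]
        blurred.append(sum(window) / len(window))
    return blurred

def contrast(row):
    """Sharpness score: sum of squared differences between adjacent pixels."""
    return sum((b - a) ** 2 for a, b in zip(row, row[1:]))

def capture(scene, lens_position, in_focus_position):
    """Hypothetical camera: blur grows with distance from the in-focus position."""
    return box_blur(scene, abs(lens_position - in_focus_position))

def contrast_autofocus(scene, in_focus_position, positions):
    """Try every lens position and keep the one that gives the highest contrast."""
    return max(positions,
               key=lambda p: contrast(capture(scene, p, in_focus_position)))

# A black line on a white wall, as one row of pixels.
wall = [255.0] * 30 + [0.0] * 4 + [255.0] * 30
best = contrast_autofocus(wall, in_focus_position=7, positions=range(0, 15))
print(best)  # 7
```

The camera never knows the in-focus position directly. It only sees the contrast score rise and fall as the lens moves, which is why this kind of autofocus can be seen hunting back and forth before it settles.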

However, low-light scenes have very little contrast to start with, making contrast-detection autofocus unreliable. iPhones from the 6 onwards use a more sophisticated technique called phase detection. In a traditional camera, this relies on splitting the light so that the same part of the image is sent to two different focus sensors; on iPhones, the same job is done by dedicated pixels on the image sensor, which Apple calls Focus Pixels. When the image is in focus, the two views will align. That’s better at handling low light, but still not perfect.
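As a rough illustration of the phase-detection idea, the Python sketch below models the two views as copies of the same row of pixels pushed apart in opposite directions when the lens is out of focus. That optics model is a deliberate simplification, and the function names are hypothetical. The point is that a single comparison yields both the size and the direction of the focus error.

```python
def measure_phase_offset(left, right, max_offset):
    """Return the shift, in pixels, that best lines up the two views."""
    n = len(left)
    best, best_cost = 0, float("inf")
    # Try small offsets first so that ties favour "already in focus".
    for offset in sorted(range(-max_offset, max_offset + 1), key=abs):
        pairs = [(left[i], right[i + offset])
                 for i in range(n) if 0 <= i + offset < n]
        cost = sum(abs(a - b) for a, b in pairs) / len(pairs)
        if cost < best_cost:
            best, best_cost = offset, cost
    return best

def split_views(scene, defocus):
    """Hypothetical optics: defocus moves the two views apart by `defocus` pixels each."""
    n = len(scene)

    def moved(offset):
        # Pixels shifted in from outside the row are filled with white wall.
        return [scene[i - offset] if 0 <= i - offset < n else 255.0
                for i in range(n)]

    return moved(-defocus), moved(defocus)

# The same black line on a white wall used to explain contrast detection.
wall = [255.0] * 30 + [0.0] * 4 + [255.0] * 30

for defocus in (3, 0, -2):
    left, right = split_views(wall, defocus)
    offset = measure_phase_offset(left, right, max_offset=8)
    print(f"defocus {defocus:+d} -> measured offset {offset:+d}")
```

An offset of zero means the views align and the image is in focus. A non-zero offset tells the camera which way to move the lens and roughly how far, so it can jump towards focus instead of sweeping through positions as contrast detection does.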

There’s no way to know what software tricks NeuralCam is employing, but as you watch it gaining focus, it definitely appears to be using some kind of app-specific method.

NeuralCam is a $2.99 download from the App Store. We’re told this is an introductory offer, but there’s no word on how long it will last or what the price will be afterwards.

Source: 9TO5Mac
