
NASA’s Mars Ingenuity drone survived a close call that could have ended in disaster. During its sixth flight, a glitch in its camera image delivery pipeline caused the drone’s onboard navigation system to malfunction.

To explain what happened, it helps to first understand how Ingenuity estimates its flight path and motion. While in the air, the drone tracks its motion with an onboard inertial measurement unit (IMU) that measures its accelerations and rotational rates. By integrating those measurements over time, it can estimate where it is, how fast it is moving, and how it is oriented in space. NASA says that the onboard control system reacts to the estimated motions by adjusting control inputs 500 times per second.
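To make the idea concrete, here is a minimal sketch of IMU dead reckoning in one dimension. It is not Ingenuity’s flight software: the function name `dead_reckon`, the 1-D simplification, and the sensor bias value are assumptions chosen for illustration. Only the 500-per-second rate comes from the text above.

```python
# Illustrative only: 1-D dead reckoning from accelerometer samples.
DT = 1.0 / 500.0  # seconds per step, matching the 500 Hz rate quoted above


def dead_reckon(accels, dt=DT, v0=0.0, x0=0.0):
    """Integrate a sequence of accelerations (m/s^2) into (position, velocity)."""
    x, v = x0, v0
    for a in accels:
        v += a * dt  # acceleration -> velocity
        x += v * dt  # velocity -> position
    return x, v


# Hypothetical case: the vehicle is hovering (true acceleration is zero),
# but the accelerometer reports a small constant bias.
BIAS = 0.01  # m/s^2, an assumed value

if __name__ == "__main__":
    for seconds in (1, 10, 60):
        x, v = dead_reckon([BIAS] * (seconds * 500))
        print(f"after {seconds:>2} s: position error {x:.3f} m, velocity error {v:.3f} m/s")
```

With this made-up bias, the position error grows roughly with the square of elapsed time and reaches about 18 m after a minute, even though the simulated vehicle never moved.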

NASA also says that Ingenuity would not be very accurate if it relied entirely on this system.

“Errors would quickly accumulate, and the helicopter would eventually lose its way,” Håvard Grip, Ingenuity’s Chief Pilot at NASA’s Jet Propulsion Laboratory, explains. “To maintain better accuracy over time, the IMU-based estimates are nominally corrected on a regular basis, and this is where Ingenuity’s navigation camera comes in.”

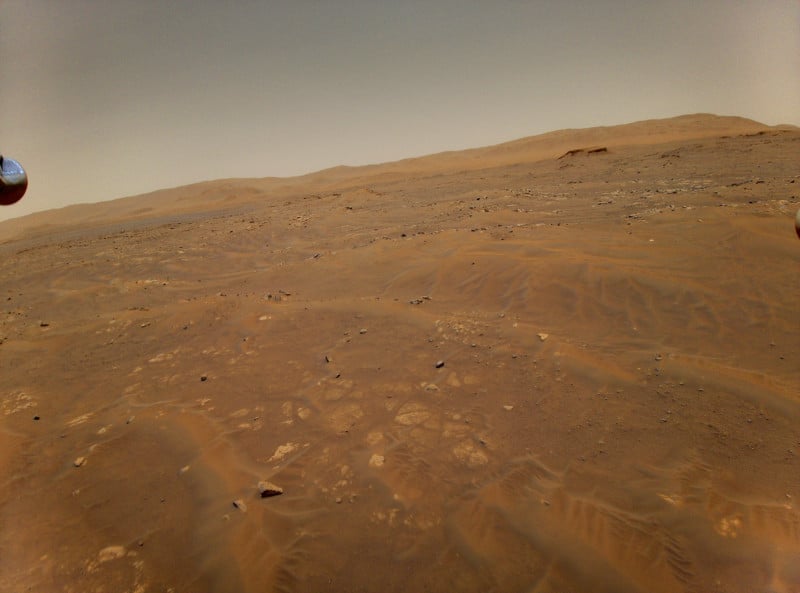

For most of the time it is in the air, Ingenuity’s downward-facing navigation camera takes 30 pictures of the surface per second and feeds that stream into the navigation computer.

“Each time an image arrives, the navigation system’s algorithm performs a series of actions,” Grip details. “First, it examines the timestamp that it receives together with the image in order to determine when the image was taken. Then, the algorithm makes a prediction about what the camera should have been seeing at that particular point in time, in terms of surface features that it can recognize from previous images taken moments before (typically due to color variations and protuberances like rocks and sand ripples). Finally, the algorithm looks at where those features actually appear in the image. The navigation algorithm uses the difference between the predicted and actual locations of these features to correct its estimates of position, velocity, and attitude.”
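The three steps Grip describes can be sketched in a few lines. This is a simplified, one-dimensional illustration and not NASA’s algorithm: the function names, the `(time, position)` history buffer, the single known feature, and the fixed `gain` are all assumptions.

```python
# Illustrative only: timestamp lookup, prediction, and correction in 1-D.
from bisect import bisect_left


def estimate_at(history, t):
    """Interpolate the position estimate at time t from sorted (time, position) pairs."""
    times = [h[0] for h in history]
    i = bisect_left(times, t)
    if i == 0:
        return history[0][1]
    if i == len(history):
        return history[-1][1]
    (t0, x0), (t1, x1) = history[i - 1], history[i]
    return x0 + (x1 - x0) * (t - t0) / (t1 - t0)


def vision_correction(history, image_time, feature_x, observed_offset, gain=0.5):
    """Return a position correction derived from one tracked surface feature."""
    # Step 1: use the timestamp to find where we believed we were at capture time.
    x_est = estimate_at(history, image_time)
    # Step 2: predict where the feature should appear relative to the camera.
    predicted_offset = feature_x - x_est
    # Step 3: compare with where the feature actually appeared.
    mismatch = observed_offset - predicted_offset
    # A feature that looks farther away than predicted means we are behind
    # our estimate, so the correction has the opposite sign of the mismatch.
    return -gain * mismatch


if __name__ == "__main__":
    history = [(0.0, 0.0), (1.0, 1.0)]  # estimated positions over time
    # Hypothetical truth: at t = 0.5 s the vehicle was at 0.4 m, not 0.5 m.
    observed = 2.0 - 0.4  # feature at 2.0 m as seen from the true position
    print(vision_correction(history, 0.5, feature_x=2.0, observed_offset=observed))
```

In the usage example the estimate at the image’s timestamp is 0.5 m while the assumed true position is 0.4 m, so the returned correction is negative and nudges the estimate back toward the truth.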

It’s this image pipeline that suffered a glitch and put the entire system in jeopardy. About 54 seconds into the flight, an error caused a single image to be dropped from the 30-images-per-second stream. More important than the lost image itself, every image that followed arrived with an incorrect timestamp.

“From this point on, each time the navigation algorithm performed a correction based on a navigation image, it was operating on the basis of incorrect information about when the image was taken. The resulting inconsistencies significantly degraded the information used to fly the helicopter, leading to estimates being constantly “corrected” to account for phantom errors,” Grip says. “Large oscillations ensued.”
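A small sketch shows why a wrong timestamp looks like a navigation error even when the motion estimate is perfect. The numbers here are assumptions for illustration: the article does not state the size of the timestamp offset or the flight speed, so the code assumes a one-frame offset (1/30 s) and a hypothetical speed of 4 m/s.

```python
# Illustrative only: a timestamp offset creates a "phantom" error in 1-D.
FRAME_PERIOD = 1.0 / 30.0  # seconds between images at 30 images per second


def position(t, speed):
    """Position of a vehicle moving at constant speed; doubles as a perfect estimate."""
    return speed * t


def mismatch(capture_time, stamped_time, speed, feature_x=100.0):
    """Observed minus predicted feature offset for one image."""
    # What the camera actually saw, at the moment the image was captured.
    observed = feature_x - position(capture_time, speed)
    # What the estimator predicts, using the time written on the image.
    predicted = feature_x - position(stamped_time, speed)
    return observed - predicted


if __name__ == "__main__":
    speed = 4.0  # m/s, hypothetical
    t = 54.0     # seconds into the flight
    print("correct timestamp:", mismatch(t, t, speed))
    print("one-frame offset: ", mismatch(t, t + FRAME_PERIOD, speed))
```

Under these assumptions the mismatch equals the speed multiplied by the timestamp offset, so a faster flight or a larger offset produces a larger phantom error for the estimator to “correct.”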

You can watch the last 29 seconds of Ingenuity’s flight in the clip below:

Luckily, despite this error, Ingenuity was able to touch down safely within 16 feet of its intended landing location. Grip says that one reason was the effort the drone’s flight control engineers put into the programming. Ingenuity has ample “stability margin” that was designed to let it tolerate significant errors without crashing, and that margin turned out to cover errors in timing as well.

Additionally, Grip says that the decision to stop using navigation camera images during the final phase of descent paid off. The team made this design choice to ensure a smooth and continuous set of estimates of the helicopter’s motion during landing. As a result, the errors coming from the camera no longer affected the drone once it began its landing procedure.

“Ingenuity ignored the camera images in the final moments of flight, stopped oscillating, leveled its attitude, and touched down at the speed as designed,” Grip says.
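The design choice of ignoring camera input during the final descent amounts to gating the vision correction on the flight phase. The following is a hypothetical sketch of that idea, with invented names (`step`, `in_final_descent`) and a scalar estimate standing in for the full state.

```python
# Illustrative only: skip camera-based corrections during final descent.
def step(estimate, imu_delta, camera_correction, in_final_descent):
    """Advance a scalar estimate by one update cycle."""
    estimate += imu_delta  # IMU propagation always runs
    if not in_final_descent and camera_correction is not None:
        estimate += camera_correction  # vision update only outside final descent
    return estimate


if __name__ == "__main__":
    # The same faulty camera correction is applied in cruise but ignored in descent.
    print(step(1.0, 0.1, 0.5, in_final_descent=False))
    print(step(1.0, 0.1, 0.5, in_final_descent=True))
```

Once the gate closes, a faulty correction can no longer perturb the estimate, at the cost of letting IMU drift accumulate for the short remainder of the flight.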

“Looking at the bigger picture, Flight Six ended with Ingenuity safely on the ground because a number of subsystems – the rotor system, the actuators, and the power system – responded to increased demands to keep the helicopter flying. In a very real sense, Ingenuity muscled through the situation, and while the flight uncovered a timing vulnerability that will now have to be addressed, it also confirmed the robustness of the system in multiple ways,” he concludes.

“While we did not intentionally plan such a stressful flight, NASA now has flight data probing the outer reaches of the helicopter’s performance envelope. That data will be carefully analyzed in the time ahead, expanding our reservoir of knowledge about flying helicopters on Mars.”

Image credits: Header image by NASA/JPL-Caltech/ASU/MSSS

Author: Jaron Schneider

Source: Petapixel