Let’s talk pixels. Specifically, iPhone 14 pixels. More specifically, iPhone 14 Pro pixels. Because while the headline news is that the latest Pro models offer a 48MP sensor instead of a 12MP one, that’s not actually the most important improvement Apple has made to this year’s camera.

Indeed, of the four biggest changes this year, the 48MP sensor is to me the least important. But bear with me here, as there’s a lot we need to unpack before I can explain why I think the 48MP sensor is far less important than:

- The sensor size

- Pixel binning

- The Photonic Engine

One 48MP sensor, two 12MP ones

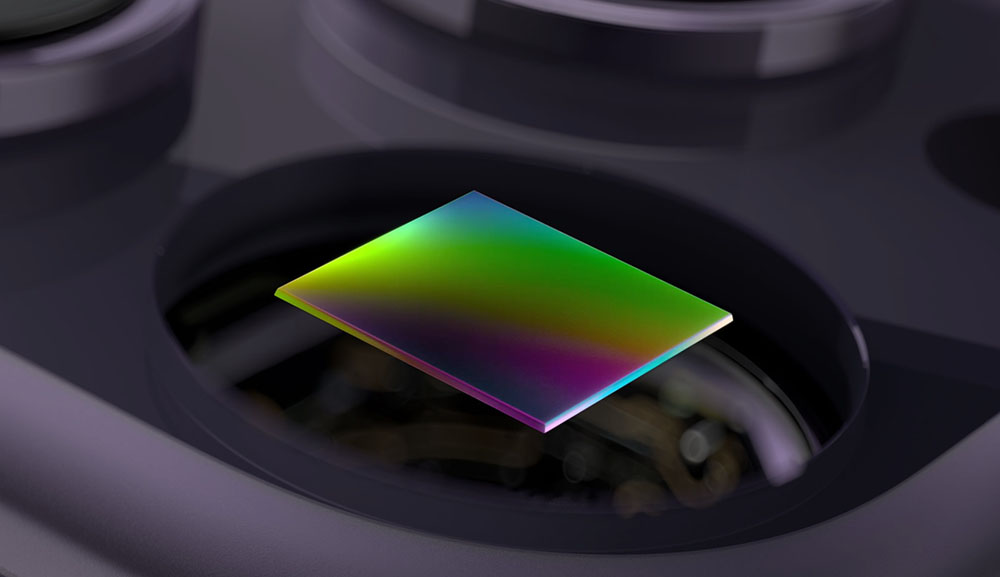

Colloquially, we talk about the iPhone camera in the singular, and then reference three different lenses: main, ultra-wide, and telephoto. We do that because it’s familiar – that’s how DSLRs and mirrorless cameras work, one sensor, multiple (interchangeable) lenses – and because that’s the illusion Apple creates in the camera app, for simplicity.

The reality is, of course, different. The iPhone actually has three camera modules. Each camera module is separate, and each has its own sensor. When you tap on, say, the 3x button, you are not just selecting the telephoto lens, you are switching to a different sensor. When you slide-zoom, the camera app automatically and invisibly selects the appropriate camera module, and then does any necessary cropping.

Only the main camera module has a 48MP sensor; the other two modules still have 12MP ones.

Apple was completely up-front about this when introducing the new models, but it’s an important detail that some may have missed:

For the first time ever, the Pro lineup features a new 48MP Main camera with a quad-pixel sensor that adapts to the photo being captured, and features second-generation sensor-shift optical image stabilization.

The 48MP sensor works part-time

Even when you are using the main camera, with its 48MP sensor, you are still only shooting 12MP photos by default. Again, Apple:

For most photos, the quad-pixel sensor combines every four pixels into one large quad pixel.

The only time you shoot in 48 megapixels is when:

- You are using the main camera (not telephoto or wide-angle)

- You are shooting in ProRAW (which is off by default)

- You are shooting in decent light

If you do want to do this, here’s how. But mostly, you won’t …

Apple’s approach makes sense

You might ask, why give us a 48MP sensor and then mostly not use it?

It makes sense because, in truth, there are very few occasions when shooting in 48MP is better than shooting in 12MP. And since 48MP shots create much larger files, eating up your storage with a ravenous appetite, it wouldn’t make sense to have this as the default.

I can think of only two scenarios where shooting a 48MP image is a useful thing to do:

- You intend to print the photo, in a large size

- You need to crop the image very heavily

That second reason is also a bit questionable, because if you need to crop that heavily, you might be better off using the 3x camera.
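The arithmetic behind that second point is quick to sketch. Assuming the 3x camera frames exactly a third of the main camera’s field of view in each direction (an idealization), cropping a main-camera shot to the same framing keeps only one ninth of its pixels, because the crop shrinks both width and height:

```python
# Simplified model: cropping to emulate an N-x zoom keeps 1/N^2 of the pixels,
# since both the width and the height shrink by a factor of N.

def pixels_after_crop(megapixels: float, zoom_factor: float) -> float:
    """Megapixels left after cropping to emulate `zoom_factor`x zoom."""
    return megapixels / (zoom_factor ** 2)

main_48mp = pixels_after_crop(48, 3)  # 48MP main image cropped to a 3x framing
main_12mp = pixels_after_crop(12, 3)  # 12MP main image cropped the same way
telephoto = 12.0                      # the 3x camera captures that framing natively

print(f"48MP main, cropped to 3x: {main_48mp:.1f}MP")
print(f"12MP main, cropped to 3x: {main_12mp:.1f}MP")
print(f"3x telephoto, uncropped: {telephoto:.1f}MP")
```

This counts pixels only; it ignores differences in sensor size, aperture and processing between the two camera modules.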

Now let’s talk sensor size

When comparing any smartphone camera to a DSLR or high-quality mirrorless camera, there are two big differences.

One of those is the quality of the lenses. Standalone cameras can have much better lenses, both because of physical size, and because of cost. It’s not unusual for a pro or keen amateur photographer to spend a four-figure sum on a single lens. Smartphone cameras of course can’t compete with that.

The second is sensor size. All other things being equal, the larger the sensor, the better the image quality. Smartphones, by the very nature of their size, and all the other tech they need to fit in, have much smaller sensors than standalone cameras. (They also have limited depth, which imposes another substantial limitation on sensor size, but we needn’t get into that.)

A smartphone-sized sensor limits the image quality and also makes it harder to achieve shallow depth of field – which is why the iPhone does this artificially, with Portrait mode and Cinematic video.

Apple’s big sensor + limited megapixel approach

While there are obvious and less obvious limits to the sensor size you can use in a smartphone, Apple has historically used larger sensors than other smartphone brands – which is part of the reason the iPhone was long seen as the go-to phone for camera quality. (Samsung later switched to doing this, too.)

But there’s a second reason. If you want the best quality images possible from a smartphone, you also want the pixels to be as big as possible.

This is why Apple has stuck religiously to 12MP, while brands like Samsung have crammed as many as 108MP into the same size sensor. Squeezing lots of pixels into a tiny sensor means each pixel is smaller and captures less light, which substantially increases noise. This is particularly noticeable in low-light photos.
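To see why, here’s a rough sketch under a deliberately simplified assumption: that noise is dominated by photon shot noise, where a pixel collecting N photons has a signal-to-noise ratio (SNR) of √N. Each pixel’s light collection scales with its area, so splitting the same sensor into nine times as many pixels cuts each pixel’s SNR by a factor of three:

```python
import math

# Simplified model: photon shot noise only. A pixel that collects N photons
# has SNR sqrt(N), and N scales with the pixel's area. Read noise, fill
# factor and image processing are all ignored.

def relative_snr(pixel_area_ratio: float) -> float:
    """Per-pixel SNR relative to a reference pixel, given the area ratio."""
    return math.sqrt(pixel_area_ratio)

# Same sensor area, 108MP instead of 12MP: each pixel gets 12/108 of the area.
area_ratio = 12 / 108
print(f"Each 108MP pixel has {area_ratio:.3f}x the area of a 12MP pixel")
print(f"Per-pixel SNR: {relative_snr(area_ratio):.2f}x (3x the relative noise)")
```

Real sensors add read noise and other effects; the 12MP and 108MP figures are simply the ones quoted above.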

Ok, I took a while to get there, but I can now, finally, say why I think the larger sensor, pixel-binning, and the Photonic Engine are a far bigger deal than the 48MP sensor …

#1: iPhone 14 Pro/Max sensor is 65% larger

This year, the main camera sensor in the iPhone 14 Pro/Max is 65% larger than the one in last year’s model. Obviously that’s still nothing compared to a standalone camera, but for a smartphone camera, that’s (pun intended) huge!

But, as we mentioned above, if Apple squeezed four times as many pixels into a sensor only 65% larger, that would actually give worse quality! Which is exactly why you’ll mostly still be shooting 12MP images. And that’s thanks to …

#2: Pixel-binning

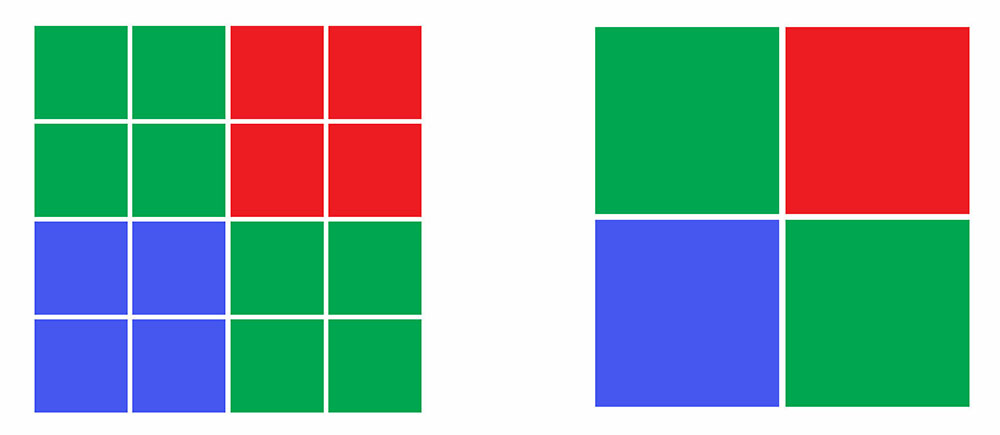

To shoot 12MP images on the main camera, Apple uses a pixel-binning technique. This means that the data from four pixels is converted into one virtual pixel (averaging the values), so the 48MP sensor is mostly being used as a larger 12MP one.

The illustration above is simplified, but it gives the basic idea.
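As a minimal sketch of the idea, here the sensor is treated as a single-channel grid of numbers and each 2x2 block is averaged into one value, as described above. Whether real hardware sums or averages, and how it handles the colour filters over the pixels, are details this deliberately ignores; `bin_2x2` is a hypothetical helper for illustration:

```python
from typing import List

Grid = List[List[float]]

def bin_2x2(pixels: Grid) -> Grid:
    """Combine each 2x2 block of sensor values into one 'quad pixel'.

    Averages the four values. Assumes the grid has even width and height
    and a single channel (colour filters are ignored).
    """
    rows, cols = len(pixels), len(pixels[0])
    if rows % 2 or cols % 2:
        raise ValueError("grid dimensions must be even")
    return [
        [
            (pixels[r][c] + pixels[r][c + 1]
             + pixels[r + 1][c] + pixels[r + 1][c + 1]) / 4
            for c in range(0, cols, 2)
        ]
        for r in range(0, rows, 2)
    ]

# A tiny 4x4 "sensor" becomes a 2x2 image: a quarter of the pixel count,
# just as 48MP becomes 12MP.
sensor = [
    [10, 12, 50, 54],
    [14, 12, 52, 48],
    [0, 4, 90, 94],
    [2, 2, 96, 92],
]
print(bin_2x2(sensor))  # [[12.0, 51.0], [2.0, 93.0]]
```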

What does this mean? Pixel size is measured in microns (one millionth of a meter). Most premium Android smartphones have pixels measuring somewhere in the 1.1 to 1.8 micron range. The iPhone 14 Pro/Max, when using the sensor in 12MP mode, effectively has pixels measuring 2.44 microns. That’s a really significant improvement.
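Pixel size is usually quoted as pitch, the length of one side, so the difference in area, which is what governs how much light each pixel collects, is bigger than the raw numbers suggest. A quick calculation using the figures above, assuming square pixels:

```python
# Pixel pitch (side length) in microns. Area scales with the square of pitch.
binned_pitch = 2.44              # iPhone 14 Pro/Max quad pixel, as quoted above
native_pitch = binned_pitch / 2  # a 2x2 bin doubles the side length

android_range = (1.1, 1.8)       # premium Android range quoted above

print(f"Native 48MP pixel pitch: {native_pitch:.2f} µm")
for pitch in android_range:
    area_ratio = (binned_pitch / pitch) ** 2
    print(f"2.44 µm vs {pitch} µm: {area_ratio:.1f}x the light-gathering area")
```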

Without pixel-binning, the 48MP sensor would – most of the time – be a downgrade.
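That follows from simple arithmetic. A sketch, assuming the “65% larger” figure refers to sensor area and that pixels are spread evenly across the sensor:

```python
# Per-pixel area when pixel count and sensor area change together.
# Ratios are relative to last year's 12MP main sensor.

sensor_area_ratio = 1.65       # sensor is 65% larger (assumed: by area)
pixel_count_ratio = 48 / 12    # four times as many pixels

per_pixel_area = sensor_area_ratio / pixel_count_ratio
print(f"Each native 48MP pixel: {per_pixel_area:.0%} of last year's pixel area")

# Binning four pixels into one gives a 12MP image with larger pixels than before.
binned_area = per_pixel_area * 4
print(f"Each binned 12MP pixel: {binned_area:.0%} of last year's pixel area")
```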

#3: Photonic Engine

Smartphone cameras can’t compete with standalone cameras in terms of optics and physics, but where they can compete is in computational photography.

Computational photography has been used in SLRs for literally decades. When you switch metering modes, for example, you are instructing the computer inside your DSLR to interpret the raw data from the sensor in a different way. Similarly, in consumer DSLRs and all mirrorless cameras, you can select from a variety of photo modes, which again tells the microprocessor how to adjust the data from the sensor to achieve the desired result.

So computational photography already plays a much bigger role in standalone cameras than many realize. And Apple is very, very good at computational photography. (Ok, it’s not yet good at Cinematic video, but give it a few years …)

The Photonic Engine is the dedicated chip which powers Apple’s Deep Fusion approach to computational photography, and I’m already seeing a huge difference in the dynamic range in photos. (Examples to follow in an iPhone 14 Diary piece next week.) The difference is not just in the range itself, but in the intelligent decisions being made about which shadows to bring out, and which highlights to tame.

The result is significantly better photos, which have as much to do with the software as the hardware.

Wrap-up

A dramatically bigger sensor (in smartphone terms) is a really big deal when it comes to image quality.

Pixel-binning means that Apple has effectively created a much larger 12MP sensor for most photos, allowing the benefits of the larger sensor to be realized.

The Photonic Engine means a dedicated chip for image processing. I’m already seeing the real-life benefits of this.

More to follow in an iPhone 14 Diary piece, when I put the camera to a more extensive test over the next few days.

Author: Ben Lovejoy

Source: 9TO5Google