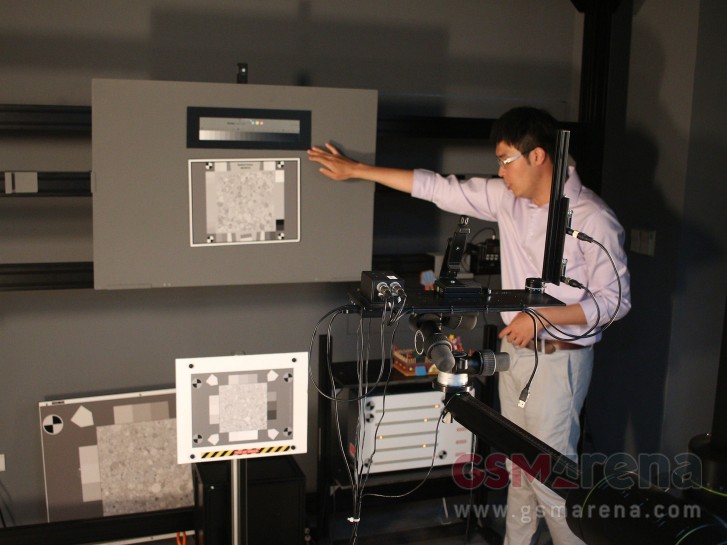

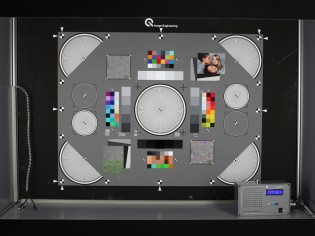

Last week, we had the opportunity to visit the OnePlus camera lab in Taipei, Taiwan. There we saw how the test engineers run the camera through a range of tests to check that it meets their standards, along with the equipment they use to do it.

We also had a chance to speak to Zake Zhang, Image Product Manager at OnePlus. The following is from a one-on-one conversation in which we talked about how the camera on the latest OnePlus 7 Pro came about, how some of its technology works, what difficulties the team faced and what they plan to do going forward. There are also some images from the hardware testing division at the OnePlus camera lab.

Going from the OnePlus 6T to the 7 Pro, what were the key areas of improvement that the team focused on?

With the OnePlus 6T, we did not put a lot of emphasis on the camera. The OnePlus 7 Pro was the first device where we decided to go all in and compete with the real flagships on camera. Pete (Lau) set the team a goal of creating the best camera on any smartphone. That is our long-term target, and the OnePlus 7 Pro is the first device where we started using the best hardware on the market, maximizing its capability with software and continuing to improve it with future updates.

Will future devices have similar focus on the camera?

Yes. All of us here, including Pete, agree that the camera is a huge part of a smartphone, and in our future devices it will definitely be one of the most important aspects, if not the most important.

How big is the current camera team at OnePlus?

We currently have about 80 people in the camera team.

Are all of them based here in Taiwan?

No, we have people in multiple locations including Taipei, Shenzhen, Beijing, Nanjing and Hyderabad.

Automated Objective Test: Runs automated objective image quality tests and can generate about 18,000 test results per day. Helps guarantee stable image quality across multiple scenarios.

What are the main steps involved in the camera testing process, and how does OnePlus arrive at the final look of the images?

First, the camera tuning team needs to bridge the gap between the camera hardware and the Qualcomm platform to make sure the camera works. Once the camera is running, we need to figure out things like how to get stabilization working, how to capture multiple frames and merge them together, and how to handle denoising. This goes on for a while and takes about half a year.

There are multiple rounds of tuning. Once one round is finished, the test engineers get involved and take the phone, along with other flagship devices, to shoot in over 1,000 scenes in different locations, including Taiwan, China and the new test location we are building in Germany right now. They take the photos, compare the results and decide what needs to be improved under each condition. Then the team tunes again, and the process repeats until the product launch.

Before the launch we do a test within the team where everybody gets the latest update and can try the newest software. This way everyone from the team, including Pete himself, can get involved in the process of giving feedback and making final changes before releasing to the public.

As for the look of the image, we definitely want to have our own style, which is a natural look but with emotion. It is our main focus and part of our internal white paper called Philosophy of Image, which Pete and everybody else on the team have agreed upon. We follow this philosophy and use it to get the most out of the hardware at our disposal.

What aspect of the camera development process is the most challenging?

I think the most challenging part for us is how to balance the resources with a small team and make decisions on the most important things that need to be focused on. We are not like some of the big companies who may have over 800 people in their camera team. We only have 80 so we need to focus on things that are the top priority.

Which particular area of the camera performance do you think you still need to improve on?

We have always been improving our software with updates. Right now we are focusing on how to get the most out of multi-frame processing together with HDR and scene detection, and on how to improve the numbers that make the phone look good on paper while also making the images more visually appealing to people. We will also be focusing on scene detection, which is the basis for a lot of our algorithms.

Why did the device not have the DxOMark firmware at launch?

The software that we had on Day 1 was the same as the one DxOMark tested, so everyone got the software that DxOMark rated. But even though DxOMark gave us a good score, people weren’t happy with the camera. So based on people’s feedback, we had to change various parameters like contrast and color to make the images more visually appealing to our customers.

Do you think the DxOMark score still stands after all the changes?

Yes.

What caused the delay of video capture on the ultra wide and telephoto lenses on the 7 Pro?

It’s due to the limited resources we have.

(It has been confirmed that this feature is coming in a later update.)

What’s the technology behind Nightscape?

There are two main Nightscape modes that the phone switches between internally. One is a handheld mode with a shorter exposure of 2-3 seconds. The other is a tripod mode, which the phone switches to if it detects that it is stabilized. This mode can take photos with exposures of up to 40 seconds.
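The mode switch described above can be pictured as a simple stability check. This is a minimal sketch, not OnePlus’s implementation: the gyroscope-based test, the `STABLE_GYRO_RAD_S` threshold and the `choose_mode` helper are hypothetical. Only the roughly 3-second and 40-second exposure figures come from the interview.

```python
from dataclasses import dataclass

# Hypothetical stability threshold in rad/s. The interview does not say how
# the phone detects a tripod; a gyroscope check is only an assumption here.
STABLE_GYRO_RAD_S = 0.005


@dataclass(frozen=True)
class NightscapeMode:
    name: str
    exposure_budget_s: float  # approximate exposure figure quoted in the interview


HANDHELD = NightscapeMode("handheld", 3.0)  # "2-3 seconds"
TRIPOD = NightscapeMode("tripod", 40.0)     # "up to 40 seconds"


def choose_mode(gyro_magnitudes_rad_s: list[float]) -> NightscapeMode:
    """Return tripod mode only if every recent angular-velocity reading is tiny."""
    if gyro_magnitudes_rad_s and max(gyro_magnitudes_rad_s) < STABLE_GYRO_RAD_S:
        return TRIPOD
    return HANDHELD


if __name__ == "__main__":
    print(choose_mode([0.001, 0.0008, 0.0012]).name)  # tripod
    print(choose_mode([0.05, 0.2, 0.01]).name)        # handheld
```

Checking the maximum reading rather than the spread of readings is a deliberate choice in this sketch: a phone rotating at a steady rate would show little variation but is clearly not on a tripod.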

The handheld mode takes multiple images with short exposure times and merges them together. The tripod mode takes three kinds of photos: multiple short-exposure images, then multiple medium-exposure images, and then multiple long-exposure images. All of these images, which add up to a lot of data, are then merged to create a photo with the best contrast and shadow and highlight detail.
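To make the merge step concrete, here is a minimal sketch of a generic multi-exposure merge, assuming the frames are already aligned and pixel values are linear in [0, 1]. It is a textbook weighted radiance merge (average each burst to cut noise, divide by exposure time, then favor mid-tones over clipped or near-black pixels), not OnePlus’s Nightscape algorithm. `merge_exposures`, `hat_weight` and the exposure times in the example are illustrative. It also stops before tone mapping, which a real pipeline needs to turn the radiance estimate back into a viewable photo.

```python
# Generic weighted multi-exposure merge (a textbook HDR technique, not
# OnePlus's actual Nightscape code). Frames are assumed to be aligned,
# linear, and normalized to [0, 1]; images are flattened lists of pixels.

EPS = 1e-6  # keeps weights positive so fully clipped pixels still get a value


def average_frames(frames: list[list[float]]) -> list[float]:
    """Average a burst of same-exposure frames pixel by pixel to reduce noise."""
    n = len(frames)
    return [sum(pixel) / n for pixel in zip(*frames)]


def hat_weight(value: float) -> float:
    """Trust mid-tones most; near-black (noisy) and near-white (clipped) get ~0."""
    return max(0.0, 1.0 - abs(2.0 * value - 1.0))


def merge_exposures(groups: list[tuple[float, list[list[float]]]]) -> list[float]:
    """Merge (exposure_seconds, frames) groups into a relative radiance estimate."""
    averaged = [(t, average_frames(frames)) for t, frames in groups]
    n_pixels = len(averaged[0][1])
    radiance = []
    for i in range(n_pixels):
        num = den = 0.0
        for exposure_s, image in averaged:
            w = hat_weight(image[i]) + EPS
            num += w * image[i] / exposure_s  # put every group on one scale
            den += w
        radiance.append(num / den)
    return radiance


if __name__ == "__main__":
    # Two pixels: a dark one (true radiance 0.05) and a bright one (2.0).
    short_burst = (0.25, [[0.0125, 0.5], [0.0125, 0.5]])
    medium_burst = (1.0, [[0.05, 1.0], [0.05, 1.0]])  # bright pixel clipped
    long_burst = (4.0, [[0.2, 1.0], [0.2, 1.0]])      # bright pixel clipped
    print(merge_exposures([short_burst, medium_burst, long_burst]))  # roughly [0.05, 2.0]
```

In the example, the bright pixel’s value is recovered almost entirely from the short burst, because the clipped medium and long frames receive near-zero weight, while the dark pixel draws most heavily on the longer exposures.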

Currently, Nightscape only works with the main 48MP camera. Can we expect Nightscape to also work on the other cameras on the OnePlus 7 Pro?

We are working on bringing the Nightscape feature to the other cameras in a future update.

Camera 3A development lab: Tests the three As: auto exposure, auto white balance and autofocus.

Can we expect higher than 1080p recording on the front camera?

The selfie camera isn’t something we focused on heavily in the past, but we have decided to work on it and improve it in future products, where you will see better hardware and software.

Why are RAW images limited to 12MP resolution?

With Pro mode, we want the best-quality images, and we noticed that with this sensor, 48MP did not yield the best results, especially in low light. So we decided to stick to 12MP and use pixel binning to get the best out of the sensor.
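The arithmetic behind the 12MP choice is simple. Binning merges each 2×2 block of pixels into one, so a 48MP readout becomes 12MP, and averaging four samples with independent random noise halves that noise’s standard deviation. The sketch below works on a single color plane. The `bin_2x2` and `binned_resolution` helpers are hypothetical, and the 8000 × 6000 readout size is an assumption. On sensors of this class, binning is typically done in hardware or in the image signal processor rather than in application code.

```python
def bin_2x2(plane: list[list[float]]) -> list[list[float]]:
    """Average each non-overlapping 2x2 block of one color plane into one pixel."""
    height, width = len(plane), len(plane[0])
    if height % 2 or width % 2:
        raise ValueError("plane dimensions must be even")
    return [
        [
            (plane[y][x] + plane[y][x + 1] + plane[y + 1][x] + plane[y + 1][x + 1]) / 4
            for x in range(0, width, 2)
        ]
        for y in range(0, height, 2)
    ]


def binned_resolution(width: int, height: int) -> tuple[int, int]:
    """Output size after 2x2 binning: a quarter of the original pixel count."""
    return width // 2, height // 2


if __name__ == "__main__":
    # Assumed 8000 x 6000 (48MP) readout; the interview only says "48MP" and "12MP".
    w, h = binned_resolution(8000, 6000)
    print(w, h, w * h)  # 4000 3000 12000000
    print(bin_2x2([[1, 3, 5, 7], [1, 3, 5, 7]]))  # [[2.0, 6.0]]
```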

Can we expect wide color capture of photos and videos and/or HDR video capture in the future on the 7 Pro?

We have been discussing the DCI-P3 color space internally for a while now. We haven’t implemented it because, while it is easy for a company like Apple to do so in its closed ecosystem, where it can support wide color on all its devices, it’s difficult for us: a wide-color photo shot on our phone may not look good when viewed on another device that does not support that color space.

As for HDR, the current HDR video implementations on other smartphones are not ideal, especially when you send an HDR video to another device, which can cause problems if that device does not support HDR. So we are focusing on improving video quality while maintaining compatibility. We will consider HDR when we think it’s the right time to introduce it to the audience, but right now we are definitely working on something else that can improve video quality.

What is the reason behind the string of camera related updates weeks after the launch of the device? Could these not be included in the initial release with the launch of the device?

The main reason is resources; we currently have very limited resources. We try to make sure our best software is available to users on launch day. The majority of the features we have at launch work, and work pretty well, but some features aren’t working quite as well yet, and because our test engineers are very strict, they do not allow us to ship those features at launch. That is why you see some features arriving in a later update.

Would you say the issues with the camera are largely related to the size of the team? Would you have a better product on your hands if you had a larger team at your disposal?

If we had more people on board we would have more resources to put into different projects and also different aspects of the development process.

But it’s not necessarily related to just the size of the team. Only if we hire the right people will we be able to solve our problems; we don’t just need to keep hiring more people. Right now we have our own direction, and our long-term goal is to bring in people who are aligned with our vision.

Are you planning on expanding your camera team?

Yes, we are expanding our team. We are currently hiring more people, both in Taipei and Shenzhen.

Author: Prasad

Source: GSMArena
