Google, Apple, Meta, and various other companies believe that AR smart glasses will be the next major form factor after the smartphone. Humane, a secretive startup founded by ex-Apple employees, appears to be taking a different approach: what is essentially an Android-powered wearable camera that uses lasers to project a screen onto any surface, including the palm of your hand.

Humane was founded by former Apple software director Bethany Bongiorno and design lead Imran Chaudhri. The latter worked at Apple for over two decades (1995-2016) and was on the Human Interface Team, while the former was in charge of iOS and macOS software engineering from 2008 until she also left in 2016.

The startup has been very secretive about its work, but has made various bold pronouncements about “building innovative technology that feels familiar, natural, and human” and its “mission to reimagine computing.” A key part of that is making “Products that put us back in touch with ourselves, each other, and the world around us.”

According to a patent (“Wearable multimedia device and cloud computing platform with laser projection system”) published in 2020, all that marketing copy might translate into a body-worn device that — most importantly — “does not include a display, allowing the user to continue interacting with friends, family and co workers without being immersed in a display.” In fact, “minimal user interaction” is the goal.

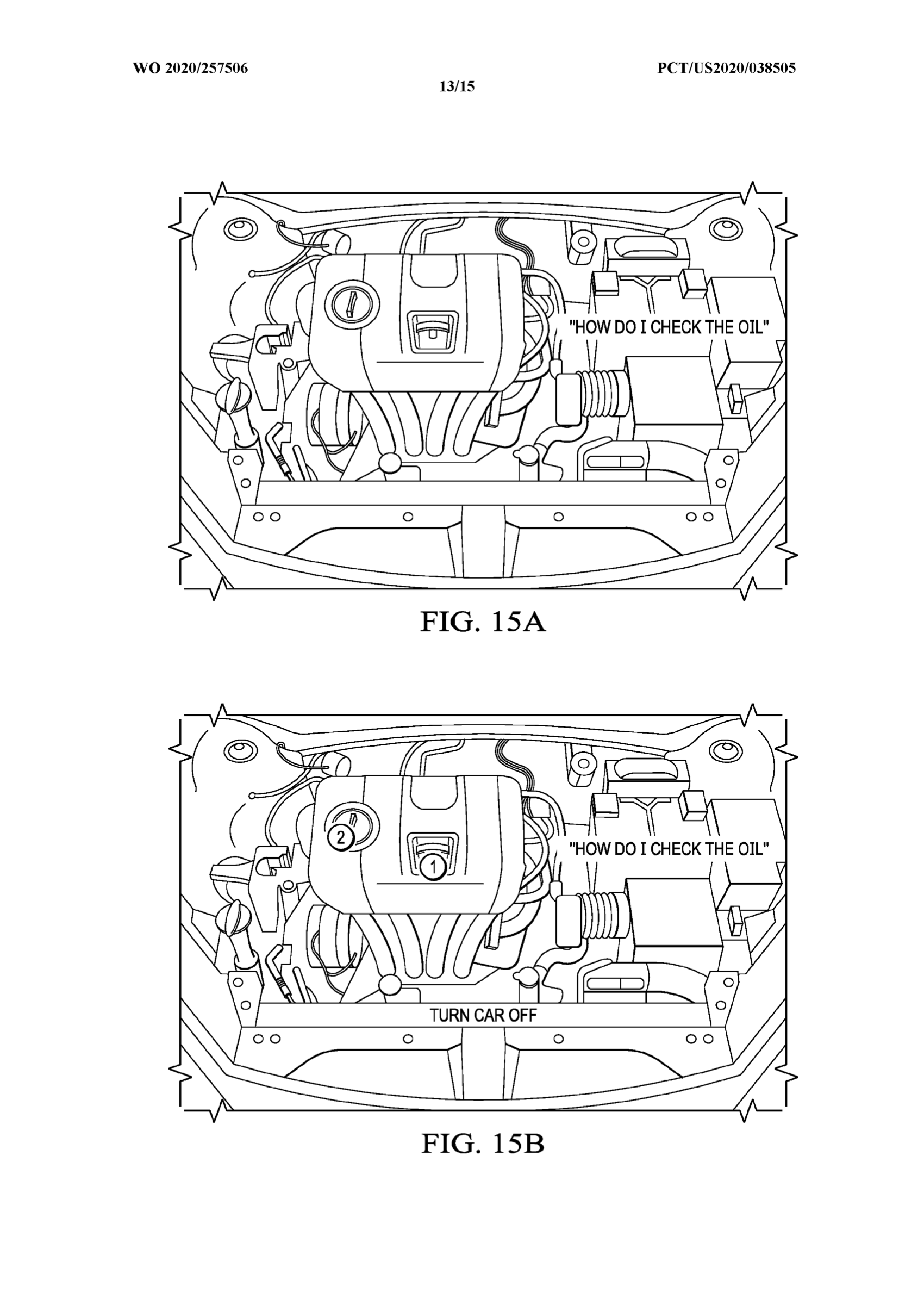

Rather, the “laser projection system” makes any surface into a display that disappears when it is no longer needed. This, according to patent drawings, includes having the time/date, numpad, turn-by-turn directions, and temperature/thermostat controls projected onto the palm of your hand. Other examples show information overlaid – in true augmented reality fashion – as you’re cooking or working on your car engine.

“The laser projection can label objects, provide text or instructions related to the objects and provide an ephemeral user interface (e.g., a keyboard, numeric key pad, device controller) that allows the user to compose messages, control other devices, or simply share and discuss content with others.”

Besides laser projection, this “body-worn apparatus” features a camera (“180° FOV with OIS” is mentioned as an example specification), 3D camera, and depth sensor (LiDAR or Time of Flight), which are used to recognize “air gestures” made with your hand and identify objects in the real world, like Google Lens.

“In an embodiment, the cloud computing platform can be driven by context-based gestures (e.g., air gesture) in combination with speech queries, such as the user pointing to an object in their environment and saying: ‘What is that building?’ The cloud computing platform uses the air gesture to narrow the scope of the viewport of the camera and isolate the building.”
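As a rough illustration of what "narrowing the scope of the viewport" could mean in practice, the Python sketch below crops a camera frame around the spot a pointing gesture indicates. The filing does not describe an implementation, so everything here is an assumption made for illustration: the function name `narrow_viewport`, the normalized pointing coordinates, and the `scale` parameter are all hypothetical.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """Pixel crop box; right and bottom are exclusive."""
    left: int
    top: int
    right: int
    bottom: int


def narrow_viewport(frame_w: int, frame_h: int,
                    point_x: float, point_y: float,
                    scale: float = 0.25) -> Box:
    """Return a crop box centered on the pointed-at location.

    Hypothetical helper, not taken from the patent. point_x and point_y
    are assumed to be normalized to [0, 1]; scale is the fraction of the
    frame kept along each axis.
    """
    if not (0.0 <= point_x <= 1.0 and 0.0 <= point_y <= 1.0):
        raise ValueError("pointing location must be normalized to [0, 1]")
    if not (0.0 < scale <= 1.0):
        raise ValueError("scale must be in (0, 1]")

    box_w = max(1, round(frame_w * scale))
    box_h = max(1, round(frame_h * scale))

    # Center the box on the target, then shift it back inside the frame
    # if it would cross an edge (the box is moved, not shrunk).
    left = round(point_x * frame_w - box_w / 2)
    top = round(point_y * frame_h - box_h / 2)
    left = min(max(left, 0), frame_w - box_w)
    top = min(max(top, 0), frame_h - box_h)

    return Box(left, top, left + box_w, top + box_h)
```

Calling `narrow_viewport(1920, 1080, 0.5, 0.5)` keeps the central quarter of each axis of a 1080p frame. A real system would presumably pass the cropped region to an object recognizer alongside the speech query, but that step is outside what this sketch covers.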

The camera can also act like a normal recording device with a tap on the “wearable multimedia device.”

In terms of specs, Humane’s “wearable multimedia device” will run on Android, as evidenced by open job positions, including System Software Engineer, Android Framework:

What You Might Do

- Customize and extend AOSP for a new product category

Essential Qualifications

- 2+ years experience developing Java, JNI, and C++ code for Android devices

- Familiarity with the Android Framework and HALs

- Experience developing in Android RIL/Telephony

Preferred Skills

- Experience customizing AOSP for new product categories

- Experience with Android Telephony, Wireless, or Multimedia frameworks

- Experience with embedded Linux/Android board bringup

As such, Humane doesn’t have to create a whole new operating system. Meta just halted work on its own in favor of continuing to use Android, while Google has just spun up a team to create an “Augmented Reality OS.”

Humane intends to build out a third-party app ecosystem:

“Some example applications can include, but are not limited to: personal live broadcasting (e.g., Instagram™ Life, Snapchat™), senior monitoring (e.g., to ensure that a loved one has taken their medicine), memory recall (e.g., showing a child’s soccer game from last week) and personal guide (e.g., AI enabled personal guide that knows the location of the user and guides the user to perform an action).”

Lastly, of all the open hardware positions, Humane is not hiring any of the display engineers you’d expect for traditional AR smart glasses.

In terms of power, the cameras, depth sensor, and projection system obviously have to face the world, but there will be an external battery hidden underneath your clothes, such as a jacket. That pack and the front portion connect via magnets, which are also what hold the device to your clothing, and the external pack wirelessly charges the internal battery. This design has also been patented.

“A user magnetically fastens the wearable multimedia device to their clothing by placing the portable battery pack underneath their clothing and placing the wearable multimedia device on top of portable battery pack outside their clothing, such that permanent magnets attract each other through the clothing.”

Meanwhile, the patent filing features a diagram that suggests the Humane device will be powered by a “Qualcomm Snapdragon 8xx” chip. As a caveat, this application is now several years old, but the use of a flagship smartphone chip rather than the more dedicated XR1/XR2 chips for AR/VR is interesting. This could just be the development platform, though Qualcomm Ventures LLC did participate in Humane’s $100 million Series B round last year.

Meanwhile, the “X55 (4/5G modem)” means the device will be truly independent and not require a smartphone. There’s also a microphone for voice commands (with a digital assistant possible), while GPS and inertial sensors (like accelerometers or gyroscopes) help determine the “location and orientation of the user wearing the device when the context data was captured.”

The patent broadly mentions possibly having a heart rate sensor or fingerprint monitor, while the device would be able to connect to headphones.

That September 2021 funding was meant to “enable Humane to scale its operations.” Not having a persistent display is certainly novel and clever, given that AR screen technology is one of the biggest technical barriers (along with power and heat constraints) to smart glasses coming to market.

However, Humane is relying on an equally unproven interaction method that people will be largely unfamiliar with, even assuming the projection technology is clear and works perfectly. A body-worn device might also be a barrier to adoption if the utility it provides does not outweigh the social stigma of having a camera constantly pointed at the world.

It’s unclear when Humane plans to announce definitive details, which might have changed from the above filing. Patents are always broad and not indicative of the final product, but the marketing and hiring pieces are starting to line up. In a statement, a company spokesperson had the following to say:

“We have many ideas and we will always protect our intellectual property. Some of those ideas may become products, others may not. Expect to see more patent filings from us in the future.”

That said, the lack of a permanent display seems to be a cornerstone of Humane’s pitch for technology that is less distracting, and that’s unlikely to change.

Author: Abner Li

Source: 9TO5Google