In teasing the Pixel 6 at the start of August, Google very much framed the preview around its first custom-built System on a Chip (SoC). At the Pixel Fall Event today, Google fully detailed Tensor and called it the “biggest mobile hardware innovation in the history of the company.”

Why Tensor

Google’s stated goal for building Tensor is to push what’s possible on smartphones. The company wants to bring “AI breakthroughs directly to Pixel” and drive its vision of always-available technology, i.e. Ambient Computing.

The former is born out of Google’s hardware division believing that AI-backed smart features are how it can differentiate Pixel against competitors, while Google considers phones the “central control device of an ambient system.” The Pixel Launch Event notably saw Google talk about Ambient Computing again. The last time that occurred in a significant manner was the 2019 Pixel 4 launch.

In an interview with The Verge, Rick Osterloh said that work started in 2017 after coming to the realization that Google couldn’t take a piecemeal approach — like building a single co-processor, e.g. Pixel Visual/Neural Core — to boost AI models. Rather, an entire chip that’s optimized for desired tasks is needed.

The Tensor chip is specifically designed to offer Google’s latest advances in AI directly on a mobile device. This is an area where we’ve been held back for years, but now, we’re able to open a new chapter in AI-driven smartphone innovation.

The Tensor CPU + GPU

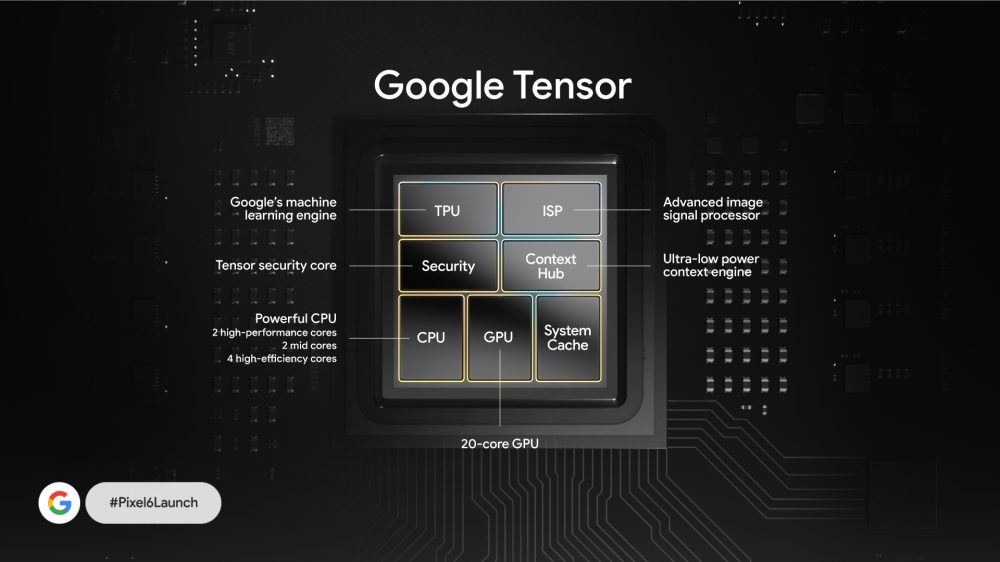

At the Pixel Launch Event, Google went into Tensor and explicitly touted the inclusion of two high-performance ARM Cortex-X1 cores at 2.8 GHz. They are joined by two “mid” 2.25 GHz A76 CPU cores, with Ars Technica’s Google Silicon interview pointing out how they are based on a 5nm process rather than the 7nm original found in flagship phone chips last year. Four high-efficiency/small A55 cores round out the CPU.
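For reference, the CPU layout described above can be written down as a small data structure. This is only an illustrative sketch: the `CoreCluster` type and `total_cores` helper are hypothetical names, the core counts and clocks are the ones quoted in this article, and the A55 clock is left unset because it is not given here.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class CoreCluster:
    """Hypothetical record for one CPU cluster (not a Google or Arm API)."""
    name: str                 # core design as named in the article
    count: int                # number of cores in the cluster
    max_ghz: Optional[float]  # peak clock; None where the article gives none


# Figures taken from the article text; the A55 clock is not stated, so it stays None.
TENSOR_CPU: List[CoreCluster] = [
    CoreCluster("Cortex-X1", 2, 2.8),    # high-performance cores
    CoreCluster("Cortex-A76", 2, 2.25),  # "mid" cores
    CoreCluster("Cortex-A55", 4, None),  # high-efficiency cores
]


def total_cores(clusters: List[CoreCluster]) -> int:
    """Sum the core counts across all clusters."""
    return sum(cluster.count for cluster in clusters)


print(total_cores(TENSOR_CPU))  # 8
```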

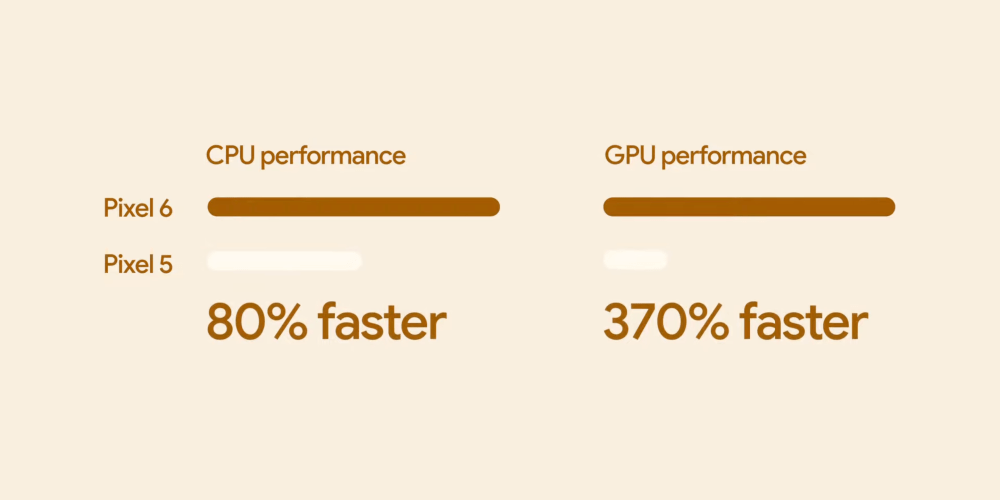

The dual-X1 approach allows Google to throw more power at workloads that are of medium intensity. In a normal CPU, the mid cores would handle such tasks, like Google Lens visual analysis, but be “maxed out.” Google says using two X1 cores in that scenario would be more efficient, and that’s what Tensor is optimized for. In real terms, Google says the CPU is 80% faster than the Pixel 5’s Snapdragon 765G.

“You might use the two X1s dialed down in frequency so they’re ultra-efficient, but they’re still at a workload that’s pretty heavy. A workload that you normally would have done with dual A76s, maxed out, is now barely tapping the gas with dual X1s.”

Phil Carmack, VP and GM of Google Silicon

There’s also a 20-core GPU that Google says “delivers a premium gaming experience for the most popular Android games.” It is 370% faster than the Pixel 5, which uses the Adreno 620 GPU.
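To put the two headline percentages in perspective, here is a quick sketch that converts “N% faster” into a multiplier. It assumes the common reading that “N% faster” means (1 + N/100) times the baseline performance; Google has not spelled out how the figures were measured, and the function name is made up for illustration.

```python
def percent_faster_to_multiplier(percent_faster: float) -> float:
    """Convert 'N% faster' into a performance multiplier.

    Assumption: 'N% faster' is read as (1 + N/100) times the baseline.
    """
    return 1.0 + percent_faster / 100.0


cpu = percent_faster_to_multiplier(80)   # CPU claim vs. the Pixel 5
gpu = percent_faster_to_multiplier(370)  # GPU claim vs. the Pixel 5

print(f"CPU: {cpu:.1f}x, GPU: {gpu:.1f}x")  # CPU: 1.8x, GPU: 4.7x
```

Under that reading, the claims work out to roughly 1.8 times the Pixel 5’s CPU performance and 4.7 times its GPU performance.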

Tensor security ft. Titan M2

Meanwhile, the Tensor security core is a CPU-based subsystem that’s isolated from the application processor and dedicated to running sensitive tasks and controls. It works with the dedicated Titan M2 security chip, which is not part of Tensor and which Google touts as being resilient to advanced attacks like electromagnetic analysis, voltage glitching, and laser fault injection.

The original Titan M chip works, in conjunction with software, to stop your phone from being rolled back to an older version of Android that might have security vulnerabilities. It also prevents bootloader unlocking and verifies your lockscreen passcode.

TPU, ISP, and Context Hub

There’s also, of course, the “Tensor Processing Unit.” This ML engine is said to be “custom-made by Google Research for Google Research,” and built around where “ML models are heading, not where they are today.”

The Image Signal Processor (ISP) features an accelerator that runs the HDRNet algorithm more efficiently, a key reason the Pixel 6 and Pixel 6 Pro can do Live HDR+ Video at 4K 60FPS.

The Context Hub brings “machine learning to the ultra-low power domain.” It allows the always-on display (AOD), Now Playing, and other “ambient experiences” to “run all the time, without draining your battery.”

All together now, or heterogeneous computing

All these components together make up Tensor, with Google prioritizing “total performance and efficiency.” This specifically involves excelling at heterogeneous computing tasks that require various parts of the SoC to work together. For example, Lens makes use of the CPU, GPU, ISP, and TPU to run efficiently.

As software applications on mobile phones become more complex, they run on multiple parts of the chip. This is heterogeneous computing. To get good performance for these complex applications, we made system-level decisions for the SoC. We ensured different subsystems inside Tensor work really well together, rather than optimizing individual elements for peak speeds.

What Tensor can do

Besides Live HDR+ video at 4K60, which makes colors more accurate and vivid, Tensor enables other computational photography and video features like Motion Mode in Google Camera. Action Pan blurs the background, while Long Exposure works on the subject.

Meanwhile, face detection on the Pixel 6 is more accurate and works faster thanks to the integrated subsystems, while consuming half the power compared to a Pixel 5.

Assistant on Tensor is using the “most advanced speech recognition model ever released by Google” at, again, half the power. The high-quality ASR (automatic speech recognition) model is used to transcribe voice commands, as well as in long-running applications like Recorder and Live Caption “without quickly draining the battery.”

Meanwhile, there’s Assistant voice typing for editing what you just transcribed in an entirely hands-free manner, and Live Translate, with the Pixel’s translation quality improving by 18%, “a level of improvement that typically takes multiple years of research”:

Google Tensor also enables Live Translate to work on media like videos using on-device speech and translation models. Compared to previous models on Pixel 4 phones, the new on-device neural machine translation (NMT) model uses less than half the power when running on Google Tensor.

Tensor’s future

Google is not giving Tensor a generation signifier at launch, but the company will presumably append a number on the next version. (For example, the Titan M is succeeded by the Titan M2.)

There’s no doubt that Google is making more chips for phones (and other form factors are rumored). SVP Rick Osterloh said as much at the event:

Tensor also gives us a hardware foundation that we’ll be building on for years to come, so you get the personal, helpful experiences you’d expect from a Google phone.

Author: Abner Li

Source: 9TO5Google