The world speaks thousands of languages, roughly 6,500 in all, and systems from the likes of Google, Facebook, Apple, and Amazon become better at recognizing them each day. The trouble is that not all of those languages have large corpora available, which makes it difficult to train the data-hungry models underpinning those systems.

That’s the reason Google researchers are exploring techniques that apply knowledge from data-rich languages to data-scarce languages. The work has borne fruit in the form of a multilingual speech recognizer that learns to transcribe multiple tongues, which was recently detailed in a preprint paper accepted at the Interspeech 2019 conference in Graz, Austria. The coauthors say that their single end-to-end model recognizes nine Indian languages (Hindi, Marathi, Urdu, Bengali, Tamil, Telugu, Kannada, Malayalam, and Gujarati) highly accurately, while at the same time demonstrating a “dramatic” improvement in automatic speech recognition (ASR) quality.

“For this study, we focused on India, an inherently multilingual society where there are more than thirty languages with at least a million native speakers. Many of these languages overlap in acoustic and lexical content due to the geographic proximity of the native speakers and shared cultural history,” explained lead coauthors and Google Research software engineers Arindrima Datta and Anjuli Kannan in a blog post. “Additionally, many Indians are bilingual or trilingual, making the use of multiple languages within a conversation a common phenomenon, and a natural case for training a single multilingual model.”

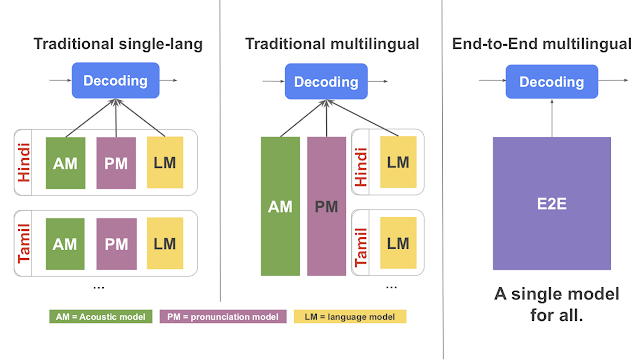

Above: A comparison of conventional ASR system architectures to that of Google’s end-to-end model.

Unlike conventional ASR pipelines, the researchers’ end-to-end architecture folds the acoustic, pronunciation, and language components into a single model. Prior multilingual ASR work did the same, but without addressing real-time speech recognition. By contrast, the model proposed by Datta, Kannan, and colleagues taps a recurrent neural network transducer adapted to output words in multiple languages one character at a time.
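To make the "one character at a time" idea concrete, here is a minimal sketch of a greedy transducer-style decoding loop. It is not Google's implementation: `greedy_decode`, `toy_score_fn`, the `BLANK` symbol, and the per-frame cap are all hypothetical names chosen for illustration, and the scoring function is a hand-written stand-in for a trained network.

```python
from typing import Callable, Dict, List, Sequence

# Hypothetical blank symbol: "emit nothing and advance to the next audio frame".
BLANK = ""

# A scoring function maps (current frame, characters emitted so far)
# to a score for every candidate output symbol, including BLANK.
ScoreFn = Callable[[str, List[str]], Dict[str, float]]


def greedy_decode(frames: Sequence[str], score_fn: ScoreFn,
                  max_per_frame: int = 4) -> str:
    """Consume frames in order, emitting characters as soon as they win."""
    history: List[str] = []
    for frame in frames:
        # Several characters may be emitted for one frame; BLANK ends the frame.
        for _ in range(max_per_frame):
            scores = score_fn(frame, history)
            best = max(scores, key=scores.get)
            if best == BLANK:
                break
            history.append(best)
    return "".join(history)


def toy_score_fn(frame: str, history: List[str]) -> Dict[str, float]:
    """Stand-in for a trained model (assumption): each toy frame is simply
    labelled with the character it carries, or "" for silence."""
    if frame and (not history or history[-1] != frame):
        return {frame: 0.9, BLANK: 0.1}
    return {BLANK: 1.0}
```

Because output is produced frame by frame rather than after the whole utterance, a loop of this shape can run while audio is still arriving. The output alphabet is simply whatever characters the scorer can return, so a single loop can emit text in several scripts, for example `greedy_decode(["न", "", "म"], toy_score_fn)`.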

To mitigate bias arising from small data sets of transcribed languages, the researchers modified the system architecture to include an extra language identifier input: an external signal derived from the language locale of the training data. (One example: the language preference set in a smartphone.) Combined with the audio input, it enabled the model to disambiguate a given language and learn separate features for separate languages as needed.
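One simple way to combine a language identifier with the audio input is to append a fixed-length language vector to every acoustic frame. The sketch below assumes a one-hot encoding and uses hypothetical names (`LANGUAGES`, `language_one_hot`, `add_language_id`); the article does not say exactly how the researchers encoded or injected the signal.

```python
from typing import List

# Hypothetical locale codes for the nine languages; order fixes the one-hot index.
LANGUAGES = ["hi", "mr", "ur", "bn", "ta", "te", "kn", "ml", "gu"]


def language_one_hot(lang: str) -> List[float]:
    """Return a vector with 1.0 at the language's index and 0.0 elsewhere."""
    vec = [0.0] * len(LANGUAGES)
    vec[LANGUAGES.index(lang)] = 1.0  # raises ValueError for unknown locales
    return vec


def add_language_id(frames: List[List[float]], lang: str) -> List[List[float]]:
    """Append the same language vector to every acoustic feature frame."""
    lang_vec = language_one_hot(lang)
    return [frame + lang_vec for frame in frames]
```

For example, `add_language_id([[0.1, 0.2], [0.3, 0.4]], "ta")` returns two frames of length 11: the two original features followed by nine language slots, with only the Tamil slot set.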

The team further augmented the model by allocating additional parameters per language in the form of residual adapter modules, which helped to fine-tune the shared global model for each language and improve overall performance. The end result is a multilingual system that outperforms the single-language recognizers, and that simplifies training and serving while meeting the latency requirements for applications like Google Assistant.
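A residual adapter is typically a small per-language block whose output is added back to the shared layer's output, so the shared weights can stay fixed while each language gets a few extra parameters. The sketch below is a simplified illustration, not the paper's module: the bottleneck shape, the ReLU, the zero initialization, and the names `ResidualAdapter`, `build_adapters`, and `apply_adapter` are all assumptions.

```python
import random
from typing import Dict, List

Vector = List[float]
Matrix = List[List[float]]


def matvec(matrix: Matrix, vector: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class ResidualAdapter:
    """Small bottleneck block: output = x + up(relu(down(x)))."""

    def __init__(self, dim: int, bottleneck: int, rng: random.Random) -> None:
        # Down-projection gets small random weights.
        self.down: Matrix = [[rng.gauss(0.0, 0.1) for _ in range(dim)]
                             for _ in range(bottleneck)]
        # Up-projection starts at zero, so a fresh adapter is the identity.
        self.up: Matrix = [[0.0] * bottleneck for _ in range(dim)]

    def __call__(self, x: Vector) -> Vector:
        hidden = [max(0.0, h) for h in matvec(self.down, x)]  # ReLU
        delta = matvec(self.up, hidden)
        return [xi + di for xi, di in zip(x, delta)]  # residual connection


def build_adapters(languages: List[str], dim: int, bottleneck: int,
                   seed: int = 0) -> Dict[str, ResidualAdapter]:
    """Create one independent adapter per language."""
    rng = random.Random(seed)
    return {lang: ResidualAdapter(dim, bottleneck, rng) for lang in languages}


def apply_adapter(shared_output: Vector, lang: str,
                  adapters: Dict[str, ResidualAdapter]) -> Vector:
    """Route the shared layer's output through the adapter for `lang`."""
    return adapters[lang](shared_output)
```

With the zero-initialized up-projection, every adapter initially passes the shared output through unchanged, and training one language's adapter leaves the others untouched. The added parameter count per language is roughly `2 * dim * bottleneck`, which is small when the bottleneck is narrow.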

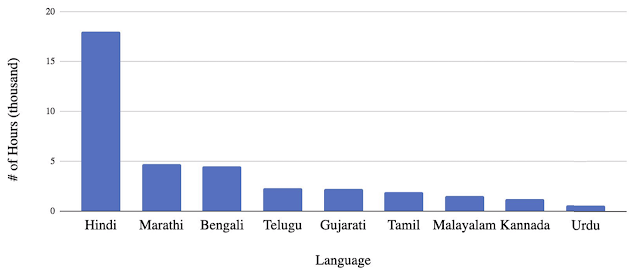

Above: Chart of training data for the nine languages Google’s AI model recognizes.

“Building on this result, we hope to continue our research on multilingual ASRs for other language groups, to better assist our growing body of diverse users,” the coauthors wrote. “Google’s mission is not just to organize the world’s information but to make it universally accessible, which means ensuring that our products work in as many of the world’s languages as possible.”

The system — or one like it — is likely to find its way into Google Assistant, which in February gained multilingual support for multiturn conversations in Korean, Hindi, Swedish, Norwegian, Danish, and Dutch. In related news, Google introduced Interpreter Mode for translations in dozens of languages and nine new AI-generated voices.

Author: Kyle Wiggers

Source: VentureBeat