Google has shown off an artificial intelligence system that can create images based on text input. The idea is that users can enter any descriptive text and the AI will turn that into an image. The company says the Imagen diffusion model, created by the Brain Team at Google Research, offers “an unprecedented degree of photorealism and a deep level of language understanding.”

This isn’t the first time we’ve seen AI models like this. OpenAI’s DALL-E and its successor, DALL-E 2, generated headlines as well as images because of how adeptly they turn text into visuals. Google’s version, however, tries to create more realistic images.

To assess Imagen against other text-to-image models (including DALL-E 2, VQ-GAN+CLIP and Latent Diffusion Models), the researchers created a benchmark called DrawBench. That’s a list of 200 text prompts that were entered into each model. Human raters were asked to assess each image. They “prefer Imagen over other models in side-by-side comparisons, both in terms of sample quality and image-text alignment,” Google said.
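For a rough sense of how side-by-side ratings like these can be turned into a preference score, here is a minimal Python sketch. It assumes each rater judgment is recorded as the name of the preferred model. The function name, the data format and the votes themselves are made up for illustration and are not taken from Google's evaluation.

```python
from collections import Counter


def preference_rates(votes):
    """Return each model's share of rater preferences.

    votes: iterable of model names, one per side-by-side judgment
    (a hypothetical format, not the one used by the researchers).
    """
    counts = Counter(votes)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {model: wins / total for model, wins in counts.items()}


# Placeholder votes for illustration only; these are not DrawBench results.
example_votes = ["model_a", "model_b", "model_a", "model_a"]
rates = preference_rates(example_votes)
```

A real evaluation would track sample quality and image-text alignment as separate questions, so a tally like this would be run once per question.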

It’s worth noting that the examples shown on the Imagen website are curated. As such, these may be the best of the best images that the model created. They may not accurately reflect most of the visuals that it generated.

Like DALL-E, Imagen is not available to the public. Google doesn’t think it is ready for general use yet, for a number of reasons. For one thing, text-to-image models are typically trained on large, uncurated datasets scraped from the web, which introduces a number of problems.

“While this approach has enabled rapid algorithmic advances in recent years, datasets of this nature often reflect social stereotypes, oppressive viewpoints, and derogatory, or otherwise harmful, associations to marginalized identity groups,” the researchers wrote. “While a subset of our training data was filtered to removed noise and undesirable content, such as pornographic imagery and toxic language, we also utilized LAION-400M dataset, which is known to contain a wide range of inappropriate content including pornographic imagery, racist slurs and harmful social stereotypes.”

As a result, they said, Imagen has inherited the “social biases and limitations of large language models” and may depict “harmful stereotypes and representation.” The team said preliminary findings indicated that the AI encodes social biases, including a tendency to create images of people with lighter skin tones and to place them into certain stereotypical gender roles. Additionally, the researchers noted that there is the potential for misuse if Imagen were made available to the public as is.

The team may, however, eventually allow the public to enter text into a version of the model to generate their own images. “In future work we will explore a framework for responsible externalization that balances the value of external auditing with the risks of unrestricted open-access,” the researchers wrote.

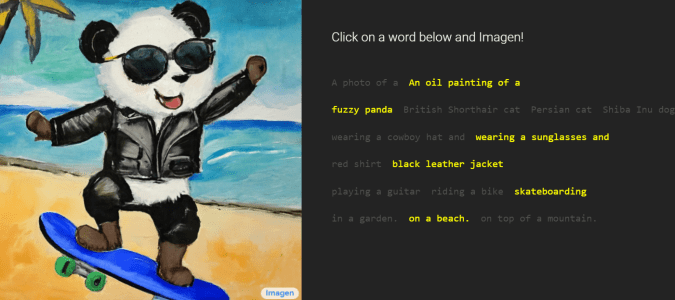

You can try Imagen on a limited basis, though. On its website, you can create a description using pre-selected phrases. Users can select whether the image should be a photo or an oil painting, the type of animal displayed, the clothing it wears, the action it’s undertaking and the setting. So if you’ve ever wanted to see an interpretation of an oil painting depicting a fuzzy panda wearing sunglasses and a black leather jacket while skateboarding on a beach, here’s your chance.
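The phrase picker amounts to filling slots in a sentence template. Here is a minimal Python sketch of that idea. The `build_prompt` function and its wording are assumptions made for illustration, and the exact phrasing the Imagen demo uses may differ.

```python
def build_prompt(style, animal, clothing, action, setting):
    """Join pre-selected phrases into a single text prompt.

    Hypothetical helper: the slot names mirror the choices described
    above, but the sentence template itself is an assumption.
    """
    # Choose "A" or "An" based on the first letter of the style phrase.
    article = "An" if style[0].lower() in "aeiou" else "A"
    return f"{article} {style} of {animal} wearing {clothing} {action} {setting}."


prompt = build_prompt(
    style="oil painting",
    animal="a fuzzy panda",
    clothing="sunglasses and a black leather jacket",
    action="skateboarding",
    setting="on a beach",
)
```

Restricting users to fixed phrases keeps the set of possible prompts small, which fits with the researchers' caution about open-ended public access.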

Author: K. Holt

Source: Engadget