
Dear camera manufacturers, I am deeply annoyed that my phone often takes better pictures straight out of the camera than my DSLR (or any mirrorless camera) does.

I am not a professional photographer, nor am I a vlogger. I am a professional, though: I’m an engineer, I’m educated, I’m comfortable with technology, and I have enough budget to spend on enthusiast- to professional-level photography equipment.

There is no reason my phone should be better than my camera (other than camera manufacturers being unwilling to invest in in-camera software). My DSLR, with the lenses I have, captures far more information than my phone, and at much lower noise levels. Therefore, pictures straight from my camera should be better than those straight from my phone, always!

Please don’t tell me I can do it in post-processing. I dislike post-processing; I don’t have the time. I want to take the picture in the moment and be done. I’m willing to post-process now and then, but it should truly be the exception.

At its simplest, that means I want photos (or time-lapse videos) I can share immediately, with a RAW (or RAW-like) backup file for those exceptional cases where I want to do additional editing (and even then ideally in-camera, to produce a new image that I can share immediately).

My favorite subjects are animals, birds, and landscapes (both static and timelapse).

Basic Features and Specs

Here are some basic features I want:

1. High ISO (at least 50K, ideally 100K or more), because some animals are nocturnal and predators are most active in bad light.

2. IBIS (in-body image stabilization), because most of my animal and bird photos are freehand, and often in bad light.

3. Fast auto-focus.

4. Animal/bird eye auto-focus (thank you, Canon R5, for showing what is possible).

5. Good low-light focusing ability.

6. The ability to cycle between subjects with the joystick, please.

7. Photo sharing via cellphone (but please better and faster than the current clunky camera-to-phone apps).

8. 14-bit RAW.

Other Features and Specs

Now, let’s talk about things that should have been obvious to include:

Intelligent Aperture Priority

When I’m shooting in aperture priority, the camera should choose a shutter speed fast enough to keep the image crisp, raising the ISO as needed to achieve this. At its simplest, the camera can take the lens’s current focal length into consideration (I’m looking at you, Nikon). But accelerometers are cheap: include them in the camera and use their readings when setting the shutter speed. Furthermore, in mirrorless systems with animal/bird eye AF, the camera already knows what the subject is, so it should take the subject’s motion into consideration too.
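To make the idea concrete, here is a minimal Python sketch of how such an aperture-priority mode might pick a shutter speed. Everything in it is an illustrative assumption rather than any manufacturer’s logic: the function names are hypothetical, the focal-length limit is the familiar 1/(focal length) rule of thumb, the shake limit assumes a motion sensor reporting the residual angular rate (rad/s) after stabilization, and the subject limit assumes the AF tracker can report the subject’s speed in pixels per second. The camera takes the tightest limit and raises ISO to keep the metered exposure.

```python
import math

# Illustrative sketch: all function names and parameters are hypothetical.


def reciprocal_rule_limit(focal_length_mm: float, crop_factor: float = 1.0,
                          ibis_stops: float = 0.0) -> float:
    """Slowest handheld shutter time (s) by the 1/(focal length) rule of thumb.

    Each stop of stabilization is assumed to double the usable exposure time.
    """
    return (2.0 ** ibis_stops) / (focal_length_mm * crop_factor)


def shake_limit(focal_length_mm: float, pixel_pitch_um: float,
                angular_rate_rad_s: float, max_blur_px: float = 1.0) -> float:
    """Longest shutter time (s) keeping rotational shake blur under max_blur_px.

    Small-angle approximation: image shift on the sensor ~= f * omega * t.
    angular_rate_rad_s is the residual rate after stabilization (assumed input).
    """
    if angular_rate_rad_s <= 0:
        return math.inf
    pitch_mm = pixel_pitch_um / 1000.0
    return max_blur_px * pitch_mm / (focal_length_mm * angular_rate_rad_s)


def subject_limit(subject_speed_px_s: float, max_blur_px: float = 1.0) -> float:
    """Longest shutter time (s) keeping a tracked subject's blur under max_blur_px."""
    if subject_speed_px_s <= 0:
        return math.inf
    return max_blur_px / subject_speed_px_s


def choose_exposure(metered_shutter_s: float, metered_iso: float, *,
                    focal_length_mm: float, crop_factor: float = 1.0,
                    ibis_stops: float = 0.0, pixel_pitch_um: float = 4.4,
                    angular_rate_rad_s: float = 0.0, subject_speed_px_s: float = 0.0,
                    max_iso: float = 102400) -> tuple[float, float]:
    """Return (shutter_s, iso) for aperture priority at a fixed aperture."""
    limit = min(reciprocal_rule_limit(focal_length_mm, crop_factor, ibis_stops),
                shake_limit(focal_length_mm, pixel_pitch_um, angular_rate_rad_s),
                subject_limit(subject_speed_px_s))
    shutter = min(metered_shutter_s, limit)
    # Aperture is fixed, so exposure scales with shutter time * ISO.
    iso = metered_iso * metered_shutter_s / shutter
    if iso > max_iso:
        # Out of ISO headroom: cap ISO and accept a longer exposure.
        iso = max_iso
        shutter = metered_shutter_s * metered_iso / max_iso
    return shutter, iso
```

For example, if the meter asks for 1/60 s at ISO 800 with a 500 mm lens, `choose_exposure(1/60, 800, focal_length_mm=500)` shortens the shutter to 1/500 s and raises ISO to about 6,667 to compensate.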

While we are at it, when the camera knows what the subject is, it should also set the exposure optimally for that subject.
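One simple way to do subject-aware exposure, sketched below under stated assumptions: weight the metered luminance heavily toward the detected subject’s bounding box and compute the compensation (in stops) that brings that weighted mean to middle gray. The 18% target, the 0.8 subject weight, and the function name are all hypothetical choices; a real camera would also protect highlights and treat, say, a white egret differently from a black crow.

```python
import math

# Illustrative sketch: target, weighting, and function name are hypothetical.


def subject_exposure_compensation(luma, box, target=0.18, subject_weight=0.8):
    """Exposure compensation (stops) that brings subject-weighted luminance to target.

    luma: 2D list of linear luminance values in [0, 1] (e.g., from live view).
    box: (top, left, bottom, right) of the detected subject; bottom/right exclusive.
    Positive results mean "expose brighter".
    """
    top, left, bottom, right = box
    subject, background = [], []
    for y, row in enumerate(luma):
        for x, value in enumerate(row):
            inside = top <= y < bottom and left <= x < right
            (subject if inside else background).append(value)
    subject_mean = sum(subject) / len(subject)
    background_mean = sum(background) / len(background) if background else subject_mean
    weighted = subject_weight * subject_mean + (1 - subject_weight) * background_mean
    return math.log2(target / max(weighted, 1e-6))
```

With a dark bird (luminance 0.045) filling the box against a bright sky (0.72), the weighted mean already sits at 0.18, so no compensation is applied; metering the sky alone would have called for two stops less exposure.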

File Optimization

I want to be able to choose whether my photo files are optimized for web sharing, HDR TV, or printing. And, of course, I want to be able to change that setting later and create new photos from the RAW files on the fly.
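As a rough illustration of what "optimized for" could mean, the sketch below encodes the same scene-linear values (as would come from the RAW file) for three hypothetical targets using two published transfer functions: the sRGB curve (IEC 61966-2-1) for web sharing and SMPTE ST 2084 (PQ) for HDR TV. The target table, the 203 cd/m² reference white taken from ITU-R BT.2408 guidance, and the reuse of sRGB for print are assumptions. The sketch ignores color gamut (HDR TV would also need Rec. 2020 primaries), and a real print path would use the printer’s ICC profile.

```python
# Illustrative sketch: the TARGETS table and render() are hypothetical.


def srgb_encode(linear: float) -> float:
    """sRGB transfer function (IEC 61966-2-1): linear [0, 1] -> encoded [0, 1]."""
    linear = min(max(linear, 0.0), 1.0)
    if linear <= 0.0031308:
        return 12.92 * linear
    return 1.055 * linear ** (1 / 2.4) - 0.055


def pq_encode(nits: float) -> float:
    """SMPTE ST 2084 (PQ) inverse EOTF: luminance in cd/m^2 -> signal in [0, 1]."""
    m1 = 2610 / 16384
    m2 = 2523 / 4096 * 128
    c1 = 3424 / 4096
    c2 = 2413 / 4096 * 32
    c3 = 2392 / 4096 * 32
    y = min(max(nits / 10000.0, 0.0), 1.0)
    ym = y ** m1
    return ((c1 + c2 * ym) / (1 + c3 * ym)) ** m2


TARGETS = {
    "web": {"bits": 8, "encode": srgb_encode},
    # Placeholder: real printing would go through the printer's ICC profile.
    "print": {"bits": 16, "encode": srgb_encode},
    # Scene-linear 1.0 mapped to 203 cd/m^2 diffuse white; highlights above 1.0 keep headroom.
    "hdr_tv": {"bits": 10, "encode": lambda v: pq_encode(v * 203.0)},
}


def render(linear_value: float, target: str) -> int:
    """Encode one linear channel value as an integer code value for the target."""
    spec = TARGETS[target]
    code_max = 2 ** spec["bits"] - 1
    return round(spec["encode"](linear_value) * code_max)
```

Values above 1.0 (bright highlights) clip to 255 on the web target but still map to higher code values in the PQ-encoded HDR target, which is why re-rendering from the RAW file per target makes sense.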

GPS

There is no excuse for not including GPS: according to Alibaba, you can buy a GPS chip for under $1, and a Sony CXD5603GF Ultra-Low-Power GPS Smart Module for $15.

No, getting the GPS coordinates from my phone via Bluetooth sucks. Don’t do it. It eats battery and is iffy. If you are concerned about power usage, see my battery grip suggestion at the end of the article.
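Whichever receiver is used, the fix ends up in the image file as EXIF GPS tags. The sketch below converts a decimal-degree fix into the EXIF layout (degrees, minutes, and seconds as three rationals, plus an N/S or E/W reference letter). The tag names follow the EXIF specification; the function itself and the 1/10000-second precision are illustrative assumptions.

```python
# Illustrative sketch: to_exif_gps() is hypothetical; the tag names are EXIF's.


def to_exif_gps(lat: float, lon: float, denom: int = 10000) -> dict:
    """Convert decimal degrees to EXIF-style GPS tags.

    Each coordinate becomes ((deg, 1), (min, 1), (sec_num, denom)),
    i.e. three (numerator, denominator) rationals.
    """

    def dms(value: float):
        # Work in integer units of 1/denom second so rounding can never yield 60".
        total = round(abs(value) * 3600 * denom)
        degrees, rest = divmod(total, 3600 * denom)
        minutes, seconds = divmod(rest, 60 * denom)
        return ((degrees, 1), (minutes, 1), (seconds, denom))

    return {
        "GPSLatitudeRef": "N" if lat >= 0 else "S",
        "GPSLatitude": dms(lat),
        "GPSLongitudeRef": "E" if lon >= 0 else "W",
        "GPSLongitude": dms(lon),
    }
```

For example, `to_exif_gps(-33.9249, 18.4241)` yields a latitude of 33° 55′ 29.64″ S and a longitude of 18° 25′ 26.76″ E.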

Computational Photography


Finally, we get to the meat of the article: computational photography. As Apple, Google, and others have already shown, computational photography is where most of the innovation happens and what makes the photos my phone takes so good. I want to experience computational photography as part of my camera.

Arsenal is a great proof of concept, but I don’t want to connect 4 devices together (phone, Arsenal, camera, and tripod) to be able to take a picture. No, I want just one device — my camera. Because that way I can focus on taking the photo!

Entry-level examples of computational photography (or at least the minimum I expect to see) are:

HDR

HDR, and I mean real HDR, where the camera takes multiple exposures. We all have different feelings about what makes an HDR shot good. The new Google Pixel camera app has a great solution to this with its dual exposure controls for HDR shots. My camera already has two dials that could be used for this; all it needs is an HDR setting on the mode dial (and, of course, the computational photography algorithms to implement it).

It should be possible to create new images in-camera from the composite 42-bit RAW-like file (or whatever higher bit depth is needed) simply by selecting new dial settings. Post-processing software should also be able to handle the RAW-like file.
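To illustrate how such a composite could be built, here is a minimal sketch of merging bracketed exposures into one high-bit-depth radiance image. It assumes linear RAW data normalized to [0, 1] and frames that are already aligned. Each pixel’s radiance is a weighted average of value divided by exposure time, with a "hat" weight that trusts mid-tones and ignores clipped or black samples. This is a textbook-style merge, not any phone maker’s actual pipeline, and the function name is hypothetical.

```python
# Illustrative sketch: merge_exposures() is hypothetical; frames are assumed aligned.


def merge_exposures(frames, exposure_times):
    """Merge aligned, bracketed linear RAW frames into relative scene radiance.

    frames: list of 2D lists of linear sensor values normalized to [0, 1].
    exposure_times: shutter time (s) of each frame, in the same order.
    """

    def weight(v):
        # "Hat" weight: 1 at mid-scale, falling to 0 at black (0) and clipping (1).
        return 1.0 - abs(v - 0.5) / 0.5 if 0.0 < v < 1.0 else 0.0

    shortest = exposure_times.index(min(exposure_times))
    height, width = len(frames[0]), len(frames[0][0])
    radiance = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            num = den = 0.0
            for frame, t in zip(frames, exposure_times):
                w = weight(frame[y][x])
                num += w * frame[y][x] / t  # this frame's own radiance estimate
                den += w
            if den > 0:
                radiance[y][x] = num / den
            else:
                # Clipped (or black) in every frame: the shortest exposure gives
                # the best available lower bound.
                radiance[y][x] = frames[shortest][y][x] / exposure_times[shortest]
    return radiance
```

If a pixel is clipped even in the shortest frame, the merge can only report a lower bound for it; recovering it would take a wider bracket.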

For all static photos I have this expectation, which I call the Sith expectation: there are always two, the RAW-like file and a resulting JPEG. After taking the picture, I want to be able to create new JPEGs from the RAW-like file by changing settings (similar to what Fujifilm cameras already offer).

The new Google Pixel also shows a preview, so minimal re-adjustment of the dual exposure settings is required.
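The "new JPEG from new dial settings" idea can be sketched as a re-render of the merged radiance with two parameters. In this sketch, mapping one dial to global brightness (in stops) and the other to shadows is an assumption modeled loosely on the Pixel’s two controls, and the tone curve is Reinhard’s global operator plus a power curve, swapped in for illustration; it is not Google’s algorithm.

```python
import math

# Illustrative sketch: the two "dials" and their mapping are hypothetical.


def render_from_radiance(radiance, brightness_ev=0.0, shadows=0.0, key=0.18):
    """Render display values in [0, 1) from merged radiance using two dials.

    brightness_ev: global exposure shift in stops (first dial).
    shadows: roughly -1..1; positive lifts dark tones, negative deepens them (second dial).
    """
    positive = [v for row in radiance for v in row if v > 0]
    # Log-average luminance anchors the scene to a mid-gray "key" (Reinhard).
    log_avg = math.exp(sum(math.log(v) for v in positive) / len(positive)) if positive else 1.0
    gain = key / log_avg * 2.0 ** brightness_ev
    gamma = 2.0 ** (-shadows)  # < 1 brightens dark and mid tones; 0 and 1 stay fixed
    rendered = []
    for row in radiance:
        out_row = []
        for v in row:
            scaled = max(v, 0.0) * gain
            tone = scaled / (1.0 + scaled)  # Reinhard global curve compresses highlights
            out_row.append(tone ** gamma)
        rendered.append(out_row)
    return rendered
```

Because the merged radiance is kept, changing either dial just reruns this cheap rendering step; nothing has to be re-shot.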

Of course, this would need to work handheld, and, as with the phone, the camera should be able to use its accelerometer data to improve the detail of the composite HDR shot. The same goes for focus stacking, described below.
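A minimal sketch of how motion-sensor data could give burst alignment a head start: integrate the angular rate recorded between two frames, convert the rotation to a pixel offset via the small-angle approximation, and shift the frame back. It assumes an inertial sensor that reports yaw and pitch rates (a gyroscope, usually packaged with the accelerometer in an IMU), a sign convention in which positive rotation moves image content toward +x/+y, and integer-pixel shifts. Real pipelines refine this estimate with image-based registration. The function names are hypothetical.

```python
# Illustrative sketch: names, sign convention, and integer shifts are assumptions.


def gyro_shift_px(gyro_samples, focal_length_mm, pixel_pitch_um):
    """Estimate the image shift (dx, dy) in pixels between two burst frames.

    gyro_samples: (dt_s, yaw_rate_rad_s, pitch_rate_rad_s) readings taken
    between the two exposures. Assumed convention: positive yaw/pitch moves
    image content toward +x/+y.
    """
    yaw = sum(dt * wy for dt, wy, _ in gyro_samples)
    pitch = sum(dt * wp for dt, _, wp in gyro_samples)
    # Small-angle approximation: shift on the sensor ~= focal length * angle.
    px_per_rad = focal_length_mm / (pixel_pitch_um / 1000.0)
    return yaw * px_per_rad, pitch * px_per_rad


def shift_frame(frame, dx, dy, fill=0.0):
    """Undo a (dx, dy) displacement of the frame's content, rounded to whole pixels."""
    sx, sy = round(dx), round(dy)
    h, w = len(frame), len(frame[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            src_y, src_x = y + sy, x + sx
            if 0 <= src_y < h and 0 <= src_x < w:
                out[y][x] = frame[src_y][src_x]
    return out
```

At 500 mm with 5 µm pixels, a rotation of just 10 microradians already moves the image by one pixel, which is why the sensor data is worth using.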

HDR would also cover low-light photography and astrophotography.
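Low-light and astro modes lean on the same multi-frame idea: averaging N aligned frames with independent, zero-mean noise reduces the noise standard deviation by a factor of √N. The simulation below checks this with a simple Gaussian noise model, which is an assumption (real sensor noise mixes shot noise and read noise, and real pipelines use more robust merges than a plain mean). The function names are hypothetical.

```python
import random
import statistics

# Illustrative simulation: Gaussian noise model and function names are assumptions.


def stack_mean(frames):
    """Pixel-by-pixel mean of aligned frames (each frame is a flat list here)."""
    n = len(frames)
    return [sum(pixels) / n for pixels in zip(*frames)]


def noise_single_vs_stacked(n_frames, signal=0.05, noise_sigma=0.02,
                            n_pixels=20000, seed=1):
    """Simulate a dim, flat patch; return noise std of one frame and of the stack."""
    rng = random.Random(seed)
    frames = [[signal + rng.gauss(0.0, noise_sigma) for _ in range(n_pixels)]
              for _ in range(n_frames)]
    single = statistics.pstdev(frames[0])
    stacked = statistics.pstdev(stack_mean(frames))
    return single, stacked


single, stacked = noise_single_vs_stacked(16)
print(f"single frame: {single:.4f}, 16-frame stack: {stacked:.4f}")
```

With 16 frames, the ratio of single-frame noise to stacked noise comes out close to √16 = 4.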

Focus Stacking

Focus stacking is commonly needed in landscape photography when there are close foreground elements, even when shooting at a high f-number. There is no reason this cannot be done in-camera with multiple exposures (again creating a single RAW file). The joystick on the back of the camera could be used to select multiple focus points, alongside a default mode in which the camera simply optimizes to get the whole picture in focus.
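A common basic approach to focus stacking, sketched below: for every pixel, measure local sharpness in each aligned frame (here the absolute Laplacian) and keep the value from the sharpest frame. This is an illustrative assumption of how a camera might do it, not a specific product’s method, and the function names are hypothetical. Practical implementations also align frames (to correct focus breathing), smooth the selection map, and blend across seams.

```python
# Illustrative sketch: frames are assumed aligned; function names are hypothetical.


def laplacian_energy(img, y, x):
    """Absolute 4-neighbour Laplacian: a simple per-pixel sharpness measure."""
    h, w = len(img), len(img[0])

    def px(yy, xx):
        # Clamp coordinates so border pixels reuse their nearest neighbour.
        return img[min(max(yy, 0), h - 1)][min(max(xx, 0), w - 1)]

    return abs(4 * px(y, x) - px(y - 1, x) - px(y + 1, x) - px(y, x - 1) - px(y, x + 1))


def focus_stack(frames):
    """For each pixel, keep the value from the frame where it is sharpest.

    frames: aligned 2D lists of equal size, each focused at a different distance.
    Returns the composite image and a map of which frame each pixel came from.
    """
    h, w = len(frames[0]), len(frames[0][0])
    composite = [[0.0] * w for _ in range(h)]
    index_map = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            best = max(range(len(frames)), key=lambda i: laplacian_energy(frames[i], y, x))
            composite[y][x] = frames[best][y][x]
            index_map[y][x] = best
    return composite, index_map
```

In a quick test with one frame sharp on the left half and another sharp on the right, the composite comes out sharp everywhere, and the index map shows which frame each region came from.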

Because focus stacking and HDR use different controls, the two could also be combined.

Timelapses

Timelapses: yeah, I said I don’t take videos, but time-lapses are different. Arsenal is a great example of what is possible, especially its day-night time-lapses, but I want all of this in-camera (using three devices to complete one task just sucks). And please don’t fill my camera with thousands of pictures plus a video; just give me the video (with a menu option, of course, for those who also, or only, want the thousands of RAW files).
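Day-to-night time-lapses are hard mainly because exposure has to change by many stops without visible jumps, which show up as flicker. The sketch below smooths the meter’s readings frame by frame and caps each change at a small step. The 0.1-stop cap, the smoothing factor, and the function name are assumptions chosen for illustration, not how Arsenal or any camera actually does it.

```python
# Illustrative sketch: step cap, smoothing factor, and name are hypothetical.


def ramp_exposures(metered_ev, max_step=0.1, smoothing=0.2):
    """Plan per-frame exposure values (in stops) for a day-to-night time-lapse.

    Each frame moves a fraction `smoothing` of the way toward the meter
    reading, but never more than `max_step` stops, so brightness drifts
    gradually instead of jumping between frames.
    """
    if not metered_ev:
        return []
    current = metered_ev[0]
    planned = []
    for target in metered_ev:
        step = smoothing * (target - current)
        step = max(-max_step, min(max_step, step))
        current += step
        planned.append(current)
    return planned
```

A single bright spike in the meter readings (a passing headlight, say) nudges the planned exposure by at most 0.1 stop instead of darkening a whole frame.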

And to get a little bit fancier (since I’m going to have to wait):

Crowd/Object Removal

Remove people from places using multiple exposures. Many architecture and landscape photos would benefit from having no humans in them. This should be very doable with multiple exposures, assuming the people are moving. It would require exposures spaced further apart in time (which might need a tripod), unlike HDR and focus stacking, where the exposures are taken in as quick a succession as possible.
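The classic technique for this is a per-pixel median across aligned frames: anything that covers a pixel in fewer than half of the frames is voted out, and the static background remains. A minimal sketch, assuming the frames are already aligned; the function name is hypothetical.

```python
import statistics

# Illustrative sketch: remove_transients() is hypothetical; frames are assumed aligned.


def remove_transients(frames):
    """Per-pixel median across aligned frames taken some time apart.

    Anything present at a pixel in fewer than half of the frames (people
    walking through) is voted out; the static background wins.
    """
    h, w = len(frames[0]), len(frames[0][0])
    return [[statistics.median(frame[y][x] for frame in frames) for x in range(w)]
            for y in range(h)]
```

Someone who stays in the same spot for more than half of the frames will survive the median, which is why the exposures need to be spread out in time.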

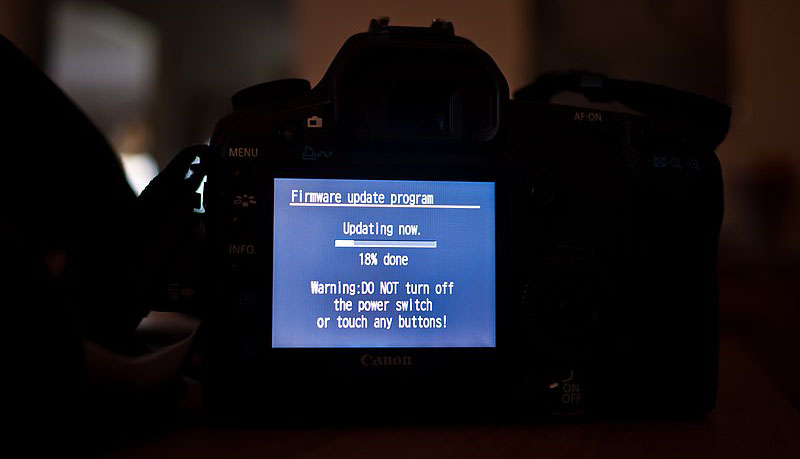

Continual Updates

I don’t want the computational photography capabilities of my camera to be frozen at the moment I bought it. No, I expect continual innovation, just as my phone is updated regularly. The catch is that the computational photography innovation cycle is much quicker than the camera hardware cycle: I don’t want to buy a new camera body every three years, as I do with phones; I expect my camera body to be good for 10 years.

My proposed solution is to extend the functionality of the battery grip so that it can be upgraded more often, e.g., every three years, to gain the processing power needed to run newer algorithms. Yes, this requires adding a high-bandwidth connection between the camera body and the battery grip. As Arsenal has demonstrated, current cameras can already work together with an additional processing unit.
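Some back-of-the-envelope arithmetic shows why the grip would need a fast link. The sensor resolution, bit depth, and burst rate below are hypothetical, and the figure ignores compression and protocol overhead.

```python
# Illustrative arithmetic: sensor size, bit depth, and frame rate are hypothetical.


def raw_bandwidth_gbit_s(megapixels: float, bits_per_pixel: int, frames_per_s: float) -> float:
    """Uncompressed sensor data rate in Gbit/s (no compression, no protocol overhead)."""
    return megapixels * 1e6 * bits_per_pixel * frames_per_s / 1e9


# A 45 MP sensor, 14-bit RAW, 20 frames per second burst.
rate = raw_bandwidth_gbit_s(45, 14, 20)
print(f"{rate:.1f} Gbit/s")  # 12.6 Gbit/s
```

That 12.6 Gbit/s already exceeds the 10 Gbit/s of a USB 3.2 Gen 2 link, before any overhead.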

However, I want this to be a single, seamless user experience: with such an improved battery grip attached, my camera should simply be capable of more using its existing mechanical controls.

This more regular battery grip upgrade cycle would also allow it to keep up with newer higher bandwidth ways to connect to my phone and make sharing photos quicker, easier, and hopefully less finicky.

Open Standards

This brings me to the last point — use open standards!

1. I don’t want to be forced to use your crappy app to connect my phone to my camera.

2. I still want to be able to work with my photo and RAW files 40 years from now.

3. Importantly, open standards allow innovation: if someone else develops a better computational photography method than the ones you supply, I would like to be able to load it on my camera without any fuss.
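What point 3 could look like in software, as a purely hypothetical sketch: a small, documented interface that any vendor’s or third party’s algorithm implements, and a registry in which the camera firmware looks algorithms up by name. The `Frame` and `PhotoAlgorithm` types, the registry, and the example plug-in are all invented for illustration; an actual open standard would specify this in a language-neutral way.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Protocol

# Purely hypothetical plug-in interface; no such standard exists in this form.


@dataclass
class Frame:
    """One image: linear pixel values plus capture metadata."""
    pixels: List[List[float]]
    metadata: Dict[str, float] = field(default_factory=dict)


class PhotoAlgorithm(Protocol):
    """What any vendor's or third party's algorithm would implement."""
    name: str

    def process(self, frames: List[Frame]) -> Frame:
        ...


REGISTRY: Dict[str, PhotoAlgorithm] = {}


def register(algorithm: PhotoAlgorithm) -> None:
    """Make an algorithm selectable by name (e.g., from the camera's menu)."""
    REGISTRY[algorithm.name] = algorithm


class MeanStack:
    """Example third-party plug-in: average a burst of aligned frames."""
    name = "mean_stack"

    def process(self, frames: List[Frame]) -> Frame:
        n = len(frames)
        height, width = len(frames[0].pixels), len(frames[0].pixels[0])
        pixels = [[sum(f.pixels[y][x] for f in frames) / n for x in range(width)]
                  for y in range(height)]
        return Frame(pixels, {"frames_merged": float(n)})


register(MeanStack())
```

The point of the design is that the camera only depends on the interface, so swapping in a better algorithm means registering a new implementation rather than waiting for new firmware from the manufacturer.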

Conclusion

In closing, I haven’t asked for anything special here; there is no reason we could not have all of this today, with today’s software and hardware. Please, camera manufacturers: you can do a lot better with today’s technology, and with a little care you can build products that serve as a platform for innovation.

The opinions expressed in this article are solely those of the author.

About the author: Dr. Jan-Jan van der Vyver started taking pictures in his early teens. He remembers developing his own black-and-white film. But he loves digital photography, especially taking pictures of wild creatures.

Image credits: Header photos by Seo Jaehong and Fredrik Solli Wandem

Author: Dr. Jan-Jan van der Vyver

Source: Petapixel