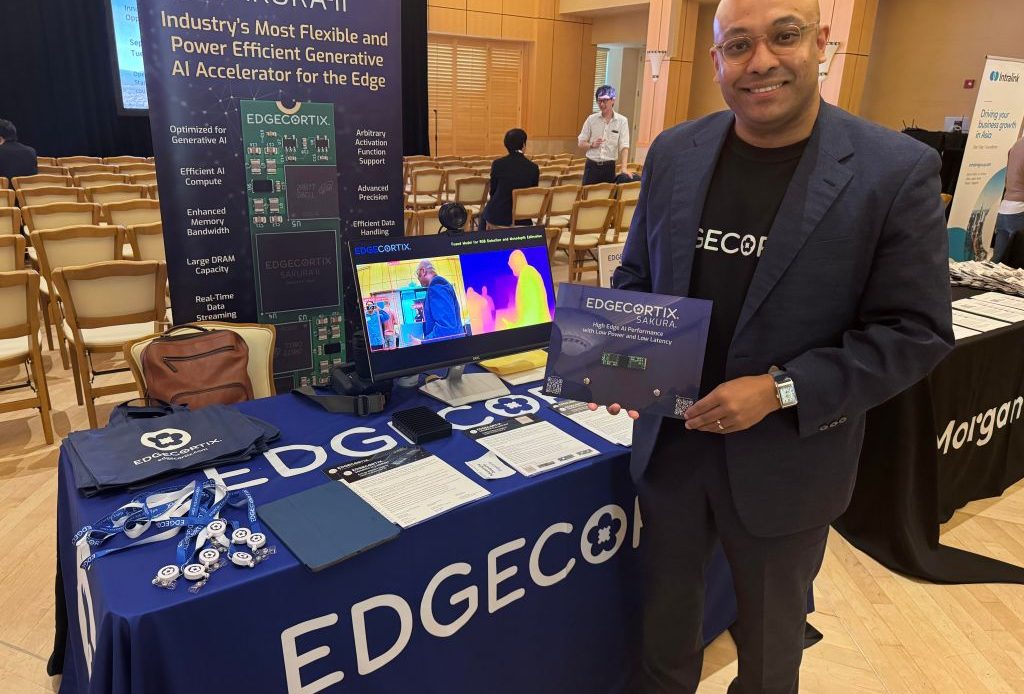

EdgeCortix is pioneering energy-efficient AI processors for the edge. It’s a mighty dream, obstructed only by this little company worth $2.76 trillion called Nvidia. But EdgeCortix has been pursuing its dream step by step, said Sakyasingha Dasgupta, CEO of the Japan-based company. A 25-year veteran of AI, Dasgupta founded the company in July 2019. I met him at the recent Japan-U.S. Innovation Awards at Stanford University, where EdgeCortix was among the honorees.

Early on, EdgeCortix started work on an AI-specific processor architecture, building it from the ground up with a software-first approach. The goal was to deliver near cloud-level performance at the edge while drastically improving energy efficiency and processing speed.

EdgeCortix’s software-first approach centers on its patented “hardware and software co-exploration” system software platform, which is designed to meet the rising demand of today’s AI applications, from vision to generative AI, in a sustainable manner. Processing data at the edge lets EdgeCortix go where the problem is: about 75% of the world’s data is generated or processed at the edge, Dasgupta said.


Origins

As a teenager, Dasgupta learned about technology by developing games, and that got him into AI. He has spent nearly two decades in the AI industry, starting before deep learning was popular, and his work included research on early neuromorphic systems.

“There were a lot of things happening with AI in games, with classical AI, and then years later I found the interest turned toward neural networks,” he said. AI has become ubiquitous only in the last three to four years, he added, and Dasgupta found he had an advantage in approaching the problem through software.

Today’s generative AI models have grown exponentially, requiring huge amounts of data and complicated hardware systems. Demand is growing so fast that the volume of data is rising exponentially, yet traditional processors can’t keep up as Moore’s Law stalls. So Dasgupta looked for ways to make computing more energy efficient so that it could keep pace with AI.

“When we started, we started talking about a software-first approach. And now we are seeing many people adopt that same language, and I think it’s the right direction,” he said. “While our hardware is extremely efficient, the key reason we have been seeing tremendous adoption across various customers, from aerospace to commercial sectors, is that the software makes it easy.”

“For a customer using a CPU or a GPU today, you can have a seamless transition to new hardware without having to tinker with and change the software they’re used to using,” he added.

Dasgupta believes there will even be servers at the edge of the network. He also believes that system scaling is possible through both software and architectural innovation, so that computers don’t become prohibitively expensive or even bigger energy hogs.

“We want to make it power efficient,” he said. “So you get more work done, and the amount of power that you consume should be much lower. That’s really critically important when it comes to the edge.”

The edge here means systems outside the data center, such as robots, smart-city devices, manufacturing equipment, and even satellites.

“Our core mission is to be able to bring near cloud-level performance to the edge,” he said. “That’s a tall order.”

Dasgupta foresaw that computing environments would be heterogeneous, made up of many different kinds of computer architectures such as Arm, RISC-V, and Nvidia GPUs.

Dasgupta has been on a long journey, and serendipity took him to Japan. While in Germany, he met a woman from Japan who wanted to move back there; he went with her, and she is now his wife. At the RIKEN Center for Computational Science, he did research on supercomputers. He also worked at IBM Research in Tokyo and later in Yorktown Heights.

At IBM, Dasgupta saw the challenges of trying to solve bottlenecks on both the software and hardware sides of computing.

“That’s when I realized that you have to do both. You have to work on the compiler side. But at the same time, you have to create AI domain-specific hardware that was designed for that AI,” he said.

“It’s a very fragmented market and there are many processors working together, so you need a compiler that can manage heterogeneous workflows,” he said. “That’s what our software does. It’s the first industrial software that enables commercially available processors to work together.”

From MERA to Sakura-II

First, the company created compiler software to translate code written in higher-level languages into machine-focused instructions. That compiler became the heart of its MERA Compiler and Software framework, which the company paired with its novel Dynamic Neural Accelerator (DNA), a runtime-reconfigurable processor architecture.

The software framework provides a robust platform for deploying the latest neural network models in a framework-agnostic manner. With MERA’s built-in heterogeneous support for leading processors, including AMD, Intel, Arm, and RISC-V, it is fast and easy to integrate the EdgeCortix AI platform into existing systems.

Renesas, the Japanese chip company, was one of the earliest adopters of the software, which can power an entire generation of microcontrollers and processors on the market.

The company also makes partners out of its rivals: EdgeCortix licensed this software to other chip companies, which let it generate revenue early.

But the company kept moving up the food chain in pursuit of more efficient neural processing. It became a fabless semiconductor company (it designs its chips and has them manufactured by a third party) and created Sakura, a chip solution. The 12-nanometer chip delivered 40 trillion operations per second on 10 watts of power. With the new chip, those numbers reach 60 trillion operations per second on eight watts.
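To put the reported figures on a common footing, energy efficiency is often expressed as trillions of operations per second per watt (TOPS/W). The short Python sketch below simply divides the throughput by the power figures quoted above. It assumes both chips’ numbers are comparable vendor-stated peak values; real-world efficiency varies with the model, numeric precision, and utilization. The helper name `tops_per_watt` is illustrative, not part of any EdgeCortix tool.

```python
def tops_per_watt(tops: float, watts: float) -> float:
    """Efficiency in trillions of operations per second per watt (TOPS/W)."""
    if watts <= 0:
        raise ValueError("power must be positive")
    return tops / watts


# Figures as reported in the article; assumed to be comparable peak values.
sakura_1 = tops_per_watt(40, 10)   # first-generation Sakura: 4.0 TOPS/W
sakura_2 = tops_per_watt(60, 8)    # Sakura-II: 7.5 TOPS/W
improvement = sakura_2 / sakura_1  # ratio of the two efficiency figures

print(f"Sakura:    {sakura_1:.2f} TOPS/W")
print(f"Sakura-II: {sakura_2:.2f} TOPS/W")
print(f"Gain:      {improvement:.3f}x")
```

By this simple measure, the second-generation figures work out to 7.5 TOPS/W against 4 TOPS/W for the first Sakura chip, roughly a 1.9x gain in efficiency.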

Sakura-II AI Accelerator

The company’s latest product is the Sakura-II AI Accelerator, a coprocessor designed for applications that require fast, real-time AI inferencing at a batch size of one (Batch=1), where each input is processed as it arrives rather than grouped with others. Despite its small footprint, it delivers excellent performance with low power consumption, Dasgupta said: 60 trillion operations per second while consuming eight watts.

The key to Sakura-II’s efficiency is its low-latency DNA architecture. This runtime-reconfigurable design enables flexible, highly parallelized processing, making it ideal for edge AI workloads.

Sakura-II is designed to handle the most challenging generative AI applications at the edge, enabling designers to create new content based on disparate inputs like images, text, and sounds. Supporting models ranging from DETR and ViT to Stable Diffusion and multi-billion-parameter models like Llama 2, all within a typical power envelope of 8W, Sakura-II meets the requirements of a vast array of edge generative AI uses in vision, language, audio, and many other applications.

Sakura-II modules and cards are architected to run the latest vision and generative AI models with market-leading energy efficiency and low latency. Whether customers want to integrate AI functionality into an existing system or are creating new designs from scratch, these modules and cards provide a platform for AI system development.

World Economic Forum recognition

In 2024, the World Economic Forum recognized EdgeCortix as a Technology Pioneer, one of the world’s 100 most promising start-ups driving technological innovation. EdgeCortix’s platform addresses critical challenges in edge AI across various domains, including defense, robotics, smart manufacturing, smart cities, and automotive sensing.

EdgeCortix collaborates with industry leaders to provide robust, high-performance AI-inference solutions. For instance, EdgeCortix teamed up with Renesas on its RZ/V MPU series to enhance application software, including AI technology.

Dasgupta studied AI at public research institutes such as the Max Planck Society in Germany and Japan’s RIKEN research institute. He also worked on AI at Microsoft and IBM Research. It was this rich background that ultimately led him to establish EdgeCortix, a fabless semiconductor company headquartered in Tokyo that is committed to optimizing AI processing at the edge while minimizing power consumption.

Such startups in Japan have been rare, and rarer still when the CEO is non-Japanese. Dasgupta said that in this way, he sees himself as a “unicorn,” meaning a rare kind of person, as opposed to a startup that is worth $1 billion. Of course, there’s no telling whether his firm could become that kind of unicorn as well.

Unlike most AI companies that prioritize silicon devices and adapt software afterward, Dasgupta championed a different approach. He recognized that many powerful AI solutions were ineffective in edge scenarios due to excessive power consumption. In July 2019, he founded EdgeCortix with a radical software-first mindset, aiming to deliver near-cloud-level performance at the edge while drastically reducing operating costs.

Under his leadership, EdgeCortix developed groundbreaking technologies, including the MERA Compiler and Software framework and the novel Dynamic Neural Accelerator (DNA), a runtime-reconfigurable processor architecture. The company’s latest achievement is the Sakura-II AI Accelerator, which exemplifies its commitment to efficiency, flexibility, and real-time processing of complex models, including generative AI, in low-power environments.

EdgeCortix’s products aim to disrupt the rapidly growing edge AI hardware markets, including defense, aerospace, smart cities, Industry 4.0, autonomous vehicles, and robotics.

The company has worked with Japanese government bodies, including the Ministry of Defense. It has put radiation-tested chips into aerospace applications.

“We can run even the recent large language models — imagine 10 billion parameters — but on eight watts of power consumption or 10 watts of power consumption. And that’s really a game changer because you can bring that to various industries,” Dasgupta said.

About 51% of customers are in Japan and 29% are in the U.S., with the rest mainly in EMEA and India. The company has fewer than 55 employees, and it continues to grow. Revenue grew fast in 2023 and is on track to roughly double in 2024.

“We’ve crossed that part where we have achieved product-market fit with the first-generation Sakura chips,” he said. “And now the second generation is in complete mass production for the generative AI market.”

The company plans to raise more capital and is courting investors for its next round. So far, it has raised nearly $40 million in equity and debt.

Author: Dean Takahashi

Source: Venturebeat

Reviewed By: Editorial Team