ARCore, Google’s platform for mobile augmented reality, is available on 1.2 billion Android devices today. At I/O 2022, it is getting foundational improvements that make it faster and more reliable on the Pixel 6 and in Google Maps Live View.

To improve immersion, Google has upgraded ARCore’s depth capabilities, which let virtual objects appear behind real ones (occlusion) and enable more realistic placement in general. A phone’s existing camera can produce a depth map covering roughly a large room, up to 8 meters (26 feet).
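Conceptually, occlusion comes down to a per-pixel comparison: if the real surface in the depth map is closer to the camera than the virtual object at that pixel, the virtual pixel is hidden. The sketch below is a hypothetical illustration in plain Python, not ARCore’s API or Google’s rendering code. It assumes depths in millimeters and assumes a value of 0 means “no depth estimate”; `occlusion_mask` is an invented helper name.

```python
def occlusion_mask(depth_map, virtual_depth):
    """Hypothetical helper: True where a real surface should hide the virtual object.

    depth_map:     rows of real-world depths in millimeters (0 = no estimate, assumed)
    virtual_depth: rows of the virtual object's depth in millimeters,
                   None where the object does not cover that pixel
    """
    mask = []
    for real_row, virt_row in zip(depth_map, virtual_depth):
        row = []
        for real, virt in zip(real_row, virt_row):
            if virt is None or real == 0:
                # Nothing virtual to draw here, or no depth estimate: don't occlude.
                row.append(False)
            else:
                # Hide the virtual pixel when the real surface is closer to the camera.
                row.append(real < virt)
        mask.append(row)
    return mask


depth = [[1500, 3000], [0, 2500]]       # real scene, millimeters
virt = [[2000, 2000], [2000, None]]     # virtual object placed 2 m away
print(occlusion_mask(depth, virt))      # [[True, False], [False, False]]
```

Pixels without a depth estimate are left unoccluded here, which is one plausible choice; gaps in the depth map therefore show up as virtual objects wrongly drawn in front of real ones, which is why better coverage matters for realism.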

The average time to initial depth has been reduced by around 15%. Average overall coverage of the depth map now reaches 95%, and coverage is dramatically improved on surfaces with minimal texture, as seen on the empty white wall and the black TV. These are areas where classical depth estimation struggles because they lack visual features.
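Google did not spell out how “coverage” is measured. One natural reading is the share of depth-map pixels that receive a valid estimate. The minimal sketch below assumes that definition and, as above, assumes 0 marks a missing estimate; `depth_coverage` is a hypothetical name.

```python
def depth_coverage(depth_map):
    """Fraction of pixels with a depth estimate (assumes 0 = missing)."""
    total = sum(len(row) for row in depth_map)
    if total == 0:
        return 0.0
    valid = sum(1 for row in depth_map for d in row if d > 0)
    return valid / total


# A textureless white wall often yields holes (zeros) for classical methods.
sample = [[1200, 0, 900, 1500],
          [800, 1100, 950, 0]]
print(f"{depth_coverage(sample):.0%}")  # 75%
```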

In fact, this camera-based approach delivers depth coverage that is “now comparable” to that of dedicated depth hardware. On Pixel phones, Google is speeding up depth and motion tracking further by using the second camera. This ARCore improvement is currently rolling out to the Pixel 4 and Pixel 6.

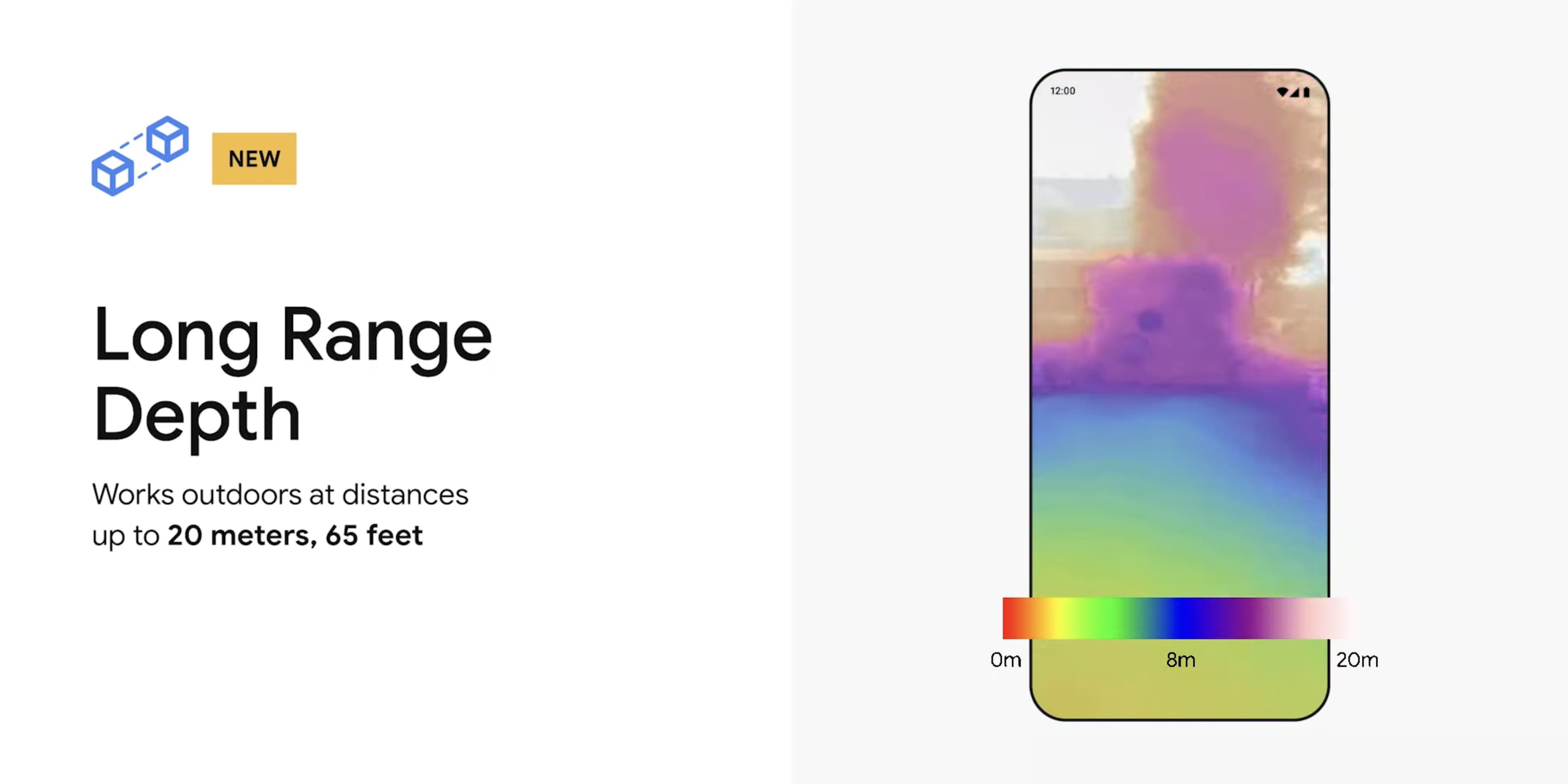

Meanwhile, a new Long Range Depth capability works up to 20 meters (65 feet) outdoors, making occlusion available “even in direct sunlight.” It is rolling out in Google Maps Live View, where it can, for example, accurately blend the destination pin into the surroundings.

Google has also improved ARCore’s motion tracking: finding the first plane is now 17% faster, and tracking resets are down by 15%. Machine learning is being used to improve both metrics further, with Live View in Google Maps set to benefit.

The other big ARCore announcement at I/O 2022 is the Geospatial API, which lets AR applications “calculate the latitude, longitude, altitude, and heading of your phone with greater accuracy than the GPS sensor can provide.” It builds on Google’s past work on Cloud Anchors.
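To see why an accurate heading matters, consider how an app might point a user toward a destination once it knows the phone’s latitude, longitude, and heading. The sketch below is a hypothetical illustration using the standard great-circle initial-bearing formula in plain Python; it does not call ARCore. It assumes heading is given in degrees clockwise from true north, and `bearing_deg` and `relative_bearing_deg` are invented names.

```python
import math


def bearing_deg(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing from point 1 to point 2, degrees clockwise from north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    x = math.sin(dlon) * math.cos(phi2)
    y = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    return (math.degrees(math.atan2(x, y)) + 360.0) % 360.0


def relative_bearing_deg(device_heading, lat, lon, dest_lat, dest_lon):
    """Angle to turn from the phone's heading toward the destination, in (-180, 180].

    device_heading is assumed to be degrees clockwise from true north,
    e.g. as reported alongside latitude/longitude by a geospatial pose.
    """
    diff = (bearing_deg(lat, lon, dest_lat, dest_lon) - device_heading) % 360.0
    return diff - 360.0 if diff > 180.0 else diff


# Phone facing south (180 degrees) with a destination due east: turn 90 degrees left.
print(round(relative_bearing_deg(180.0, 0.0, 0.0, 0.0, 1.0)))  # -90
```

A heading error of even a few degrees shifts where an on-screen pin or arrow lands, which is why a more accurate heading than compass and GPS alone can provide is useful for AR navigation.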

Author: Abner Li

Source: 9TO5Google