Google today announced a trio of updates for Google Assistant that leverage AI to improve how it pronounces names and understands context.

Just as you can already teach Assistant how to pronounce your own name, Google is extending this capability to your contacts. You will be asked to say the name aloud, and “Assistant will listen to your pronunciation and remember it.” Google says the initial recording will not be kept.

> This means Assistant will be able to better understand you when you say those names, and also be able to pronounce them correctly. The feature will be available in English and we hope to expand to more languages soon.

Once the feature rolls out, go to Assistant settings > You > Your people and select a contact to record. The new recording option joins the existing “Default” and “Spell out how it sounds” choices.

Meanwhile, Google is bringing BERT, the neural network-based technique that helps Search understand natural-language queries, to Assistant. The voice assistant’s natural language understanding (NLU) models have been “fully rebuilt” to better understand context and “what you’re trying to do with a command.”

Assistant will now understand instructions like “set a timer for 5, no wait, 9 minutes.” Previously, Assistant would have created a 5-minute timer; now it sets the intended 9-minute countdown. Similarly, “make the alarm one hour later” edits the current alarm rather than creating an entirely new one. Finally, thanks to approximate name matching, users can ask about and control timers without remembering the exact names they gave them.
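The article does not say how Assistant implements these behaviors, and Google's system relies on machine-learned models rather than hand-written rules. Purely as a rough illustration of the two behaviors, the Python sketch below treats a cue such as “no wait” as a self-correction and keeps the number spoken after it, and uses the standard library's `difflib.get_close_matches` for approximate timer-name matching. The cue list, the `resolve_duration` and `find_timer` helpers, and the 0.6 similarity cutoff are all hypothetical.

```python
import re
from difflib import get_close_matches
from typing import List, Optional

# Hypothetical phrases treated as "I'm correcting myself" cues (illustration only).
CORRECTION_CUES = ("no wait", "actually", "i mean")


def resolve_duration(utterance: str) -> Optional[int]:
    """Return the number the user finally meant, ignoring anything said before a correction cue."""
    text = utterance.lower()
    start = 0
    for cue in CORRECTION_CUES:
        idx = text.rfind(cue)
        if idx != -1:
            # Only the text after the latest correction cue counts.
            start = max(start, idx + len(cue))
    numbers = re.findall(r"\d+", text[start:])
    return int(numbers[0]) if numbers else None


def find_timer(spoken_name: str, timer_names: List[str]) -> Optional[str]:
    """Return the existing timer whose name is closest to what was said, if any is close enough."""
    # Compare case-insensitively, but return the name as the user originally saved it.
    by_lower = {name.lower(): name for name in timer_names}
    matches = get_close_matches(spoken_name.lower(), list(by_lower), n=1, cutoff=0.6)
    return by_lower[matches[0]] if matches else None


print(resolve_duration("Set a timer for 5, no wait, 9 minutes"))  # → 9
print(find_timer("bread proof", ["Pasta", "Laundry", "Bread proofing"]))  # → Bread proofing
```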

> This upgrade uses machine learning technology powered by state-of-the-art BERT, a technology we invented in 2018 and first brought to Search that makes it possible to process words in relation to all the other words in a sentence, rather than one-by-one in order.
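BERT is a Transformer encoder, and its ability to relate each word to every other word in a sentence comes from self-attention. The minimal Python sketch below computes scaled dot-product self-attention over a few toy word vectors. The vectors are made up, and the sketch uses identity projections (queries, keys, and values are the raw vectors). Real BERT adds learned query/key/value projections, multiple attention heads, and many stacked layers, none of which are shown here.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(embeddings: List[Vector]) -> Tuple[List[Vector], List[Vector]]:
    """Scaled dot-product self-attention with identity projections (Q = K = V).

    Returns (weights, outputs): weights[i][j] is how much token i attends to
    token j, and outputs[i] is the context-mixed vector for token i.
    """
    d = len(embeddings[0])
    weights: List[Vector] = []
    outputs: List[Vector] = []
    for query in embeddings:
        # Score this token against every token in the sentence, itself included.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in embeddings]
        row = softmax(scores)
        weights.append(row)
        # The output is the attention-weighted average of all token vectors.
        outputs.append([sum(w * value[dim] for w, value in zip(row, embeddings)) for dim in range(d)])
    return weights, outputs


# Toy 3-dimensional vectors for illustration only; real BERT embeddings are learned.
tokens = ["set", "timer", "nine", "minutes"]
vectors = [
    [0.9, 0.1, 0.0],
    [0.2, 0.8, 0.1],
    [0.0, 0.3, 0.9],
    [0.1, 0.7, 0.4],
]

weights, outputs = self_attention(vectors)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each row of `weights` spans every token in the sentence, which is the “in relation to all the other words” property. A strictly left-to-right model would only let each word see the words before it.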

According to Google, the end result is that Assistant can now “respond nearly 100 percent accurately to alarms and timer tasks,” with more use cases coming in the future. You can try these enhancements today on speakers set to US English; they will expand to phones and Smart Displays soon.

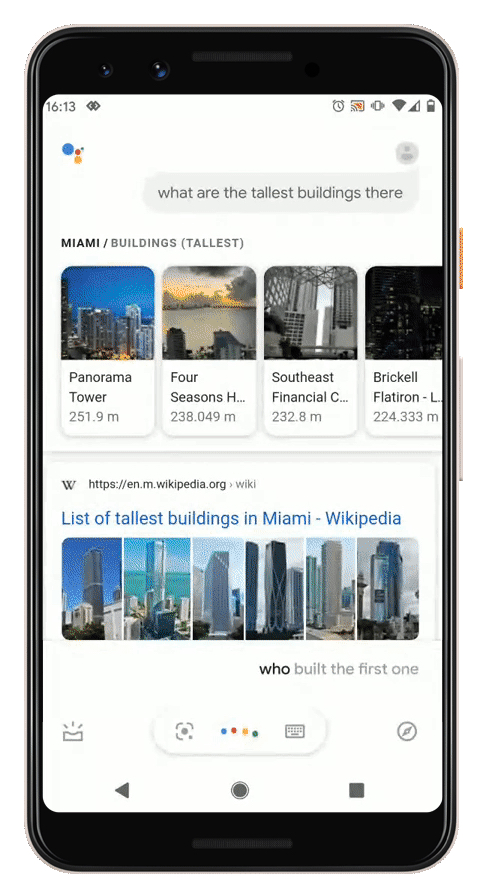

Beyond parsing commands, BERT is also being used to improve conversational quality. Assistant now takes your previous query and what’s displayed on your screen into account, enabling more natural follow-up questions without you having to repeat the topic.

> If you’re having a conversation with your Assistant about Miami and you want more information, it will know that when you say “show me the nicest beaches” you mean beaches in Miami. Assistant can also understand questions that are referring to what you’re looking at on your smartphone or tablet screen, like [who built the first one] or queries that look incomplete like [when] or [from its construction].

Author: Abner Li

Source: 9to5Google