Artistic composition tools imbued with AI are fast becoming old hat. Scientists at the MIT-IBM Watson AI Lab, a collaboration between MIT and IBM to jointly pursue AI techniques over the next decade, recently detailed a tool that lets users upload any photograph and edit the appearance of buildings, flora, and fixtures. And in March during its GPU Technology Conference (GTC) in San Jose, California, Nvidia took the wraps off of GauGAN, a generative adversarial AI system that lets users create lifelike landscape images that never existed.

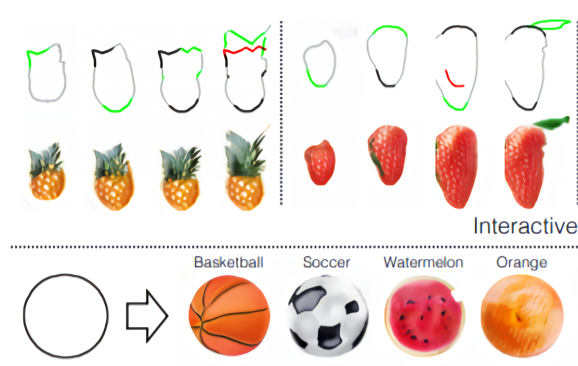

But researchers at the University of California at Berkeley, the University of Oxford, and Adobe Research hope to advance the field further with Interactive Sketch & Fill, a machine learning system that interactively recommends objects to users as they sketch them. It’s described in a newly published paper on the preprint server Arxiv.org (“Interactive Sketch & Fill: Multiclass Sketch-to-Image Translation”).

“[AI] image translation models have shown remarkable success at taking an abstract input, such as an edge map or a semantic segmentation map, and translating it to a real image,” wrote the coauthors. “Combining this with a user interface allows a user to quickly create images in the target domain. However … completing a line drawing without any feedback may prove difficult for many, as untrained practitioners generally struggle at free-hand drawing of accurate proportions of objects and their parts, 3D shapes and perspective. As a result, it is much easier with current interactive image translation methods to obtain realistic-looking images by editing existing images rather than creating images from scratch.”

The team tackled the image generation problem, which involved suggesting object shapes from user sketches and delivering previews of the finished work, with a multipart system. They designed shape and appearance completion modules to update recommended shapes based on the sketches, and they employed a generative adversarial network (GAN), a two-part neural network consisting of generators that produce samples and discriminators that attempt to distinguish between the generated samples and real-world samples, to help bolster the accuracy of the completed images.
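To make the generator-versus-discriminator idea concrete, here is a minimal sketch of the standard (non-saturating) GAN objective in plain Python. It is illustrative only: the function names are hypothetical, the inputs are raw discriminator scores (logits) rather than images, and it is not the loss formulation used in the Interactive Sketch & Fill paper, which the article does not specify.

```python
import math


def sigmoid(x: float) -> float:
    """Map a raw discriminator score (logit) to a probability of 'real'."""
    return 1.0 / (1.0 + math.exp(-x))


def bce(prob: float, target: float, eps: float = 1e-12) -> float:
    """Binary cross-entropy for one prediction, clamped for numerical safety."""
    prob = min(max(prob, eps), 1.0 - eps)
    return -(target * math.log(prob) + (1.0 - target) * math.log(1.0 - prob))


def discriminator_loss(real_logits, fake_logits):
    """The discriminator wants real samples scored as 1 and generated ones as 0."""
    real_term = sum(bce(sigmoid(z), 1.0) for z in real_logits) / len(real_logits)
    fake_term = sum(bce(sigmoid(z), 0.0) for z in fake_logits) / len(fake_logits)
    return real_term + fake_term


def generator_loss(fake_logits):
    """The generator wants its samples to be scored as real (target 1)."""
    return sum(bce(sigmoid(z), 1.0) for z in fake_logits) / len(fake_logits)


if __name__ == "__main__":
    # Hypothetical scores: positive means the discriminator leans toward "real".
    print(discriminator_loss([2.0, 1.5], [-1.0, -2.5]))
    print(generator_loss([-1.0, -2.5]))
```

In training, the two losses are minimized in alternation: the discriminator improves at telling generated samples from real ones, which in turn pushes the generator toward more realistic output.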

In an evaluation of the system’s robustness, the researchers sourced two open source data sets, edges2shoes and CelebA-HQ, for renderings of objects, the edges of which they simplified with a separate AI model to more closely resemble human-drawn strokes. After testing shape completion and image generation, they brought in a more challenging corpus containing 200 images of basketballs, chickens, cookies, cupcakes, moons, oranges, soccer balls, strawberries, watermelons, and pineapples gathered from popular internet search engines. The researchers say that the system generated images of the correct class the majority of the time in all tests, which they assert is an encouraging step toward a fully end-to-end system.

“[Our] two-stage approach for interactive object generation, centered around the idea of a shape completion intermediary … makes [AI model] training more stable and also allows us to give coarse geometric feedback to the user, which they can choose to integrate as they desire,” they wrote.

It’s worth noting that this isn’t Adobe’s first foray into AI-assisted art. In a paper published in June, researchers at the University of Maryland and Adobe Research described a novel machine learning system, LPaintB, that could reproduce hand-painted canvases in the style of Leonardo da Vinci, Vincent van Gogh, and Johannes Vermeer in less than a minute.

Author: Kyle Wiggers

Source: VentureBeat