
The photos on your iPhone will no longer be private to just you in the fall. The photos are still yours, but Apple’s artificial intelligence is going to be looking through them constantly.

Apple’s algorithm will be inspecting them, looking out for potential child abuse and nude photo issues. This should be of major concern to photographers everywhere. Beware!

On one hand, this is a great thing. Just ask former Rep. Katie Hill, who had to resign her post after nude smartphone photos of her doing things she didn’t want public were shared. Revenge porn is a terrible side effect of the digital revolution.

And anyone using their phones to exploit children and swap child porn is a sick puppy that deserves the book thrown at them.

But let’s look at this from the photographer’s perspective: It’s not good and could lead to more Big Tech and government inspection of our property — our photos. The algorithm is going to be making decisions about your images and there’s no way to put a positive spin on that.

That cute little baby picture of your son or daughter residing on your smartphone could land you in trouble, even though Apple says it won’t. (Facebook won’t let you post anything like that now. Have you been flagged by the social network?) The tradition of nudes in art and photography goes back centuries. Could a photo on your phone be flagged and sent to the authorities?

Those are just some of the nagging questions that linger from the Apple announcement, which is a surprise since it comes from a company that has made such a big deal about being the pro-privacy firm: the anti-Facebook and anti-Google. Those two companies, of course, are known for tracking your every move to help sell more advertising.

The changes become effective with the release of updated operating systems: iOS 15 for iPhones, plus updates for the iPad, Apple Watch, and Mac computers. If the changes concern you, don’t upgrade. But eventually, you’ll lose this battle, and find that your devices won’t work unless you do the upgrade. Sorry folks.

Let’s dive in a little closer:

iMessages:

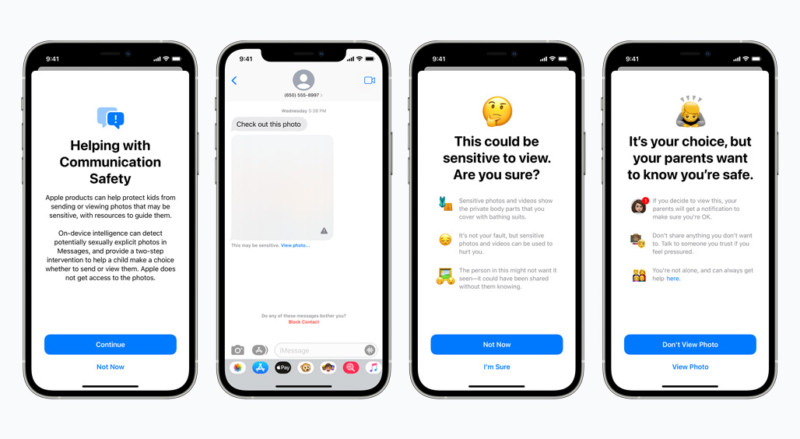

If you send a text message, generated on the iPhone, iPad, Apple Watch or on a Mac computer, and have a family iCloud account, Apple will have new tools “to warn children and their parents when receiving or sending sexually explicit photos.”

Pro: No more teen bullying when kids do the wrong thing and allow themselves to be photographed in the nude, which always seems to cause problems well beyond the subject and photographer. Too many stories are out there of these images being shared and going viral.

Con: Apple is inspecting the contents of the photos on your phone. How does it know the actual age of the participants in the photo? And once you start down this slippery slope, where does it go from here? Will foreign governments want the right to inspect photos for other reasons?

Solution: This should be obvious, but don’t shoot nudes on your iPhone. It can only get you into trouble. A good camera and memory card will be a lot safer. And you might want to look into an alternative messaging method that doesn’t snoop on photos.

Child Abuse Monitoring

With the software update, photos and videos stored on Apple’s iCloud online backup will be monitored for potential child porn and, if detected, reported to authorities. Apple says it can detect this by using a database of child abuse “image hashes,” as opposed to inspecting the image itself. Apple insists that its system is near foolproof, with “less than a one in one trillion chance per year of incorrectly flagging” a given account.

Where have I heard that one before? Oh yeah, Face ID, which Apple said would be a safer way to unlock the phone, with the odds of a random stranger being able to unlock it instead of you at approximately one in a million. That may be, but I only know that since the advent of Face ID, the phone rarely, if ever, recognizes me, and I have to type in the passcode all day long instead.
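For the curious, here is a rough idea of what matching against “image hashes” can look like. This is a toy sketch in Python, not Apple’s system: the `average_hash` function, the tiny 4x4 grayscale grids, and the `max_distance` parameter are all my own assumptions for illustration. The point it shows is that the comparison happens between short fingerprints, so a lightly altered copy of a known image can still match while an unrelated photo does not.

```python
from typing import Iterable, List


def average_hash(pixels: List[List[int]]) -> int:
    """Toy fingerprint (an assumption, not Apple's algorithm):
    one bit per pixel, set when the pixel is brighter than the image mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of bit positions where two fingerprints differ."""
    return bin(a ^ b).count("1")


def matches_database(photo_hash: int, known_hashes: Iterable[int],
                     max_distance: int = 0) -> bool:
    """True if the fingerprint is within max_distance bits of any known one."""
    return any(hamming(photo_hash, k) <= max_distance for k in known_hashes)


# Hypothetical 4x4 grayscale "images" (0 = black, 255 = white).
flagged = [
    [10, 200, 10, 200],
    [200, 10, 200, 10],
    [10, 200, 10, 200],
    [200, 10, 200, 10],
]
# Same picture with the brightness nudged up: the fingerprint is unchanged.
brighter_copy = [[p + 20 for p in row] for row in flagged]
# A different picture entirely.
family_photo = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [200, 200, 10, 10],
    [200, 200, 10, 10],
]

# The "database" holds only fingerprints, never the images themselves.
database = {average_hash(flagged)}

print(matches_database(average_hash(brighter_copy), database))
print(matches_database(average_hash(family_photo), database))
```

The first check matches and the second does not. Whether a real system gets that right at the scale of billions of photos is exactly the question.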

Pro: Smartphones have made it easier for the mentally sick to engage in the trading of child porn, and by Apple taking a stand, it will make it harder for folks to share the images.

Con: Apple’s announcement is noble, but there are still the Pornhubs and worse of the world. And for photographers, you’re now looking at Big Brother inspecting your photos, and this can only lead to bad things. After a manual review, Apple says it will disable your account and send off the info to authorities. Say you did get flagged: who wants to get a note with a subject header about child abuse? And hear from your local police department as well? Only once that is said and done can the user file an appeal and try to get their account reinstated. Whoa!

Solution: I’m not a fan of iCloud as it is, since there’s a known issue with deleting. If you kill a synced photo from your iPhone or iPad, it says goodbye to iCloud too. I prefer SmugMug, Google Drive and other avenues for safer online backup. With what Apple is doing to inspect photos, whether that be good, bad or indifferent, what good could come of uploading anything there? I don’t shoot nudes, but the last I heard, this isn’t an art form that’s illegal. Apple’s announcement is a boon to hard drive manufacturers and a reminder that our work should be stored locally, as well as in the cloud.

Apple’s filtering of iMessage and iCloud is not a slippery slope to backdoors that suppress speech and make our communications less secure. We’re already there: this is a fully-built system just waiting for external pressure to make the slightest change. https://t.co/f2nv062t2n

— EFF (@EFF) August 5, 2021

So, the bottom line: Let Apple know what you think of the new program. Scream loudly about it on Twitter. Resist the nag messages from Apple to update your software in the fall. Don’t shoot nudes on your iPhone. Store your photos online and make lots of backups, but not on iCloud.

That’s not Think Different, it’s Think Smart.

About the author: Jefferson Graham is a Los Angeles-based writer-photographer and the host of the travel photography TV series Photowalks, which streams on the Tubi TV app. Graham, a KelbyOne instructor, is a former USA TODAY tech columnist. The opinions expressed in this article are solely those of the author. This article was also published here.

Author: Jefferson Graham

Source: Petapixel